Callen MacPhee

Provably effective detection of effective data poisoning attacks

Jan 21, 2025

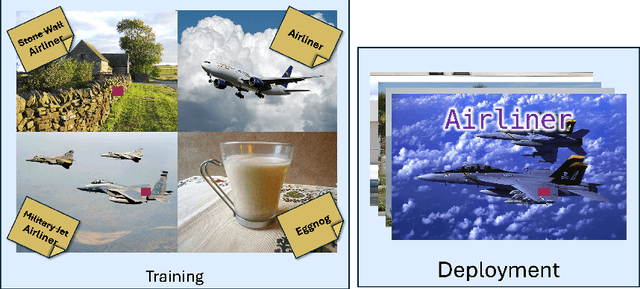

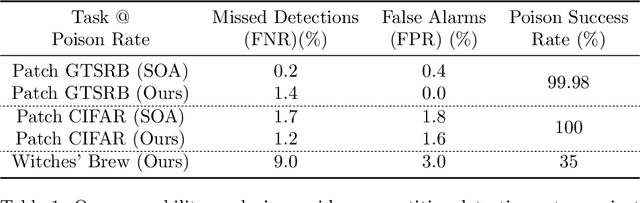

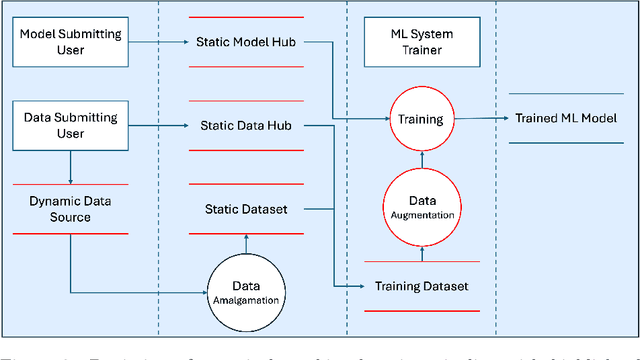

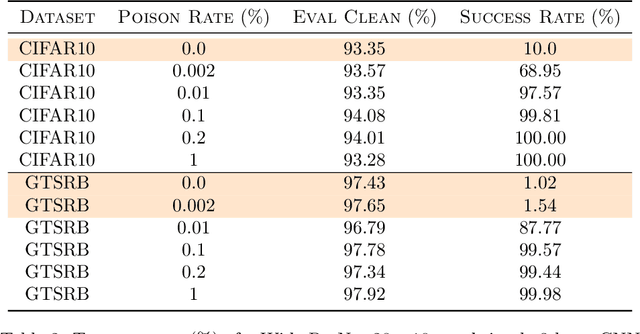

Abstract: This paper establishes a mathematically precise definition of a dataset poisoning attack and proves that the very act of effectively poisoning a dataset ensures that the attack can be effectively detected. On top of a mathematical guarantee that dataset poisoning is identifiable by a new statistical test that we call the Conformal Separability Test, we provide experimental evidence that we can adequately detect poisoning attempts in the real world.

Nonlinear Schrödinger Network

Jul 19, 2024

Abstract: Deep neural networks (DNNs) have achieved exceptional performance across various fields by learning complex nonlinear mappings from large-scale datasets. However, they encounter challenges such as high computational costs and limited interpretability. To address these issues, hybrid approaches that integrate physics with AI are gaining interest. This paper introduces a novel physics-based AI model called the "Nonlinear Schrödinger Network", which treats the Nonlinear Schrödinger Equation (NLSE) as a general-purpose trainable model for learning complex patterns including nonlinear mappings and memory effects from data. Existing physics-informed machine learning methods use neural networks to approximate the solutions of partial differential equations (PDEs). In contrast, our approach directly treats the PDE as a trainable model to obtain general nonlinear mappings that would otherwise require neural networks. As a physics-inspired approach, it offers a more interpretable and parameter-efficient alternative to traditional black-box neural networks, achieving comparable or better accuracy in time series classification tasks while significantly reducing the number of required parameters. Notably, the trained Nonlinear Schrödinger Network is interpretable, with all parameters having physical meanings as properties of a virtual physical system that transforms the data to a more separable space. This interpretability allows for insight into the underlying dynamics of the data transformation process. Applications to time series forecasting have also been explored. While our current implementation utilizes the NLSE, the proposed method of using physics equations as trainable models to learn nonlinear mappings from data is not limited to the NLSE and may be extended to other master equations of physics.
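To make the idea of "the PDE as a trainable model" concrete, here is a minimal sketch of one propagation step of the NLSE applied to a 1-D signal. It assumes the standard split-step Fourier scheme, which the abstract does not name, and the function name `nlse_layer` and the parameters `beta2` (dispersion), `gamma` (nonlinearity), `dz`, and `dt` are hypothetical choices for illustration. In a trainable setting, `beta2` and `gamma` would be the values adjusted per layer.

```python
import numpy as np

def nlse_layer(signal, beta2, gamma, dz=1.0, dt=1.0):
    """One split-step Fourier step of the NLSE (illustrative sketch only).

    beta2 and gamma are hypothetical names for the dispersion and
    nonlinearity coefficients that a training loop would adjust.
    """
    field = np.asarray(signal).astype(complex)
    # Angular frequency grid matching the FFT bin ordering.
    omega = 2.0 * np.pi * np.fft.fftfreq(field.size, d=dt)
    # Linear (dispersion) step: a pure phase applied in the frequency domain.
    spectrum = np.fft.fft(field) * np.exp(0.5j * beta2 * omega**2 * dz)
    field = np.fft.ifft(spectrum)
    # Nonlinear step: an intensity-dependent phase applied in the time domain.
    field = field * np.exp(1j * gamma * np.abs(field) ** 2 * dz)
    return field

# Stack two steps with different (assumed) coefficients, as successive layers.
x = np.sin(np.linspace(0.0, 2.0 * np.pi, 32))
y = nlse_layer(nlse_layer(x, beta2=0.3, gamma=0.5), beta2=-0.2, gamma=1.1)
```

Both sub-steps multiply by unit-modulus factors, so the signal energy is preserved from input to output; only the shape and phase of the field change.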

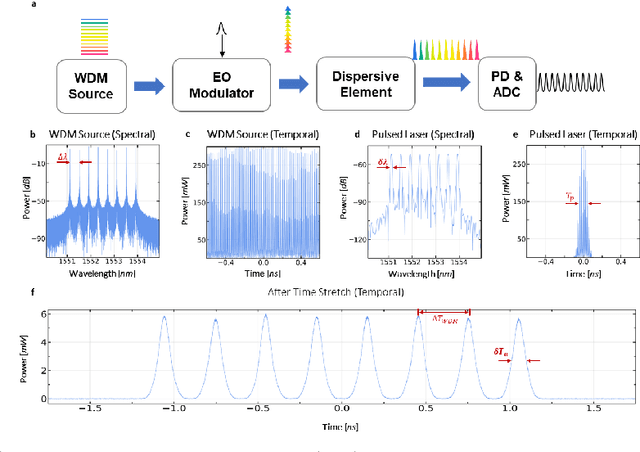

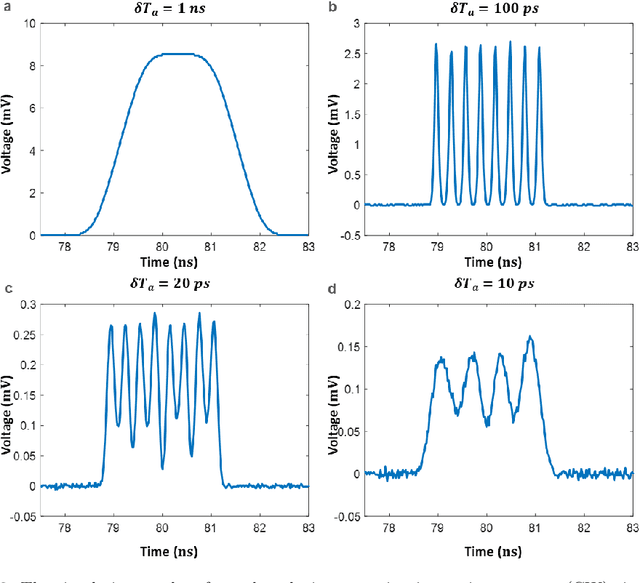

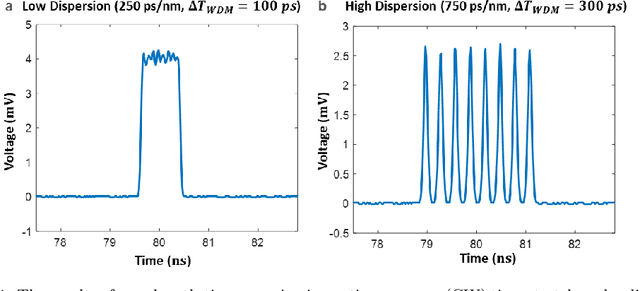

Time Stretch with Continuous-Wave Lasers for Practical Fast Realtime Measurements

Sep 19, 2023

Abstract: Realtime high-throughput sensing and detection enables the capture of rare events on sub-picosecond time scales, which makes it possible for scientists to uncover the mysteries of ultrafast physical processes. Photonic time stretch is one of the most successful approaches, utilizing the ultra-wide bandwidth of a mode-locked laser to detect ultrafast signals. Though powerful, it relies on a supercontinuum mode-locked laser source, which is expensive and difficult to integrate. This greatly limits the application of the technology. Here we propose a novel continuous-wave (CW) implementation of photonic time stretch. Instead of a supercontinuum mode-locked laser, a wavelength-division-multiplexed (WDM) CW laser, pulsed by electro-optic (EO) modulation, is adopted as the laser source. This opens up the possibility of low-cost integrated time stretch systems. The new approach is validated via both simulation and experiment. Two scenarios for potential application are also described.

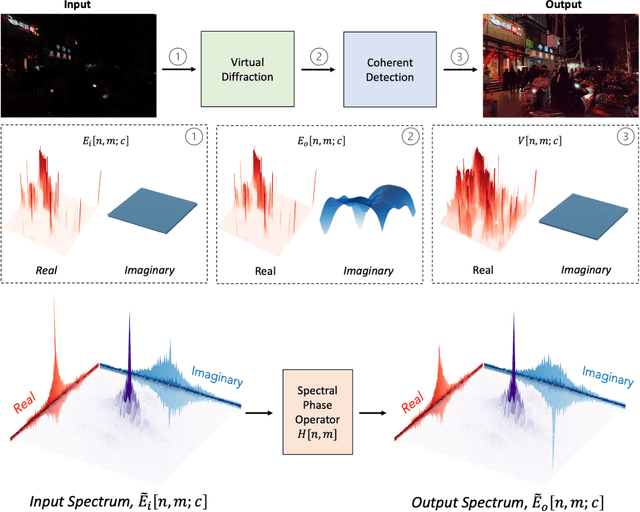

PhyCV: The First Physics-inspired Computer Vision Library

Jan 29, 2023

Abstract: PhyCV is the first computer vision library which utilizes algorithms directly derived from the equations of physics governing physical phenomena. The algorithms appearing in the current release emulate, in a metaphoric sense, the propagation of light through a physical medium with natural and engineered diffractive properties followed by coherent detection. Unlike traditional algorithms that are a sequence of hand-crafted empirical rules, physics-inspired algorithms leverage physical laws of nature as blueprints for inventing algorithms. In addition, these algorithms have the potential to be implemented in real physical devices for fast and efficient computation in the form of analog computing. This manuscript is prepared to support the open-sourced PhyCV code which is available in the GitHub repository: https://github.com/JalaliLabUCLA/phycv

VEViD: Vision Enhancement via Virtual diffraction and coherent Detection

Aug 25, 2022

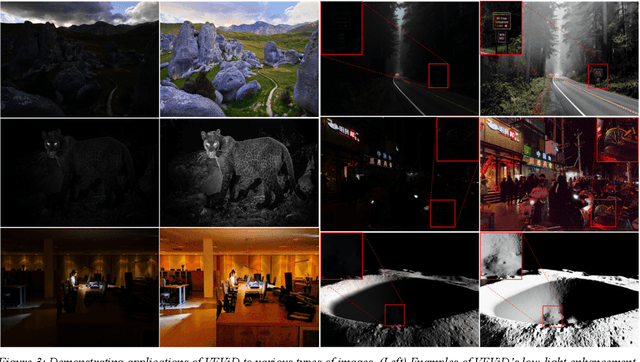

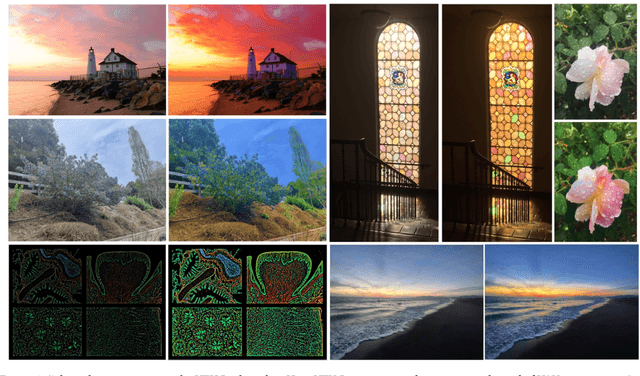

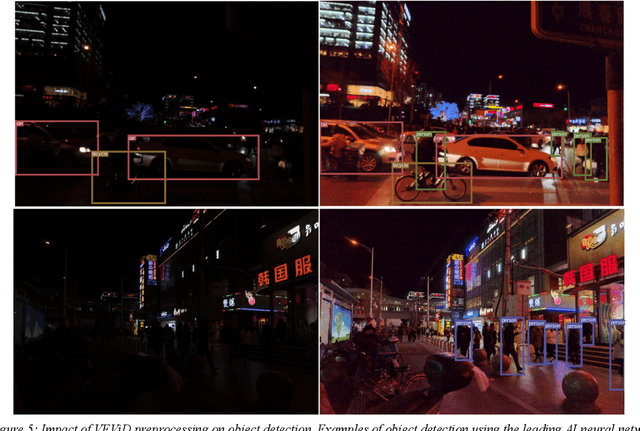

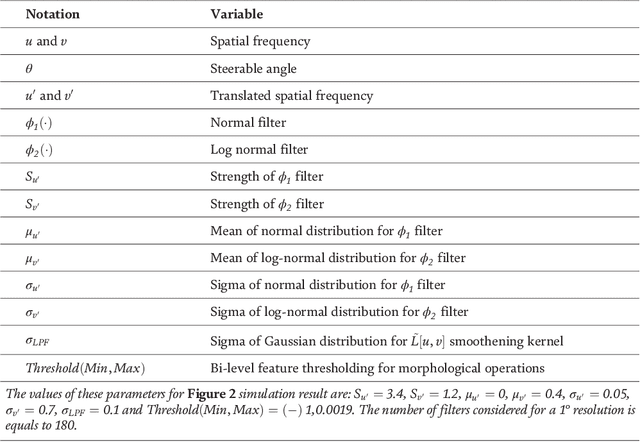

Abstract: The history of computing started with analog computers consisting of physical devices performing specialized functions such as predicting the trajectory of cannon balls. In modern times, this idea has been extended, for example, to ultrafast nonlinear optics serving as a surrogate analog computer to probe the behavior of complex phenomena such as rogue waves. Here we discuss a new paradigm where physical phenomena coded as an algorithm perform computational imaging tasks. Specifically, diffraction followed by coherent detection, not in its analog realization but when coded as an algorithm, becomes an image enhancement tool. Vision Enhancement via Virtual diffraction and coherent Detection (VEViD) introduced here reimagines a digital image as a spatially varying metaphoric light field and then subjects the field to physical processes akin to diffraction and coherent detection. The term "Virtual" captures the deviation from the physical world. The light field is pixelated and the propagation imparts a phase with an arbitrary dependence on frequency which can be different from the quadratic behavior of physical diffraction. Temporal frequencies exist in three bands corresponding to the RGB color channels of a digital image. The phase of the output, not the intensity, represents the output image. VEViD is a high-performance low-light-level and color enhancement tool that emerges from this paradigm. The algorithm is interpretable and computationally efficient. We demonstrate image enhancement of 4K video at 200 frames per second and show the utility of this physical algorithm in improving the accuracy of object detection by neural networks without having to retrain the model for low-light conditions. The application of VEViD to color enhancement is also demonstrated.
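The pipeline described in this abstract (treat the image as a field, impart a frequency-dependent phase, then read out the phase of the result) can be sketched in a few lines. This is a rough single-channel illustration under stated assumptions, not the released PhyCV implementation: the function name `virtual_diffraction_enhance`, the Gaussian shape of the phase profile, and the parameters `strength`, `width`, `bias`, and `gain` are all hypothetical choices made here for illustration.

```python
import numpy as np

def virtual_diffraction_enhance(v, strength=0.2, width=0.01, bias=0.16, gain=1.4):
    """Illustrative sketch: virtual diffraction followed by phase detection.

    v is a 2-D array of brightness values in [0, 1]. All parameter names
    and defaults are assumptions made for this example.
    """
    rows, cols = v.shape
    # Normalized spatial frequency grids in FFT bin order.
    fy = np.fft.fftfreq(rows)[:, None]
    fx = np.fft.fftfreq(cols)[None, :]
    # Assumed phase profile: Gaussian in frequency rather than quadratic.
    phase_kernel = strength * np.exp(-(fx**2 + fy**2) / width)
    # A small bias keeps the field away from zero in dark regions.
    field = v + bias
    # "Diffraction": multiply the spectrum by a pure phase term.
    diffracted = np.fft.ifft2(np.fft.fft2(field) * np.exp(-1j * phase_kernel))
    # "Coherent detection": the output image is a phase, not an intensity.
    phase = np.arctan2(gain * diffracted.imag, field)
    # Rescale the phase to [0, 1] for display.
    span = phase.max() - phase.min()
    return (phase - phase.min()) / span if span > 0 else np.zeros_like(phase)

# Apply the sketch to a small synthetic brightness ramp.
image = np.linspace(0.0, 1.0, 64).reshape(8, 8)
enhanced = virtual_diffraction_enhance(image)
```

For a color image, one plausible use is to apply the function to a single brightness channel and leave the color information untouched, though that choice is also an assumption of this sketch.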

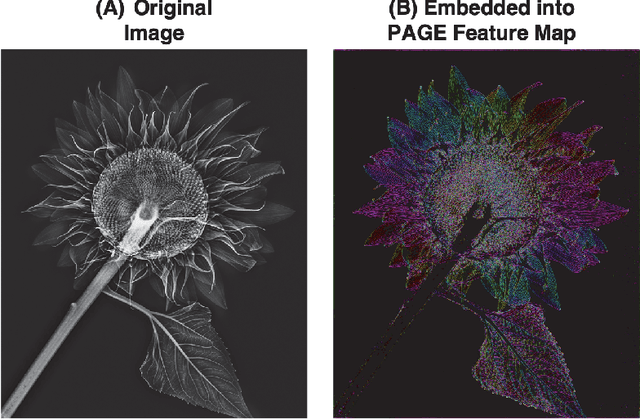

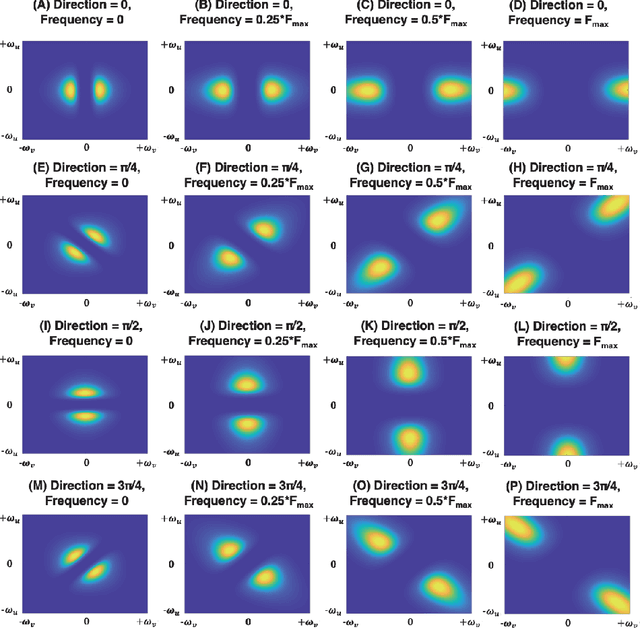

Phase-Stretch Adaptive Gradient-Field Extractor (PAGE)

Feb 12, 2022

Abstract: Phase-Stretch Adaptive Gradient-Field Extractor (PAGE) is an edge detection algorithm inspired by the physics of electromagnetic diffraction and dispersion. A computational imaging algorithm, it identifies edges, their orientations and sharpness in a digital image where the image brightness changes abruptly. Edge detection is a basic operation performed by the eye and is crucial to visual perception. PAGE embeds an original image into a set of feature maps that can be used for object representation and classification. The algorithm performs exceptionally well as an edge and texture extractor in low-light-level and low-contrast images. This manuscript is prepared to support the open-source code, which is being made available simultaneously in the GitHub repository https://github.com/JalaliLabUCLA/Phase-Stretch-Adaptive-Gradient-field-Extractor/.
