Tingyi Zhou

Nonlinear Schrödinger Network

Jul 19, 2024

Abstract: Deep neural networks (DNNs) have achieved exceptional performance across various fields by learning complex nonlinear mappings from large-scale datasets. However, they encounter challenges such as high computational costs and limited interpretability. To address these issues, hybrid approaches that integrate physics with AI are gaining interest. This paper introduces a novel physics-based AI model called the "Nonlinear Schrödinger Network", which treats the Nonlinear Schrödinger Equation (NLSE) as a general-purpose trainable model for learning complex patterns, including nonlinear mappings and memory effects, from data. Existing physics-informed machine learning methods use neural networks to approximate the solutions of partial differential equations (PDEs). In contrast, our approach directly treats the PDE as a trainable model to obtain general nonlinear mappings that would otherwise require neural networks. As a physics-inspired approach, it offers a more interpretable and parameter-efficient alternative to traditional black-box neural networks, achieving comparable or better accuracy in time series classification tasks while significantly reducing the number of required parameters. Notably, the trained Nonlinear Schrödinger Network is interpretable, with all parameters having physical meanings as properties of a virtual physical system that transforms the data to a more separable space. This interpretability allows for insight into the underlying dynamics of the data transformation process. Applications to time series forecasting have also been explored. While our current implementation utilizes the NLSE, the proposed method of using physics equations as trainable models to learn nonlinear mappings from data is not limited to the NLSE and may be extended to other master equations of physics.
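To make the idea of "the NLSE as a trainable model" concrete, the following is a minimal sketch and not the paper's implementation. It assumes the common split-step Fourier discretization of a lossless NLSE, in which each layer applies a dispersion phase in the frequency domain followed by an intensity-dependent phase in the time domain. The names `nlse_layer`, `nlse_forward`, `beta2`, and `gamma` are hypothetical, as are the sign convention and the unit step sizes; in a trainable setting the per-layer `(beta2, gamma)` pairs would be the learned parameters.

```python
import numpy as np

def nlse_layer(field, beta2, gamma, dt=1.0, dz=1.0):
    """One split step of a lossless NLSE (assumed discretization).

    beta2: dispersion coefficient of this layer (hypothetical trainable parameter)
    gamma: nonlinear coefficient of this layer (hypothetical trainable parameter)
    """
    # Angular frequency grid matching numpy's FFT ordering.
    omega = 2 * np.pi * np.fft.fftfreq(field.size, d=dt)
    # Linear step: dispersion is a quadratic phase in the frequency domain.
    field = np.fft.ifft(np.fft.fft(field) * np.exp(0.5j * beta2 * omega**2 * dz))
    # Nonlinear step: phase rotation proportional to the local intensity.
    return field * np.exp(1j * gamma * np.abs(field) ** 2 * dz)

def nlse_forward(signal, params):
    """Propagate a real 1-D time series through a stack of NLSE layers.

    params: iterable of (beta2, gamma) pairs, one pair per layer.
    Returns the output intensity, which a downstream classifier could consume.
    """
    field = np.asarray(signal, dtype=complex)
    for beta2, gamma in params:
        field = nlse_layer(field, beta2, gamma)
    return np.abs(field) ** 2

# Usage: a Gaussian-shaped input passed through two layers with illustrative values.
x = np.exp(-np.linspace(-4, 4, 64) ** 2)
features = nlse_forward(x, [(0.3, 0.5), (-0.2, 0.1)])
```

Both steps only multiply by unit-modulus phases, so the total signal energy is preserved; under these assumptions the model has two parameters per layer, which illustrates the parameter efficiency the abstract refers to.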

Time Stretch with Continuous-Wave Lasers for Practical Fast Realtime Measurements

Sep 19, 2023

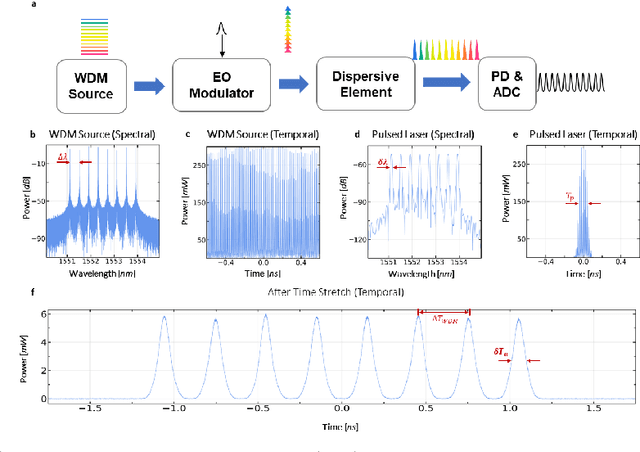

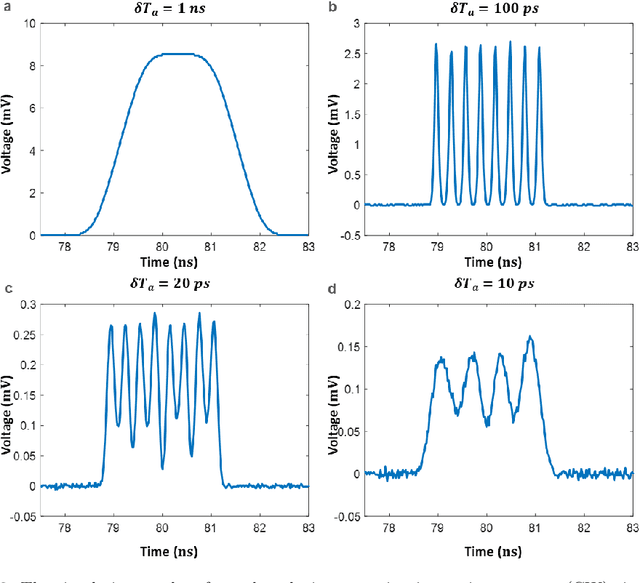

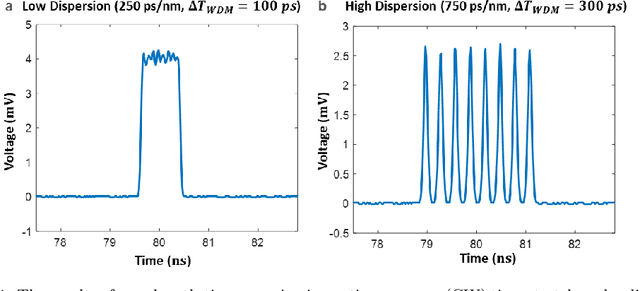

Abstract: Realtime high-throughput sensing and detection enable the capture of rare events on sub-picosecond time scales, allowing scientists to study ultrafast physical processes. Photonic time stretch is one of the most successful approaches; it exploits the ultra-wide bandwidth of a mode-locked laser to detect ultrafast signals. Though powerful, it relies on a supercontinuum mode-locked laser source, which is expensive and difficult to integrate, and this greatly limits the application of the technology. Here we propose a novel continuous-wave (CW) implementation of photonic time stretch. Instead of a supercontinuum mode-locked laser, a wavelength-division-multiplexed (WDM) CW laser, pulsed by electro-optic (EO) modulation, is adopted as the laser source. This opens up the possibility of low-cost integrated time stretch systems. The new approach is validated by both simulation and experiment. Two potential application scenarios are also described.

Low Latency Computing for Time Stretch Instruments

Apr 05, 2023

Abstract: Time stretch instruments have been exceptionally successful in discovering single-shot ultrafast phenomena such as optical rogue waves and have led to record-speed microscopy, spectroscopy, lidar, etc. These instruments encode the ultrafast events into the spectrum of a femtosecond pulse and then dilate the time scale of the data using group velocity dispersion. Generating as much as Tbit per second of data, they are ideal partners for deep learning networks, which, by their inherent complexity, require large datasets for training. However, the inference time of neural networks, in the millisecond regime, is orders of magnitude longer than the data acquisition time scale of time stretch instruments. This underscores the need to explore means by which some of the lower-level computational tasks can be done while the data is still in the optical domain. Nonlinear Schrödinger Kernel computing addresses this predicament. It utilizes optical nonlinearities to map the data onto a new domain in which classification accuracy is enhanced, without increasing the data dimensions. One limitation of this technique is the fixed optical transfer function, which prevents training and generalizability. Here we show that the optical kernel can be effectively tuned and trained by utilizing digital phase encoding of the femtosecond laser pulse, leading to a reduction of the error rate in data classification.
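The dilation of the time scale by group velocity dispersion can be illustrated numerically. The sketch below is an illustration under stated assumptions, not a model of any specific instrument: it applies a quadratic spectral phase to a transform-limited Gaussian pulse and compares the measured broadening with the textbook result for Gaussian pulses, a width ratio of sqrt(1 + (GDD / T0^2)^2). The function names, the arbitrary units, and the values of `t0` and `gdd` are hypothetical.

```python
import numpy as np

def rms_width(t, intensity):
    """Root-mean-square duration of an intensity profile."""
    mean = np.sum(t * intensity) / np.sum(intensity)
    return np.sqrt(np.sum((t - mean) ** 2 * intensity) / np.sum(intensity))

def disperse(field, dt, gdd):
    """Apply group delay dispersion as a quadratic phase in the frequency domain."""
    omega = 2 * np.pi * np.fft.fftfreq(field.size, d=dt)
    return np.fft.ifft(np.fft.fft(field) * np.exp(0.5j * gdd * omega ** 2))

# Illustrative values in arbitrary units (assumptions, not taken from the abstract).
t0 = 1.0    # initial Gaussian width parameter
gdd = 10.0  # accumulated group delay dispersion (beta2 times length)
dt = 0.05   # time-grid spacing
t = (np.arange(4096) - 2048) * dt

pulse = np.exp(-t ** 2 / (2 * t0 ** 2)).astype(complex)
stretched = disperse(pulse, dt, gdd)

# Ratio of output to input duration, measured and predicted.
measured = rms_width(t, np.abs(stretched) ** 2) / rms_width(t, np.abs(pulse) ** 2)
predicted = np.sqrt(1 + (gdd / t0 ** 2) ** 2)
```

When `gdd` is much larger than `t0**2`, the stretched waveform in time approaches a scaled copy of the pulse spectrum, which is why information encoded in the spectrum can be read out with slower electronics.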
