C. L. Morris

Los Alamos National Laboratory, Los Alamos, NM, USA

First experimental study of multiple orientation muon tomography, with image optimization in sparse data environments

Oct 08, 2024

Abstract: Due to the high penetrating power of cosmic ray muons, they can be used to probe very thick and dense objects. As charged particles, they can be tracked by ionization detectors, which determine the position and direction of each muon. With detectors on either side of an object, changes in particle direction can be used to extract scattering information within the object. This can be used to produce a scattering intensity image of the object's interior, related to density and atomic number. Such imaging is typically performed with a single detector-object orientation, taking advantage of the more intense downward flux of muons. This produces planar imaging with some depth-of-field information in the third dimension. Several simulation studies of multi-orientation tomography have been published; this approach can form a three-dimensional representation faster than a single-orientation view. In this work we present the first experimental multiple orientation muon tomography study. Experimental muon-scatter based tomography was performed using a concrete-filled steel drum, with several different metal wedges inside, placed between detector planes. Data were collected from different detector-object orientations by rotating the steel drum. The data collected from each orientation were then combined using two different tomographic methods. Results showed that combining multiple depth-of-field reconstructions, rather than applying the inverse Radon transform approach traditionally used for CT, produced more useful images for sparser data. Because cosmic ray muon flux imaging is rate limited, the imaging techniques were compared for sparse data. With the combined depth-of-field reconstruction technique, fewer detector-object orientations were needed to reconstruct images that could differentiate the metal wedge compositions.
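The abstract's first step is to track each muon on both sides of the object and measure how much its direction changed. The short Python sketch below illustrates only that per-muon step. It makes several assumptions not taken from the paper:

- Each track is a straight line defined by two hits, one in each of two detector planes.
- Coordinates are arbitrary (x, y, z) values in cm.
- The helper names `track_direction` and `scattering_angle` are hypothetical.

It does not implement the paper's depth-of-field or inverse Radon reconstructions.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def track_direction(hit_a: Sequence[float], hit_b: Sequence[float]) -> Vec3:
    """Unit vector from hit_a to hit_b (two detector-plane hits on one straight track).

    Hypothetical helper: assumes the track is a straight line through both hits.
    """
    d = [b - a for a, b in zip(hit_a, hit_b)]
    norm = math.sqrt(sum(c * c for c in d))
    if norm == 0.0:
        raise ValueError("hits coincide; direction is undefined")
    return (d[0] / norm, d[1] / norm, d[2] / norm)


def scattering_angle(d_in: Vec3, d_out: Vec3) -> float:
    """3D angle in radians between the incoming and outgoing track directions.

    Uses atan2(|d_in x d_out|, d_in . d_out), which stays accurate for the
    small angles typical of muon scattering, where acos(dot) loses precision.
    """
    cx = d_in[1] * d_out[2] - d_in[2] * d_out[1]
    cy = d_in[2] * d_out[0] - d_in[0] * d_out[2]
    cz = d_in[0] * d_out[1] - d_in[1] * d_out[0]
    cross = math.sqrt(cx * cx + cy * cy + cz * cz)
    dot = sum(a * b for a, b in zip(d_in, d_out))
    return math.atan2(cross, dot)


if __name__ == "__main__":
    # Hypothetical hits (x, y, z) in cm: two planes above and two below the object.
    d_in = track_direction((0.0, 0.0, 100.0), (0.0, 0.0, 50.0))
    d_out = track_direction((0.0, 0.0, -50.0), (1.0, 0.0, -100.0))
    theta = scattering_angle(d_in, d_out)
    print(f"scattering angle = {theta * 1e3:.2f} mrad")  # prints "scattering angle = 20.00 mrad"
```

A reconstruction would then aggregate many such angles over positions in the object to build a scattering intensity image. That step is method-specific and is not shown here.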

Neural Network Methods for Radiation Detectors and Imaging

Nov 09, 2023

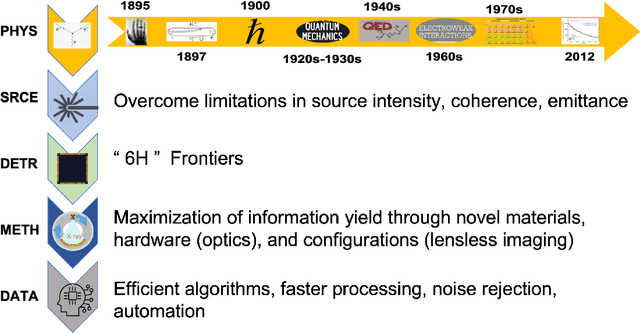

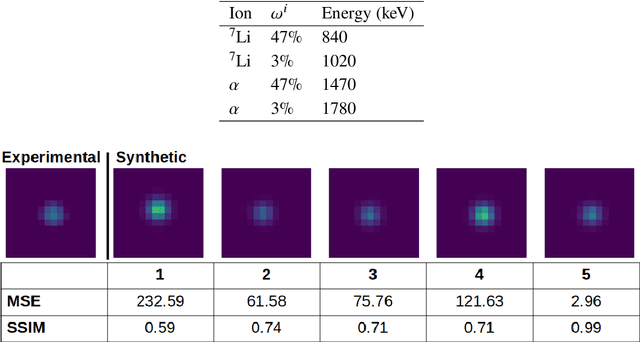

Abstract: Recent advances in image data processing through machine learning, especially deep neural networks (DNNs), allow for new optimization and performance-enhancement schemes for radiation detectors and imaging hardware through data-endowed artificial intelligence. We give an overview of data generation at photon sources, deep learning-based methods for image processing tasks, and hardware solutions for deep learning acceleration. Most existing deep learning approaches are trained offline, typically using large amounts of computational resources. However, once trained, DNNs can achieve fast inference speeds and can be deployed to edge devices. A new trend is edge computing with lower energy consumption (hundreds of watts or less) and real-time analysis potential. While popularly used for edge computing, electronic hardware accelerators, ranging from general-purpose processors such as central processing units (CPUs) to application-specific integrated circuits (ASICs), are constantly reaching performance limits in latency, energy consumption, and other physical constraints. These limits give rise to next-generation analog neuromorphic hardware platforms, such as optical neural networks (ONNs), for highly parallel, low-latency, and low-energy computing to boost deep learning acceleration.

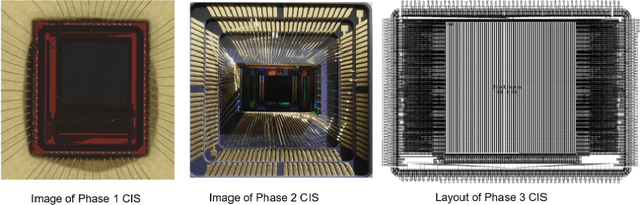

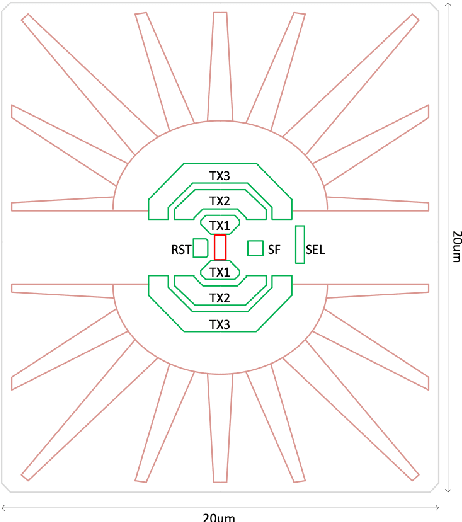

Ultrafast CMOS image sensors and data-enabled super-resolution for multimodal radiographic imaging and tomography

Jan 27, 2023

Abstract: We summarize recent progress in ultrafast Complementary Metal Oxide Semiconductor (CMOS) image sensor development and the application of neural networks for post-processing of CMOS and charge-coupled device (CCD) image data to achieve sub-pixel resolution (thus *super-resolution*). The combination of novel CMOS pixel designs and data-enabled image post-processing provides a promising path towards ultrafast, high-resolution, multi-modal radiographic imaging and tomography applications.
