C. -H. Luke Ong

Quantitative Verification of Omega-regular Properties in Probabilistic Programming

Dec 25, 2025Abstract:Probabilistic programming provides a high-level framework for specifying statistical models as executable programs with built-in randomness and conditioning. Existing inference techniques, however, typically compute posterior distributions over program states at fixed time points, most often at termination, thereby failing to capture the temporal evolution of probabilistic behaviors. We introduce temporal posterior inference (TPI), a new framework that unifies probabilistic programming with temporal logic by computing posterior distributions over execution traces that satisfy omega-regular specifications, conditioned on possibly temporal observations. To obtain rigorous quantitative guarantees, we develop a new method for computing upper and lower bounds on the satisfaction probabilities of omega-regular properties. Our approach decomposes Rabin acceptance conditions into persistence and recurrence components and constructs stochastic barrier certificates that soundly bound each component. We implement our approach in a prototype tool, TPInfer, and evaluate it on a suite of benchmarks, demonstrating effective and efficient inference over rich temporal properties in probabilistic models.

Diagonalisation SGD: Fast & Convergent SGD for Non-Differentiable Models via Reparameterisation and Smoothing

Feb 20, 2024Abstract:It is well-known that the reparameterisation gradient estimator, which exhibits low variance in practice, is biased for non-differentiable models. This may compromise correctness of gradient-based optimisation methods such as stochastic gradient descent (SGD). We introduce a simple syntactic framework to define non-differentiable functions piecewisely and present a systematic approach to obtain smoothings for which the reparameterisation gradient estimator is unbiased. Our main contribution is a novel variant of SGD, Diagonalisation Stochastic Gradient Descent, which progressively enhances the accuracy of the smoothed approximation during optimisation, and we prove convergence to stationary points of the unsmoothed (original) objective. Our empirical evaluation reveals benefits over the state of the art: our approach is simple, fast, stable and attains orders of magnitude reduction in work-normalised variance.

Fast and Correct Gradient-Based Optimisation for Probabilistic Programming via Smoothing

Jan 09, 2023

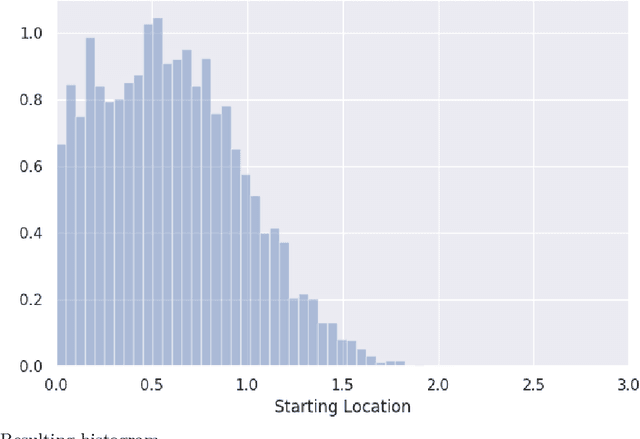

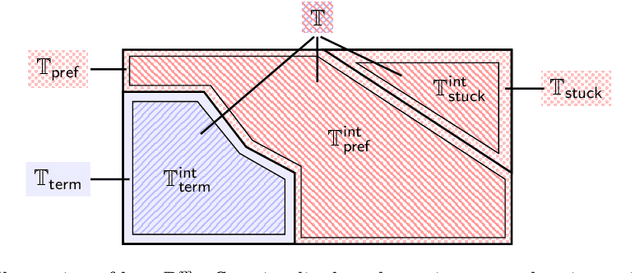

Abstract:We study the foundations of variational inference, which frames posterior inference as an optimisation problem, for probabilistic programming. The dominant approach for optimisation in practice is stochastic gradient descent. In particular, a variant using the so-called reparameterisation gradient estimator exhibits fast convergence in a traditional statistics setting. Unfortunately, discontinuities, which are readily expressible in programming languages, can compromise the correctness of this approach. We consider a simple (higher-order, probabilistic) programming language with conditionals, and we endow our language with both a measurable and a smoothed (approximate) value semantics. We present type systems which establish technical pre-conditions. Thus we can prove stochastic gradient descent with the reparameterisation gradient estimator to be correct when applied to the smoothed problem. Besides, we can solve the original problem up to any error tolerance by choosing an accuracy coefficient suitably. Empirically we demonstrate that our approach has a similar convergence as a key competitor, but is simpler, faster, and attains orders of magnitude reduction in work-normalised variance.

Densities of almost-surely terminating probabilistic programs are differentiable almost everywhere

Apr 08, 2020

Abstract:We study the differential properties of higher-order statistical probabilistic programs with recursion and conditioning. Our starting point is an open problem posed by Hongseok Yang: what class of statistical probabilistic programs have densities that are differentiable almost everywhere? To formalise the problem, we consider Statistical PCF (SPCF), an extension of call-by-value PCF with real numbers, and constructs for sampling and conditioning. We give SPCF a sampling-style operational semantics a la Borgstrom et al., and study the associated weight (commonly referred to as the density) function and value function on the set of possible execution traces. Our main result is that almost-surely terminating SPCF programs, generated from a set of primitive functions (e.g. the set of analytic functions) satisfying mild closure properties, have weight and value functions that are almost-everywhere differentiable. We use a stochastic form of symbolic execution to reason about almost-everywhere differentiability. A by-product of this work is that almost-surely terminating deterministic (S)PCF programs with real parameters denote functions that are almost-everywhere differentiable. Our result is of practical interest, as almost-everywhere differentiability of the density function is required to hold for the correctness of major gradient-based inference algorithms.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge