Burak Kakillioglu

Background-Aware 3D Point Cloud Segmentation with Dynamic Point Feature Aggregation

Nov 14, 2021

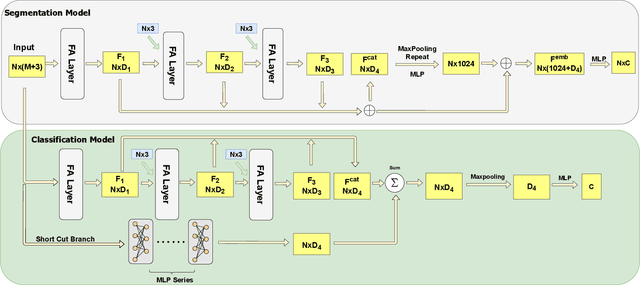

Abstract: With the proliferation of Lidar sensors and 3D vision cameras, 3D point cloud analysis has attracted significant attention in recent years. After the success of the pioneering work PointNet, deep learning-based methods have been increasingly applied to various tasks, including 3D point cloud segmentation and 3D object classification. In this paper, we propose a novel 3D point cloud learning network, referred to as Dynamic Point Feature Aggregation Network (DPFA-Net), which selectively performs neighborhood feature aggregation with dynamic pooling and an attention mechanism. DPFA-Net has two variants, one for semantic segmentation and one for classification of 3D point clouds. As the core module of DPFA-Net, we propose a Feature Aggregation layer, in which features of the dynamic neighborhood of each point are aggregated via a self-attention mechanism. In contrast to other segmentation models, which aggregate features from fixed neighborhoods, our approach can aggregate features from different neighbors in different layers, providing a more selective and broader view to the query points and focusing more on the relevant features in a local neighborhood. In addition, to further improve the performance of the proposed semantic segmentation model, we present two novel approaches, namely Two-Stage BF-Net and BF-Regularization, to exploit the background-foreground information. Experimental results show that the proposed DPFA-Net achieves state-of-the-art overall accuracy for semantic segmentation on the S3DIS dataset and provides consistently satisfactory performance across the tasks of semantic segmentation, part segmentation, and 3D object classification. It is also computationally more efficient than other methods.
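The idea of aggregating over neighborhoods that change from layer to layer can be illustrated with a minimal sketch. This is not the DPFA-Net Feature Aggregation layer: the abstract does not specify how neighbors are selected or scored, so the sketch assumes k-nearest neighbors in the current feature space and plain dot-product attention, and all function names (`knn_indices`, `softmax`, `aggregate`) are hypothetical.

```python
import math

def knn_indices(features, query_idx, k):
    """Indices of the k points closest to features[query_idx], excluding itself."""
    q = features[query_idx]
    dists = [
        (sum((a - b) ** 2 for a, b in zip(q, f)), i)
        for i, f in enumerate(features)
        if i != query_idx
    ]
    dists.sort()
    return [i for _, i in dists[:k]]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def aggregate(features, k):
    """Replace each point's feature by an attention-weighted mean of its neighbors."""
    out = []
    for i, q in enumerate(features):
        nbrs = knn_indices(features, i, k)
        # Assumed scoring function: dot product between query and neighbor features.
        scores = [sum(a * b for a, b in zip(q, features[j])) for j in nbrs]
        weights = softmax(scores)
        out.append([
            sum(w * features[j][d] for w, j in zip(weights, nbrs))
            for d in range(len(q))
        ])
    return out

points = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
layer1 = aggregate(points, k=2)
# Neighbors are recomputed from layer1's features, so the neighborhood
# of a point in this layer can differ from its neighborhood in the first.
layer2 = aggregate(layer1, k=2)
```

Because `knn_indices` is called on the features produced by the previous layer, each layer may attend to a different set of neighbors, which is the contrast with fixed-neighborhood aggregation that the abstract draws.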

Autonomously and Simultaneously Refining Deep Neural Network Parameters by a Bi-Generative Adversarial Network Aided Genetic Algorithm

Sep 24, 2018

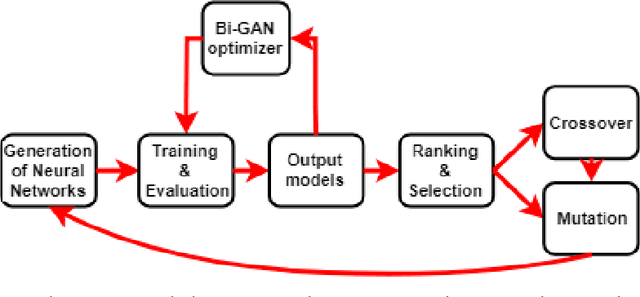

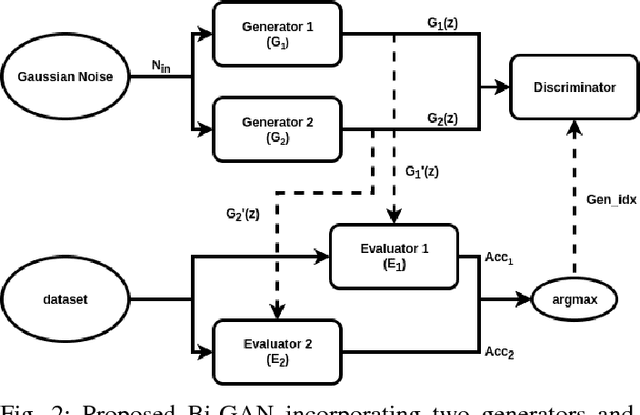

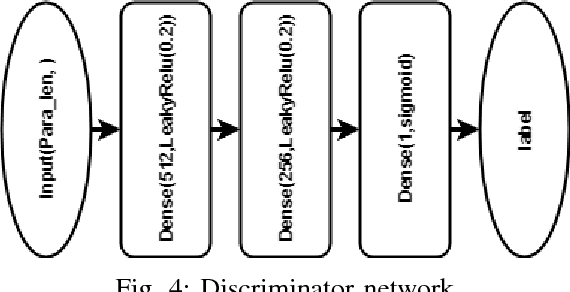

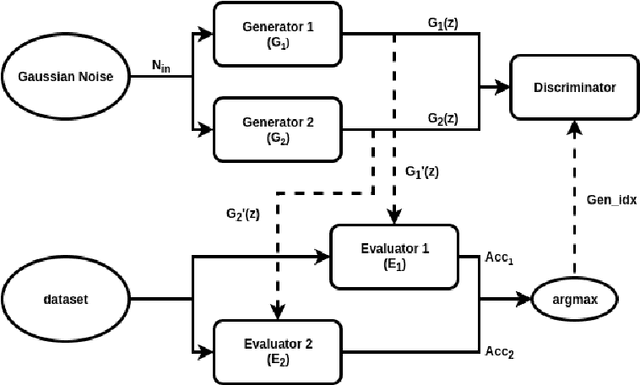

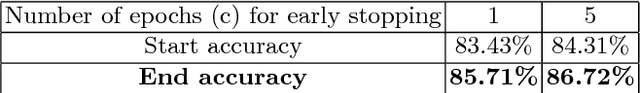

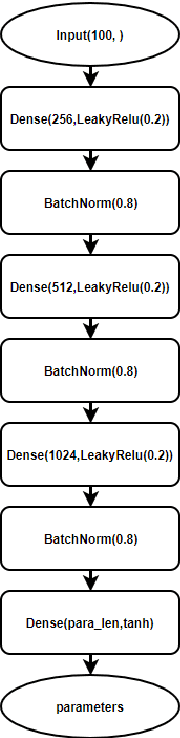

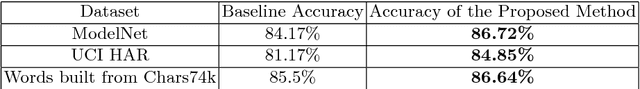

Abstract: The choice of parameters and the design of the network architecture are important factors affecting the performance of deep neural networks. Genetic Algorithms (GA) have been used before to determine the parameters of a network. Yet GAs perform a finite search over a discrete set of pre-defined candidates and cannot, in general, generate unseen configurations. In this paper, to move from exploration to exploitation, we propose a novel and systematic method that autonomously and simultaneously optimizes multiple parameters of any deep neural network by using a GA aided by a bi-generative adversarial network (Bi-GAN). The proposed Bi-GAN allows the autonomous exploitation and choice of the number of neurons for fully-connected layers and the number of filters for convolutional layers from a large range of values. Our proposed Bi-GAN involves two generators, and two different models compete and improve each other progressively with a GAN-based strategy to optimize the networks during GA evolution. Our proposed approach can be used to autonomously refine the number of convolutional and dense layers, the number and size of kernels, and the number of neurons for the dense layers; choose the type of activation function; and decide whether to use dropout and batch normalization, in order to improve the accuracy of different deep neural network architectures. Without loss of generality, the proposed method has been tested with the ModelNet database and compared with 3D ShapeNets and two GA-only methods. The results show that the presented approach can simultaneously and successfully optimize multiple neural network parameters and achieve higher accuracy even with shallower networks.
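The limitation of a GA-only search noted in the abstract, that it explores only a discrete set of pre-defined candidates, can be seen in a minimal sketch. This is a generic genetic algorithm, not the Bi-GAN-aided method of the paper; the candidate values in `CANDIDATES`, the `toy_fitness` function (a stand-in for validation accuracy), and all other names are hypothetical.

```python
import random

# Hypothetical pre-defined candidate values for each parameter.
CANDIDATES = {
    "neurons": [64, 128, 256, 512],
    "activation": ["relu", "tanh"],
    "dropout": [False, True],
}

def random_config(rng):
    """Draw one configuration from the candidate sets."""
    return {key: rng.choice(values) for key, values in CANDIDATES.items()}

def toy_fitness(config):
    """Placeholder score; a real search would train and evaluate a network."""
    score = -abs(config["neurons"] - 256) / 256
    score += 0.1 if config["activation"] == "relu" else 0.0
    score += 0.05 if config["dropout"] else 0.0
    return score

def crossover(a, b, rng):
    """Take each parameter from one of the two parents."""
    return {key: rng.choice([a[key], b[key]]) for key in CANDIDATES}

def mutate(config, rng, rate=0.2):
    """Resample a parameter from its candidate set with probability `rate`."""
    return {
        key: (rng.choice(CANDIDATES[key]) if rng.random() < rate else value)
        for key, value in config.items()
    }

def evolve(generations=10, population_size=8, seed=0):
    rng = random.Random(seed)
    population = [random_config(rng) for _ in range(population_size)]
    for _ in range(generations):
        # Keep the better half, refill with mutated offspring of survivors.
        population.sort(key=toy_fitness, reverse=True)
        parents = population[: population_size // 2]
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children
    return max(population, key=toy_fitness)

best = evolve()
```

Crossover and mutation here only recombine or resample values already listed in `CANDIDATES`, so a value such as 300 neurons can never appear. That is the gap the abstract says the Bi-GAN is meant to address by choosing from a large range of values.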

Autonomously and Simultaneously Refining Deep Neural Network Parameters by Generative Adversarial Networks

May 24, 2018

Abstract: The choice of parameters and the design of the network architecture are important factors affecting the performance of deep neural networks. However, there has not been much work on developing an established and systematic way of building the structure and choosing the parameters of a neural network, and this task depends heavily on trial and error and empirical results. Considering that there are many design and parameter choices, such as the number of neurons in each layer, the type of activation function, and whether to use dropout, it is very hard to cover every configuration and find the optimal structure. In this paper, we propose a novel and systematic method that autonomously and simultaneously optimizes multiple parameters of any given deep neural network by using a generative adversarial network (GAN). In our proposed approach, two different models compete and improve each other progressively with a GAN-based strategy. Our proposed approach can be used to autonomously refine the parameters and improve the accuracy of different deep neural network architectures. Without loss of generality, the proposed method has been tested with three different neural network architectures and three very different datasets and applications. The results show that the presented approach can simultaneously and successfully optimize multiple neural network parameters and achieve increased accuracy in all three scenarios.
