Bruno Sudret

Probabilistic function-on-function nonlinear autoregressive model for emulation and reliability analysis of dynamical systems

Feb 02, 2026

Abstract: Constructing accurate and computationally efficient surrogate models (or emulators) for predicting dynamical system responses is critical in many engineering domains, yet remains challenging due to the strongly nonlinear and high-dimensional mapping from external excitations and system parameters to system responses. This work introduces a novel Function-on-Function Nonlinear AutoRegressive model with eXogenous inputs (F2NARX), which reformulates the conventional NARX model from a function-on-function regression perspective, inspired by the recently proposed $\mathcal{F}$-NARX method. The proposed framework substantially improves predictive efficiency while maintaining high accuracy. By combining principal component analysis with Gaussian process regression, F2NARX further enables probabilistic predictions of dynamical responses via the unscented transform in an autoregressive manner. The effectiveness of the method is demonstrated through case studies of varying complexity. Results show that F2NARX outperforms the state-of-the-art NARX model by orders of magnitude in efficiency while generally achieving higher accuracy. Moreover, its probabilistic prediction capabilities facilitate active learning, enabling accurate estimation of first-passage failure probabilities of dynamical systems using only a small number of training time histories.
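
For readers unfamiliar with the autoregressive setting that F2NARX builds on, the following is a minimal sketch of a conventional autoregressive model with exogenous input, not the F2NARX method itself. It uses the linear special case (ARX) fitted by least squares; the toy system, its coefficients, and all function names are assumptions made for illustration only.

```python
import numpy as np

def simulate(x, a1=0.5, a2=-0.2, b0=0.3):
    """Hypothetical 'true' system: y[t] = a1*y[t-1] + a2*y[t-2] + b0*x[t]."""
    y = np.zeros_like(x)
    for t in range(2, len(x)):
        y[t] = a1 * y[t - 1] + a2 * y[t - 2] + b0 * x[t]
    return y

def fit_arx(x, y):
    """Least-squares fit of y[t] on the regressors (y[t-1], y[t-2], x[t])."""
    features = np.column_stack([y[1:-1], y[:-2], x[2:]])
    targets = y[2:]
    coeffs, *_ = np.linalg.lstsq(features, targets, rcond=None)
    return coeffs

def predict_free_run(coeffs, x, y_init):
    """Autoregressive prediction: past predictions are fed back as inputs."""
    y_hat = np.zeros_like(x)
    y_hat[:2] = y_init
    for t in range(2, len(x)):
        y_hat[t] = coeffs @ np.array([y_hat[t - 1], y_hat[t - 2], x[t]])
    return y_hat

rng = np.random.default_rng(0)
x_train = rng.standard_normal(200)          # exogenous excitation (training)
y_train = simulate(x_train)
coeffs = fit_arx(x_train, y_train)

x_test = rng.standard_normal(100)           # unseen excitation
y_test = simulate(x_test)
y_pred = predict_free_run(coeffs, x_test, y_test[:2])
max_error = float(np.max(np.abs(y_pred - y_test)))
```

Because the toy system is noise-free and linear, the fit recovers the coefficients essentially exactly. A nonlinear NARX model would replace the linear map with a nonlinear regressor over the same lagged features.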

Reliability analysis for non-deterministic limit-states using stochastic emulators

Dec 18, 2024

Abstract: Reliability analysis is a sub-field of uncertainty quantification that assesses the probability of a system performing as intended under various uncertainties. Traditionally, this analysis relies on deterministic models, where experiments are repeatable, i.e., they produce consistent outputs for a given set of inputs. However, real-world systems often exhibit stochastic behavior, leading to non-repeatable outcomes. These so-called stochastic simulators produce different outputs each time the model is run, even with fixed inputs. This paper formally introduces reliability analysis for stochastic models and addresses it by using suitable surrogate models to lower its typically high computational cost. Specifically, we focus on the recently introduced generalized lambda models and stochastic polynomial chaos expansions. These emulators are designed to learn the inherent randomness of the simulator's response and enable efficient uncertainty quantification at a much lower cost than traditional Monte Carlo simulation. We validate our methodology through three case studies. First, using an analytical function with a closed-form solution, we demonstrate that the emulators converge to the correct solution. Second, we present results obtained from the surrogates using a toy example of a simply supported beam. Finally, we apply the emulators to perform reliability analysis on a realistic wind turbine case study, where only a dataset of simulation results is available.
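
As a rough illustration of what a failure probability means when the limit-state itself is random, the sketch below estimates it by plain Monte Carlo for a toy stochastic simulator and compares the estimate with a closed-form value. It assumes the failure probability is taken jointly over the input uncertainty and the simulator's intrinsic randomness, which is one natural definition rather than a statement of the paper's formulation. The limit-state function and every name here are hypothetical.

```python
import math
import numpy as np

def stochastic_limit_state(x, rng):
    """Hypothetical stochastic simulator: g(x, omega) = 3 - x - 0.5 * eps.

    eps is drawn afresh on every call, so repeated runs at the same x
    return different values (non-repeatable output).
    """
    eps = rng.standard_normal(x.shape)
    return 3.0 - x - 0.5 * eps

rng = np.random.default_rng(42)
n_samples = 1_000_000
x = rng.standard_normal(n_samples)       # uncertain input, X ~ N(0, 1)
g = stochastic_limit_state(x, rng)       # one simulator run per input sample
pf_mc = float(np.mean(g <= 0.0))         # failure is the event g <= 0

# Closed form for this toy case: x + 0.5 * eps ~ N(0, 1 + 0.25),
# so P(g <= 0) = 1 - Phi(3 / sqrt(1.25)).
beta = 3.0 / math.sqrt(1.0 + 0.5**2)
pf_exact = 0.5 * math.erfc(beta / math.sqrt(2.0))
```

A stochastic emulator would stand in for `stochastic_limit_state` so that the one million runs no longer require the expensive simulator.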

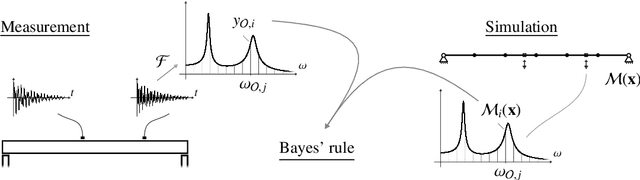

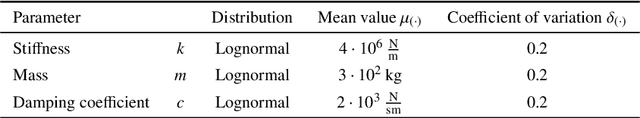

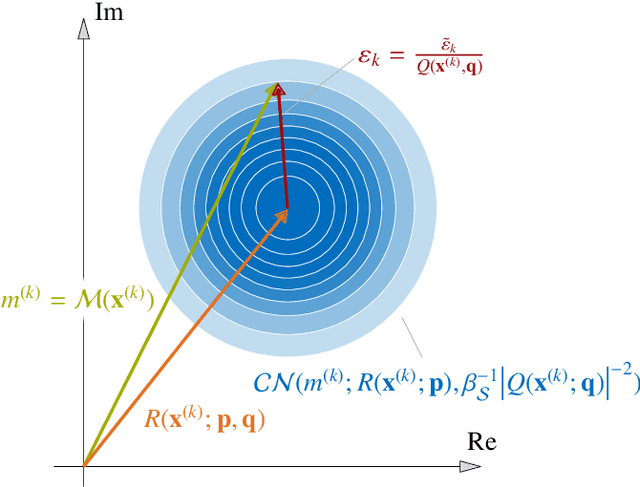

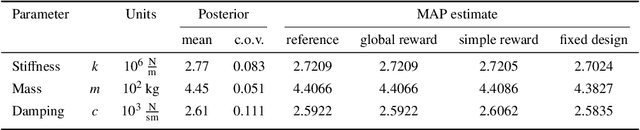

Maximum a Posteriori Estimation for Linear Structural Dynamics Models Using Bayesian Optimization with Rational Polynomial Chaos Expansions

Aug 07, 2024

Abstract: Bayesian analysis enables combining prior knowledge with measurement data to learn model parameters. Commonly, one resorts to computing the maximum a posteriori (MAP) estimate when only a point estimate of the parameters is of interest. We apply MAP estimation in the context of structural dynamic models, where the system response can be described by the frequency response function. To alleviate high computational demands from repeated expensive model calls, we utilize a rational polynomial chaos expansion (RPCE) surrogate model that expresses the system frequency response as a ratio of two polynomials with complex coefficients. We propose an extension to an existing sparse Bayesian learning approach for RPCE based on Laplace's approximation for the posterior distribution of the denominator coefficients. Furthermore, we introduce a Bayesian optimization approach, which allows adaptive enrichment of the experimental design throughout the optimization process of MAP estimation. Thereby, we utilize the expected improvement acquisition function as a means to identify sample points in the input space that are possibly associated with large objective function values. The acquisition function is estimated through Monte Carlo sampling based on the posterior distribution of the expansion coefficients identified in the sparse Bayesian learning process. By combining the sparsity-inducing learning procedure with the sequential experimental design, we effectively reduce the number of model evaluations in the MAP estimation problem. We demonstrate the applicability of the presented methods on the parameter updating problem of an algebraic two-degree-of-freedom system and the finite element model of a cross-laminated timber plate.
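
To make the notion of a MAP estimate concrete, here is a minimal sketch for a scalar parameter with a Gaussian prior and Gaussian measurement noise, where the maximizer of the posterior is known in closed form and can be checked against a brute-force grid search. This is a textbook conjugate case chosen for illustration; it involves neither RPCE surrogates nor Bayesian optimization, and the data, prior, and function names are all assumptions.

```python
import numpy as np

def neg_log_posterior(theta_grid, data, noise_std, prior_mean, prior_std):
    """Negative log-posterior (up to a constant) evaluated on a grid of theta."""
    misfit = np.sum((data[None, :] - theta_grid[:, None]) ** 2, axis=1)
    prior = (theta_grid - prior_mean) ** 2
    return misfit / (2.0 * noise_std**2) + prior / (2.0 * prior_std**2)

def map_closed_form(data, noise_std, prior_mean, prior_std):
    """Precision-weighted average of the prior mean and the data."""
    precision = len(data) / noise_std**2 + 1.0 / prior_std**2
    weighted = np.sum(data) / noise_std**2 + prior_mean / prior_std**2
    return float(weighted / precision)

rng = np.random.default_rng(1)
noise_std, prior_mean, prior_std = 0.5, 0.0, 1.0
data = 2.0 + noise_std * rng.standard_normal(20)   # noisy measurements of theta

theta_map = map_closed_form(data, noise_std, prior_mean, prior_std)

# Brute-force check: minimize the negative log-posterior on a fine grid.
grid = np.linspace(-1.0, 4.0, 50001)
objective = neg_log_posterior(grid, data, noise_std, prior_mean, prior_std)
theta_grid_search = float(grid[np.argmin(objective)])
```

When each evaluation of the objective requires an expensive structural model, a grid search like this becomes infeasible, which is the motivation for surrogate-assisted optimization.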

A comprehensive framework for multi-fidelity surrogate modeling with noisy data: a gray-box perspective

Jan 12, 2024

Abstract: Computer simulations (a.k.a. white-box models) are more indispensable than ever to model intricate engineering systems. However, computational models alone often fail to fully capture the complexities of reality. When physical experiments are accessible though, it is of interest to enhance the incomplete information offered by computational models. Gray-box modeling is concerned with the problem of merging information from data-driven (a.k.a. black-box) models and white-box (i.e., physics-based) models. In this paper, we propose to perform this task by using multi-fidelity surrogate models (MFSMs). An MFSM integrates information from models with varying computational fidelity into a new surrogate model. The multi-fidelity surrogate modeling framework we propose handles noise-contaminated data and is able to estimate the underlying noise-free high-fidelity function. Our methodology emphasizes delivering precise estimates of the uncertainty in its predictions in the form of confidence and prediction intervals, by quantitatively incorporating the different types of uncertainty that affect the problem, arising from measurement noise and from lack of knowledge due to the limited experimental design budget on both the high- and low-fidelity models. Applied to gray-box modeling, our MFSM framework treats noisy experimental data as the high-fidelity and the white-box computational models as their low-fidelity counterparts. The effectiveness of our methodology is showcased through synthetic examples and a wind turbine application.

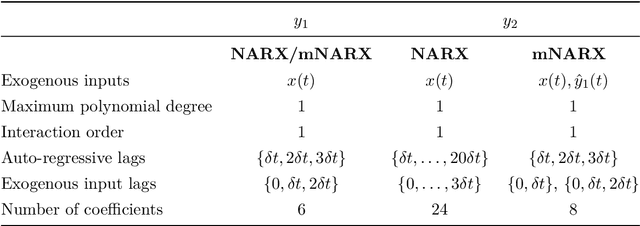

Emulating the dynamics of complex systems using autoregressive models on manifolds (mNARX)

Jun 28, 2023

Abstract: In this study, we propose a novel surrogate modelling approach to efficiently and accurately approximate the response of complex dynamical systems driven by time-varying exogenous excitations over extended time periods. Our approach, which we name *manifold nonlinear autoregressive modelling with exogenous input* (mNARX), involves constructing a problem-specific exogenous input manifold that is optimal for constructing autoregressive surrogates. The manifold, which forms the core of mNARX, is constructed incrementally by incorporating the physics of the system, as well as prior expert and domain knowledge. Because mNARX decomposes the full problem into a series of smaller sub-problems, each with a lower complexity than the original, it scales well with the complexity of the problem, both in terms of training and evaluation costs of the final surrogate. Furthermore, mNARX synergizes well with traditional dimensionality reduction techniques, making it highly suitable for modelling dynamical systems with high-dimensional exogenous inputs, a class of problems that is typically challenging to solve. Since domain knowledge is particularly abundant in physical systems, such as those found in engineering applications, mNARX is well suited for these applications. We demonstrate that mNARX outperforms traditional autoregressive surrogates in predicting the response of a classical coupled spring-mass system excited by a one-dimensional random excitation. Additionally, we show that mNARX is well suited for emulating very high-dimensional time- and state-dependent systems, even when affected by active controllers, by surrogating the dynamics of a realistic aero-servo-elastic onshore wind turbine simulator. In general, our results demonstrate that mNARX offers promising prospects for modelling complex dynamical systems, in terms of accuracy and efficiency.

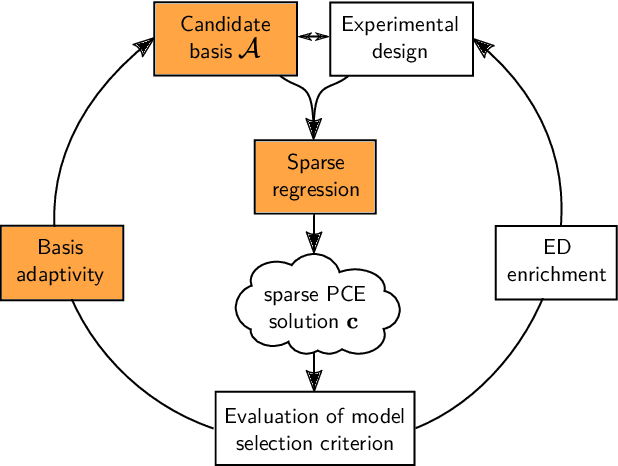

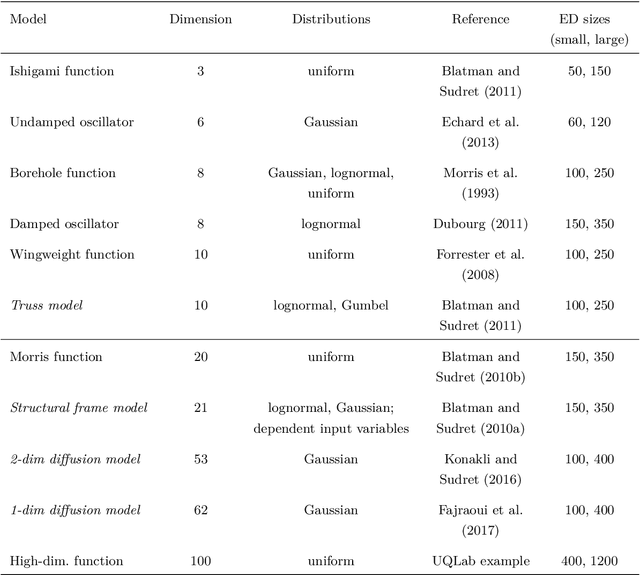

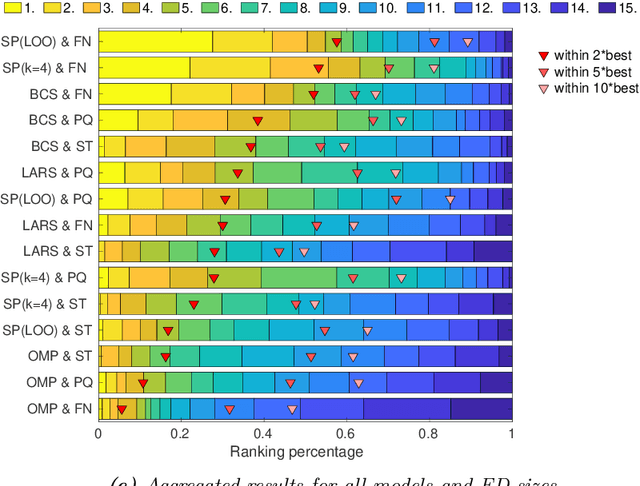

Sparse Polynomial Chaos Expansions: Solvers, Basis Adaptivity and Meta-selection

Sep 10, 2020

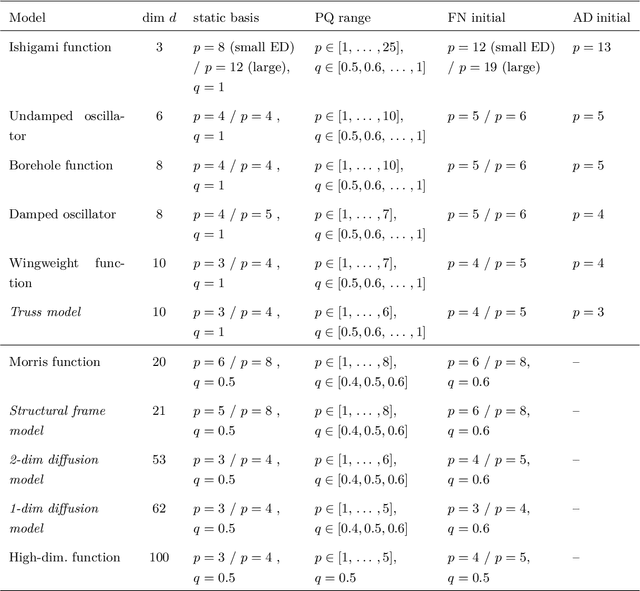

Abstract: Sparse polynomial chaos expansions (PCEs) are an efficient and widely used surrogate modeling method in uncertainty quantification. Among the many contributions aiming at computing an accurate sparse PCE while using as few model evaluations as possible, basis adaptivity is a particularly interesting approach. It consists of starting from an expansion with a small number of polynomial terms, and then parsimoniously adding and removing basis functions in an iterative fashion. We describe several state-of-the-art approaches from the recent literature and extensively benchmark them on a large set of computational models representative of a wide range of engineering problems. We investigate the synergies between sparse regression solvers and basis adaptivity schemes, and provide recommendations on which of them are most promising for specific classes of problems. Furthermore, we explore the performance of a novel cross-validation-based solver and basis adaptivity selection scheme, which consistently provides close-to-optimal results.
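
The idea of a sparse PCE can be sketched with a generic greedy solver. The example below fits a one-dimensional expansion in probabilists' Hermite polynomials using orthogonal matching pursuit written directly in NumPy. The toy model, the truncation degree, and the function name `omp_sparse_pce` are assumptions for illustration; the sketch is not meant to reproduce any specific solver or basis-adaptivity scheme from the benchmark.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermevander

def omp_sparse_pce(psi, y, tol=1e-10):
    """Greedy sparse regression: add one basis term at a time, then refit."""
    n_terms = psi.shape[1]
    norms = np.linalg.norm(psi, axis=0)
    active = []
    coeffs = np.zeros(n_terms)
    residual = y.copy()
    while len(active) < n_terms and np.linalg.norm(residual) > tol * np.linalg.norm(y):
        # Pick the (normalized) basis column most correlated with the residual.
        scores = np.abs(psi.T @ residual) / norms
        if active:
            scores[active] = -np.inf
        active.append(int(np.argmax(scores)))
        # Refit all active coefficients by ordinary least squares.
        sol, *_ = np.linalg.lstsq(psi[:, active], y, rcond=None)
        coeffs[:] = 0.0
        coeffs[active] = sol
        residual = y - psi @ coeffs
    return coeffs, sorted(active)

rng = np.random.default_rng(0)
x = rng.standard_normal(500)                 # input X ~ N(0, 1)
y = 1.0 + 2.0 * (x**2 - 1.0)                 # toy model: 1*He_0 + 2*He_2

psi = hermevander(x, 4)                      # columns He_0(x), ..., He_4(x)
coeffs, active = omp_sparse_pce(psi, y)
```

Since the toy model lies exactly in the span of two basis functions and the data are noise-free, the loop stops once the residual vanishes and the remaining coefficients stay at (numerically) zero.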
