Bruce Martin

Marine Mammal Species Classification using Convolutional Neural Networks and a Novel Acoustic Representation

Jul 30, 2019

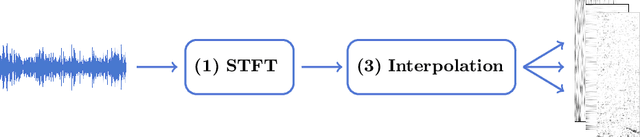

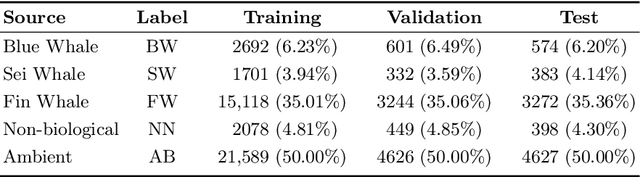

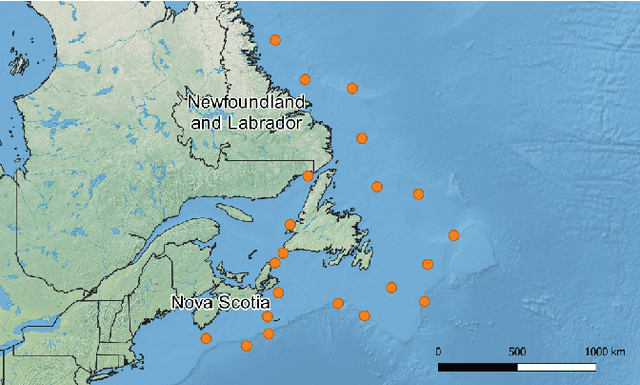

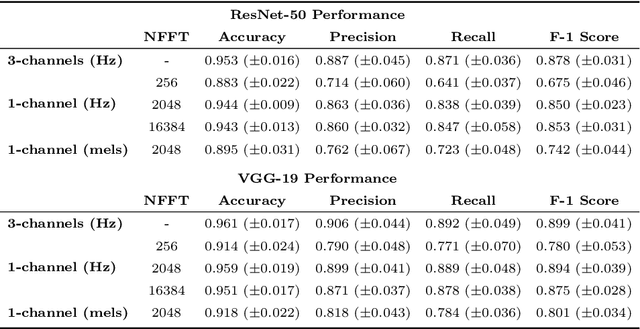

Abstract: Research into automated systems for detecting and classifying marine mammals in acoustic recordings is expanding internationally because large collections of data must be analyzed for conservation purposes. In this work, we present a Convolutional Neural Network that classifies the vocalizations of three species of whales, non-biological sources of noise, and a fifth class pertaining to ambient noise. In this way, the classifier can detect both the presence and the absence of whale vocalizations in an acoustic recording. Through transfer learning, we show that the classifier learns high-level representations and can generalize to additional species. We also propose a novel representation of acoustic signals that builds upon the commonly used spectrogram representation by interpolating and stacking multiple spectrograms produced using different Short-time Fourier Transform (STFT) parameters. The proposed representation is particularly effective for marine mammal species classification, where the acoustic events to be classified are sensitive to the parameters of the STFT.
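The stacked-spectrogram idea described in the abstract can be illustrated with a minimal NumPy sketch. The abstract does not state the window lengths, hop sizes, interpolation method, or output size, so every value below (`n_ffts`, the 50% hop, linear interpolation, the 128 x 128 grid, the log scaling) is an assumption chosen for illustration, and the function names are hypothetical rather than taken from the paper.

```python
import numpy as np


def stft_magnitude(signal, n_fft, hop):
    """Magnitude spectrogram, shape (n_fft // 2 + 1, n_frames), Hann window."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    return np.abs(np.fft.rfft(frames, axis=1)).T


def resize_linear(spec, out_shape):
    """Resample a 2-D array onto out_shape with separable linear interpolation."""
    n_freq, n_time = spec.shape
    out_f, out_t = out_shape
    f_src, f_dst = np.linspace(0, 1, n_freq), np.linspace(0, 1, out_f)
    t_src, t_dst = np.linspace(0, 1, n_time), np.linspace(0, 1, out_t)
    # Interpolate along frequency first, then along time.
    tmp = np.stack(
        [np.interp(f_dst, f_src, spec[:, j]) for j in range(n_time)], axis=1
    )
    return np.stack(
        [np.interp(t_dst, t_src, tmp[i, :]) for i in range(out_f)], axis=0
    )


def stacked_spectrogram(signal, n_ffts=(256, 1024, 2048), out_shape=(128, 128)):
    """Stack spectrograms from several STFT window lengths as image channels.

    The window lengths and output size are illustrative assumptions.
    """
    channels = []
    for n_fft in n_ffts:
        spec = stft_magnitude(signal, n_fft, hop=n_fft // 2)
        # Log compression keeps the dynamic range manageable for a CNN input.
        channels.append(resize_linear(np.log1p(spec), out_shape))
    return np.stack(channels, axis=0)  # shape: (len(n_ffts), out_f, out_t)


if __name__ == "__main__":
    sr = 8000
    t = np.arange(sr) / sr
    tone = np.sin(2 * np.pi * 1000 * t)  # 1 s synthetic 1 kHz tone
    print(stacked_spectrogram(tone).shape)
```

Each channel trades time resolution against frequency resolution differently, which is the sensitivity to STFT parameters that the abstract points to. Note that plain linear interpolation can skip narrow spectral peaks when a long-window spectrogram is shrunk heavily, so a real pipeline would likely need a more careful resampling step.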

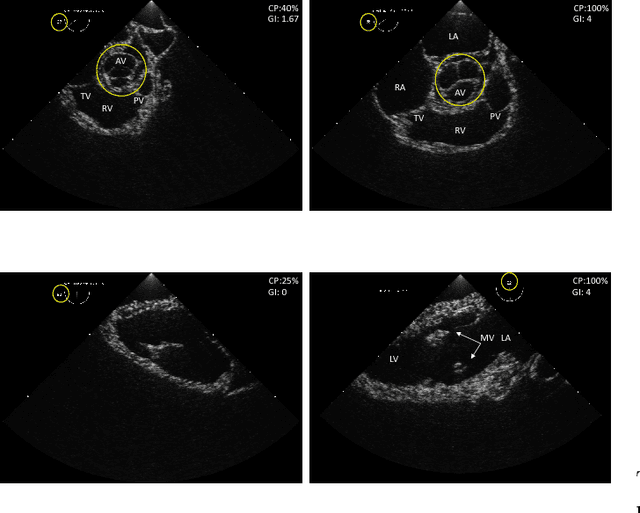

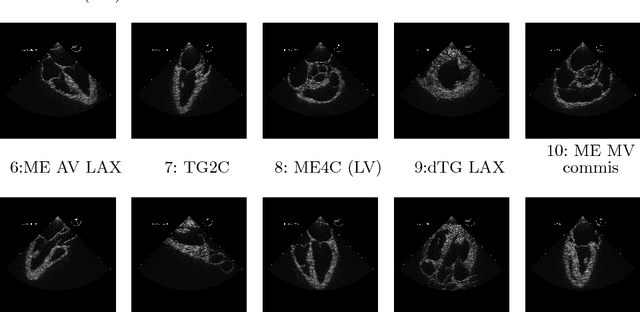

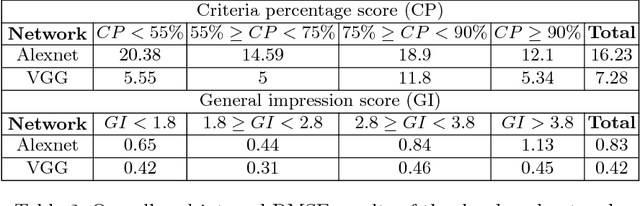

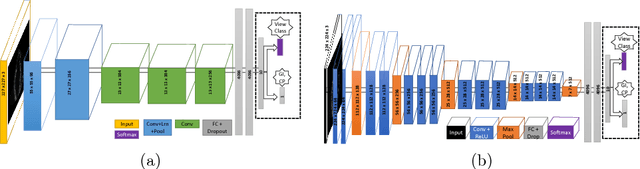

Automated Performance Assessment in Transoesophageal Echocardiography with Convolutional Neural Networks

Jun 13, 2018

Abstract: Transoesophageal echocardiography (TEE) is a valuable diagnostic and monitoring imaging modality. Proper image acquisition is essential for diagnosis, yet current assessment techniques are solely based on manual expert review. This paper presents a supervised deep learning framework for automatically evaluating and grading the quality of TEE images. To obtain the necessary dataset, 38 participants of varied experience performed TEE exams with a high-fidelity virtual reality (VR) platform. Two Convolutional Neural Network (CNN) architectures, AlexNet and VGG, structured to perform regression, were fine-tuned and validated on manually graded images from three evaluators. Two different scoring strategies, a criteria-based percentage and an overall general impression, were used. The developed CNN models estimate the average score with a root mean square accuracy ranging from 84% to 93%, indicating the ability to replicate expert valuation. Proposed strategies for automated TEE assessment can have a significant impact on the training process of new TEE operators, providing direct feedback and facilitating the development of the necessary dexterous skills.
