Brian Ruttenberg

Causal Learning and Explanation of Deep Neural Networks via Autoencoded Activations

Feb 02, 2018

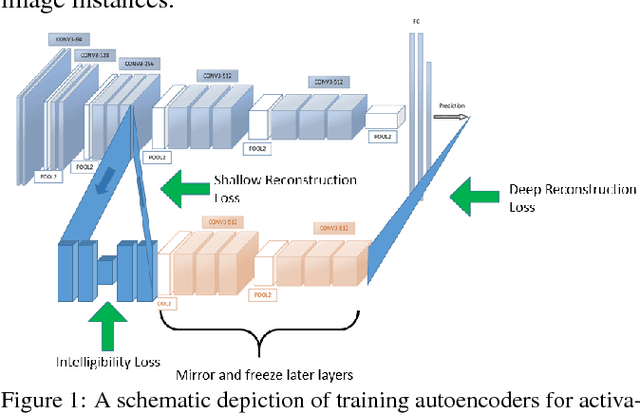

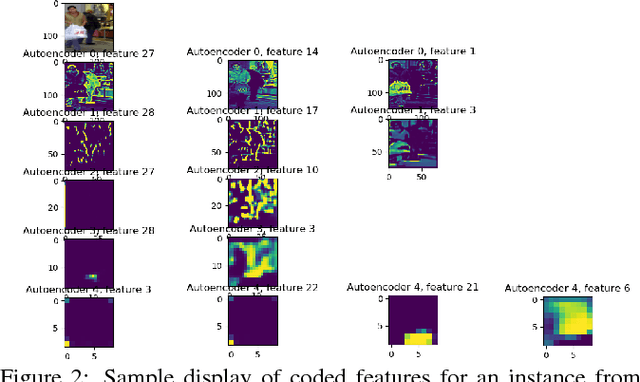

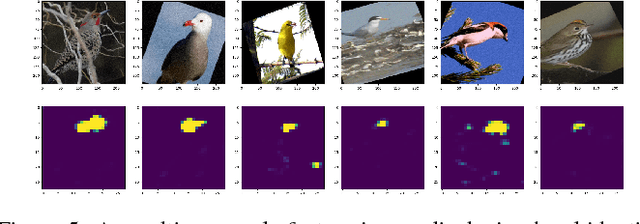

Abstract: Deep neural networks are complex and opaque. As they enter application in a variety of important and safety-critical domains, users seek methods to explain their output predictions. We develop an approach to explaining deep neural networks by constructing causal models on salient concepts contained in a CNN. We develop methods to extract salient concepts throughout a target network by using autoencoders trained to extract human-understandable representations of network activations. We then build a Bayesian causal model using these extracted concepts as variables in order to explain image classification. Finally, we use this causal model to identify and visualize features with significant causal influence on final classification.

Artificial Intelligence Based Malware Analysis

Apr 27, 2017

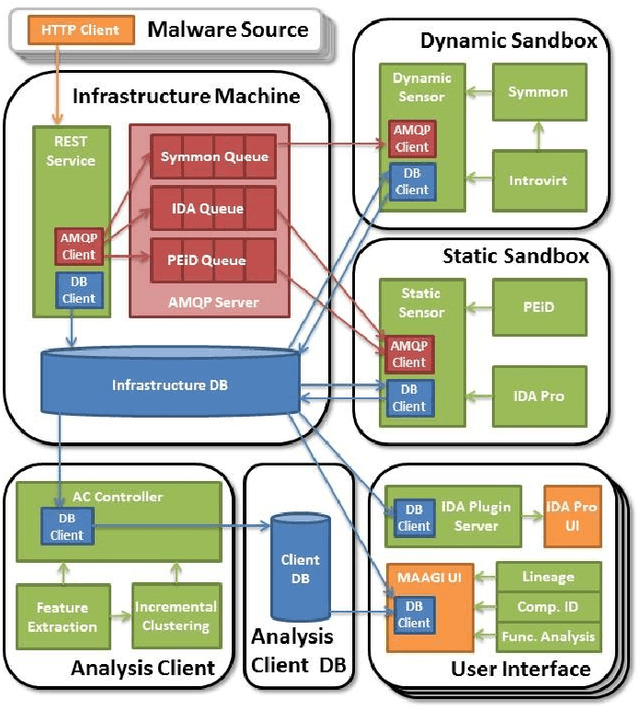

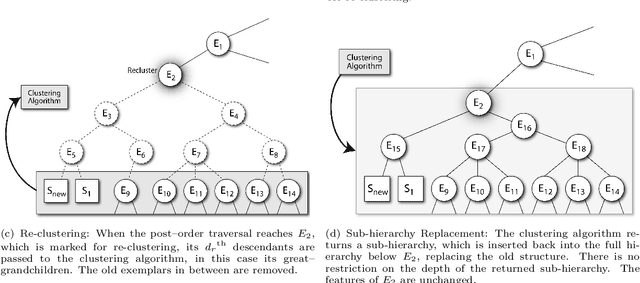

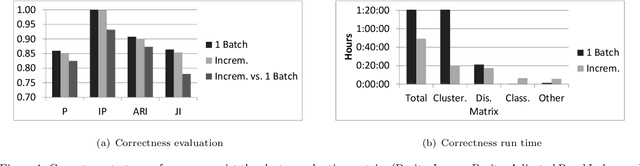

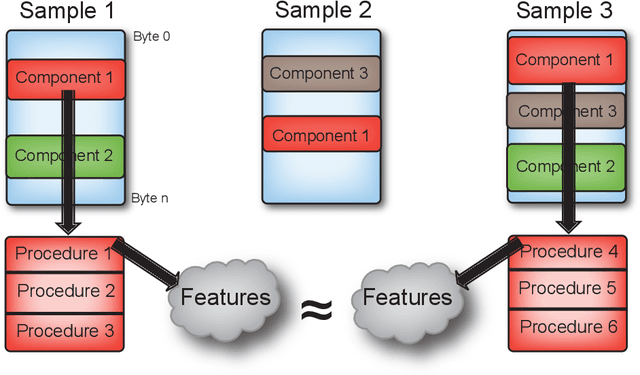

Abstract: Artificial intelligence methods have often been applied to perform specific functions or tasks in the cyber-defense realm. However, as adversary methods become more complex and difficult to divine, piecemeal efforts to understand cyber-attacks, and malware-based attacks in particular, are not providing sufficient means for malware analysts to understand the past, present and future characteristics of malware. In this paper, we present the Malware Analysis and Attribution using Genetic Information (MAAGI) system. The underlying idea behind the MAAGI system is that there are strong similarities between malware behavior and biological organism behavior, and applying biologically inspired methods to corpora of malware can help analysts better understand the ecosystem of malware attacks. Due to the sophistication of the malware and the analysis, the MAAGI system relies heavily on artificial intelligence techniques to provide this capability. It has already yielded promising results over its development life, and will hopefully inspire more integration between the artificial intelligence and cyber-defense communities.

Structured Factored Inference: A Framework for Automated Reasoning in Probabilistic Programming Languages

Jun 10, 2016

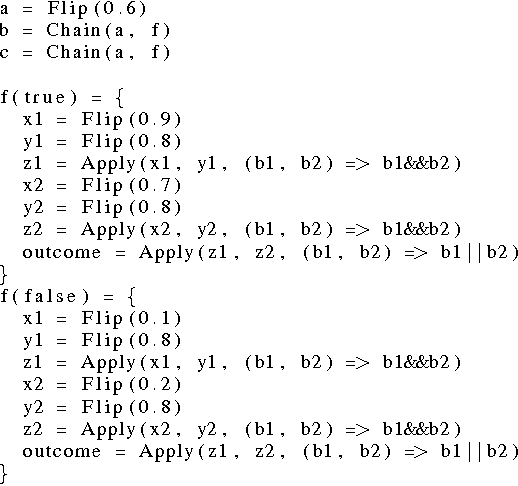

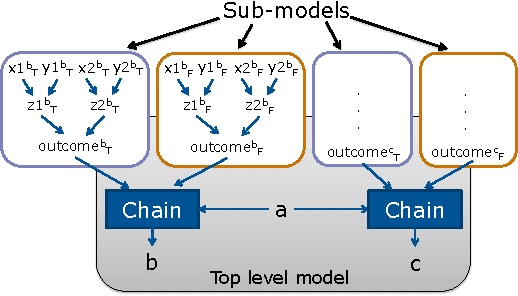

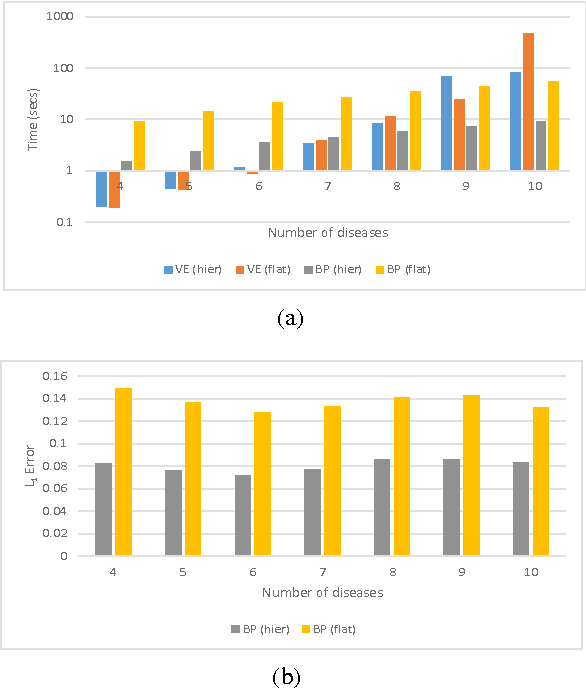

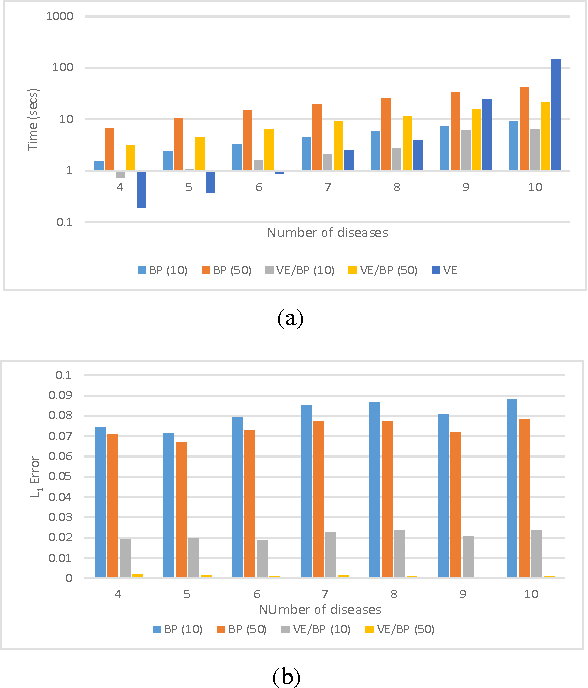

Abstract: Reasoning on large and complex real-world models is a computationally difficult task, yet one that is required for effective use of many AI applications. A plethora of inference algorithms have been developed that work well on specific models or only on parts of general models. Consequently, a system that can intelligently apply these inference algorithms to different parts of a model for fast reasoning is highly desirable. We introduce a new framework called structured factored inference (SFI) that provides the foundation for such a system. Using models encoded in a probabilistic programming language, SFI provides a sound means to decompose a model into sub-models, apply an inference algorithm to each sub-model, and combine the resulting information to answer a query. Our results show that SFI is nearly as accurate as exact inference yet retains the benefits of approximate inference methods.

Lazy Factored Inference for Functional Probabilistic Programming

Sep 11, 2015

Abstract: Probabilistic programming provides the means to represent and reason about complex probabilistic models using programming language constructs. Even simple probabilistic programs can produce models with infinitely many variables. Factored inference algorithms are widely used for probabilistic graphical models, but cannot be applied to these programs because all the variables and factors have to be enumerated. In this paper, we present a new inference framework, lazy factored inference (LFI), that enables factored algorithms to be used for models with infinitely many variables. LFI expands the model to a bounded depth and uses the structure of the program to precisely quantify the effect of the unexpanded part of the model, producing lower and upper bounds on the probability of the query.
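
The bounding idea in this abstract can be illustrated with a toy example. The sketch below is not the LFI algorithm from the paper; it is a minimal illustration that assumes a hypothetical recursive program that stops with probability `p_stop` at each step and otherwise recurses, so the model has unboundedly many variables. The function name `query_bounds` and its parameters are invented for this illustration. Expanding to a fixed depth gives a lower bound from the outcomes already enumerated, and adding the probability mass of the unexpanded part gives an upper bound.

```python
def query_bounds(p_stop, depth, predicate):
    """Bound P(predicate(N)) where N is the step at which a recursive
    coin-flipping program stops (hypothetical toy model, not LFI itself).

    The program stops at step n with probability (1 - p_stop)**n * p_stop.
    Only the first `depth` steps are expanded.
    """
    lower = 0.0
    remaining = 1.0  # probability mass of the part not yet expanded
    for n in range(depth):
        mass = remaining * p_stop  # probability of stopping exactly at step n
        if predicate(n):
            lower += mass
        remaining *= 1.0 - p_stop  # mass that continues past step n
    # In the worst case, all unexpanded mass satisfies the query.
    return lower, lower + remaining


# Query: does the program stop at an even step?
lo, hi = query_bounds(p_stop=0.5, depth=10, predicate=lambda n: n % 2 == 0)
print(lo, hi)
```

Increasing `depth` shrinks the gap between the two bounds, since the gap equals the mass of the unexpanded part, `(1 - p_stop) ** depth`.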
