Binghao Zhang

OpenKBP-Opt: An international and reproducible evaluation of 76 knowledge-based planning pipelines

Feb 16, 2022

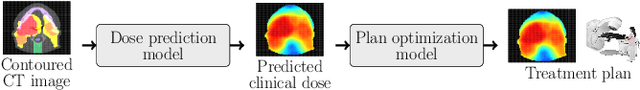

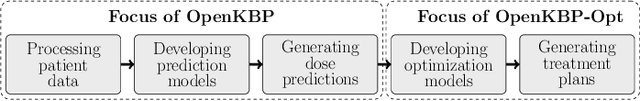

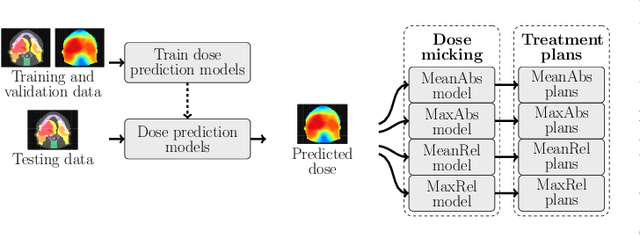

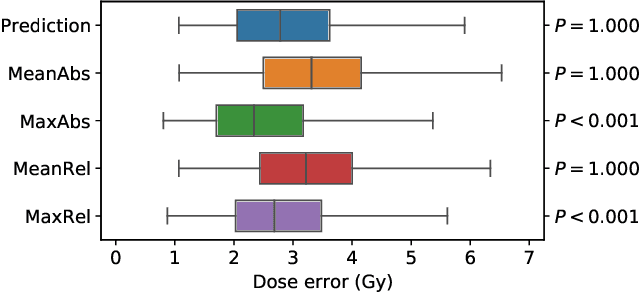

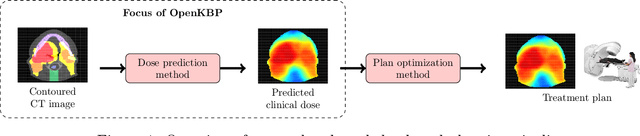

Abstract: We establish an open framework for developing plan optimization models for knowledge-based planning (KBP) in radiotherapy. Our framework includes reference plans for 100 patients with head-and-neck cancer and high-quality dose predictions from 19 KBP models that were developed by different research groups during the OpenKBP Grand Challenge. The dose predictions were input to four optimization models to form 76 unique KBP pipelines that generated 7600 plans. The predictions and plans were compared to the reference plans via: dose score, which is the average mean absolute voxel-by-voxel difference in dose a model achieved; the deviation in dose-volume histogram (DVH) criteria; and the frequency of clinical planning criteria satisfaction. We also performed a theoretical investigation to justify our dose mimicking models. The range in rank order correlation of the dose score between predictions and their KBP pipelines was 0.50 to 0.62, which indicates that the quality of the predictions is generally positively correlated with the quality of the plans. Additionally, compared to the input predictions, the KBP-generated plans performed significantly better (P<0.05; one-sided Wilcoxon test) on 18 of 23 DVH criteria. Similarly, each optimization model generated plans that satisfied a higher percentage of criteria than the reference plans. Lastly, our theoretical investigation demonstrated that the dose mimicking models generated plans that are also optimal for a conventional planning model. This was the largest international effort to date for evaluating the combination of KBP prediction and optimization models. In the interest of reproducibility, our data and code are freely available at https://github.com/ababier/open-kbp-opt.
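To make the two quantities in this abstract concrete, here is a minimal sketch of the dose score (the average over patients of the mean absolute voxel-by-voxel dose difference) and of a rank-order (Spearman) correlation between two lists of scores. The function names and the flat-list representation of a dose distribution are assumptions made for illustration; they are not taken from the open-kbp-opt repository, which may mask or weight voxels differently.

```python
from statistics import mean


def patient_dose_error(predicted, reference):
    """Mean absolute voxel-by-voxel dose difference for one patient."""
    if len(predicted) != len(reference):
        raise ValueError("dose arrays must cover the same voxels")
    return mean(abs(p - r) for p, r in zip(predicted, reference))


def dose_score(predicted_doses, reference_doses):
    """Average of the per-patient errors over all patients (lower is better)."""
    return mean(
        patient_dose_error(p, r) for p, r in zip(predicted_doses, reference_doses)
    )


def ranks(values):
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5
```

Under these assumptions, one would compute `dose_score` once for each prediction model and once for each plan its pipeline produced, then pass the two resulting lists to `spearman` to obtain a correlation of the kind reported above.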

OpenKBP: The open-access knowledge-based planning grand challenge

Nov 28, 2020

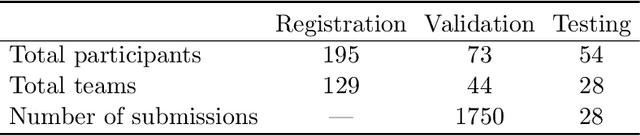

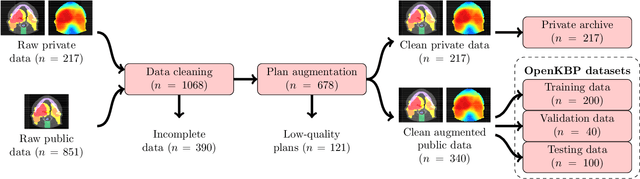

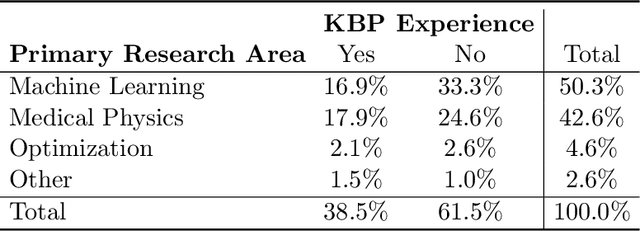

Abstract: The purpose of this work is to advance fair and consistent comparisons of dose prediction methods for knowledge-based planning (KBP) in radiation therapy research. We hosted OpenKBP, a 2020 AAPM Grand Challenge, and challenged participants to develop the best method for predicting the dose of contoured CT images. The models were evaluated according to two separate scores: (1) dose score, which evaluates the full 3D dose distributions, and (2) dose-volume histogram (DVH) score, which evaluates a set of DVH metrics. Participants were given the data of 340 patients who were treated for head-and-neck cancer with radiation therapy. The data was partitioned into training (n=200), validation (n=40), and testing (n=100) datasets. All participants performed training and validation with the corresponding datasets during the validation phase of the Challenge, and we ranked the models in the testing phase based on out-of-sample performance. The Challenge attracted 195 participants from 28 countries, and 73 of those participants formed 44 teams in the validation phase, which received a total of 1750 submissions. The testing phase garnered submissions from 28 teams. On average, over the course of the validation phase, participants improved the dose and DVH scores of their models by a factor of 2.7 and 5.7, respectively. In the testing phase, one model achieved significantly better dose and DVH scores than the runner-up models. Lastly, many of the top-performing teams reported using generalizable techniques (e.g., ensembles) to achieve higher performance than their competition. This is the first competition for knowledge-based planning research, and it helped launch the first platform for comparing KBP prediction methods fairly and consistently. The OpenKBP datasets are publicly available to help benchmark future KBP research, which has also democratized KBP research by making it accessible to everyone.
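The abstract does not say which DVH metrics make up the DVH score, so the following is only an illustrative sketch. It assumes two common metrics per structure, the mean dose and D95 (the dose received by at least 95% of the structure's voxels), and averages the absolute differences between predicted and reference values. The function names, the choice of metrics, and the dictionary layout are all hypothetical and are not the Challenge's official scoring code.

```python
import math


def dose_at_volume(doses, volume_percent):
    """Largest dose level that at least volume_percent of voxels receive."""
    hottest_first = sorted(doses, reverse=True)
    # Number of voxels needed to cover the requested share of the volume.
    count = math.ceil(volume_percent * len(hottest_first) / 100)
    return hottest_first[max(count, 1) - 1]


def dvh_metrics(doses):
    """Assumed metric set for one structure: mean dose and D95."""
    return {
        "mean": sum(doses) / len(doses),
        "D95": dose_at_volume(doses, 95),
    }


def dvh_score(predicted, reference):
    """Mean absolute metric difference over all structures (lower is better).

    Both arguments map a structure name to a list of voxel doses.
    """
    errors = []
    for name, reference_doses in reference.items():
        ref = dvh_metrics(reference_doses)
        pred = dvh_metrics(predicted[name])
        errors.extend(abs(pred[key] - ref[key]) for key in ref)
    return sum(errors) / len(errors)
```

Unlike the dose score, which compares every voxel, a score of this kind only compares summary statistics per structure, so two dose distributions with different spatial shapes can still receive the same DVH score.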
