Bilgin Kosucu

A Deep Reinforcement Learning Approach for the Meal Delivery Problem

Apr 24, 2021

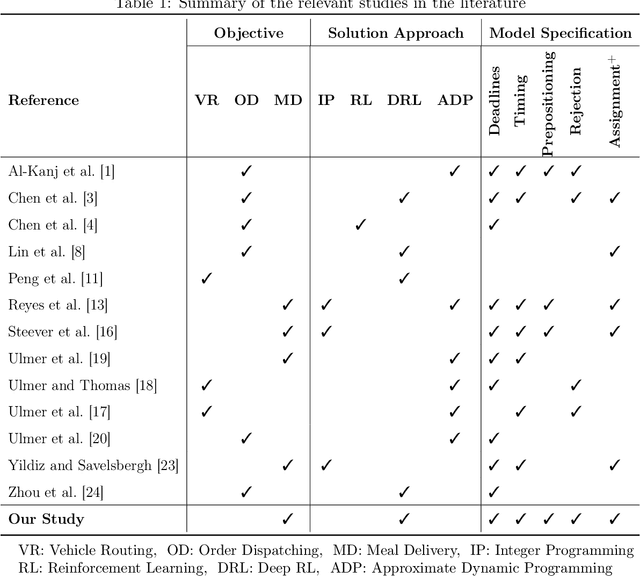

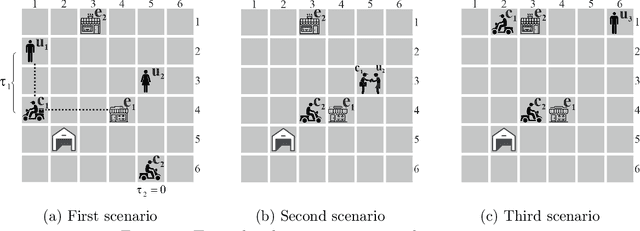

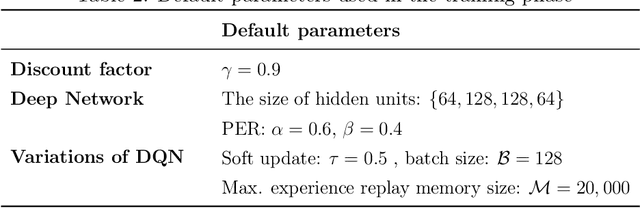

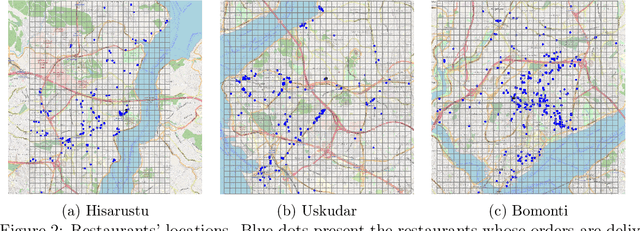

Abstract:We consider a meal delivery service fulfilling dynamic customer requests given a set of couriers over the course of a day. A courier's duty is to pick-up an order from a restaurant and deliver it to a customer. We model this service as a Markov decision process and use deep reinforcement learning as the solution approach. We experiment with the resulting policies on synthetic and real-world datasets and compare those with the baseline policies. We also examine the courier utilization for different numbers of couriers. In our analysis, we specifically focus on the impact of the limited available resources in the meal delivery problem. Furthermore, we investigate the effect of intelligent order rejection and re-positioning of the couriers. Our numerical experiments show that, by incorporating the geographical locations of the restaurants, customers, and the depot, our model significantly improves the overall service quality as characterized by the expected total reward and the delivery times. Our results present valuable insights on both the courier assignment process and the optimal number of couriers for different order frequencies on a given day. The proposed model also shows a robust performance under a variety of scenarios for real-world implementation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge