Bilel Derbel

On the Combined Impact of Population Size and Sub-problem Selection in MOEA/D

Apr 15, 2020

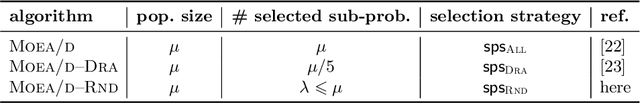

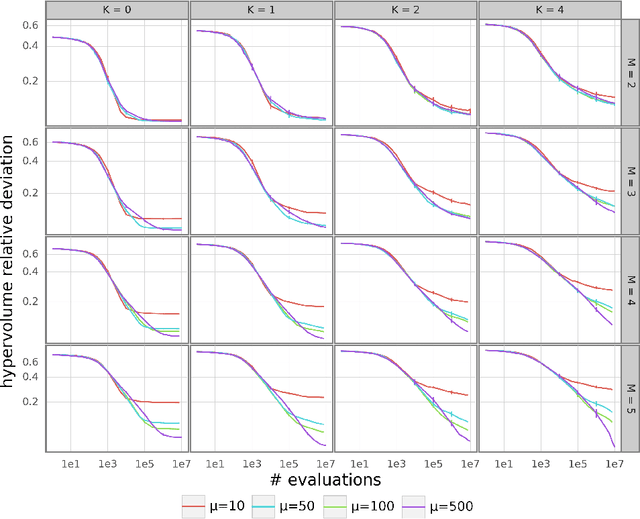

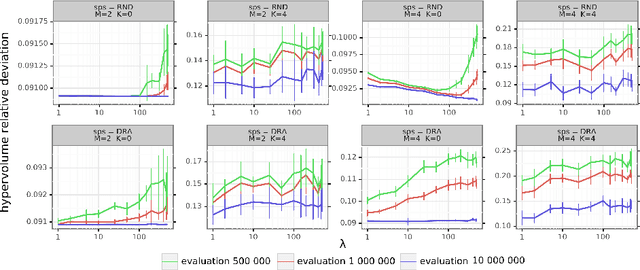

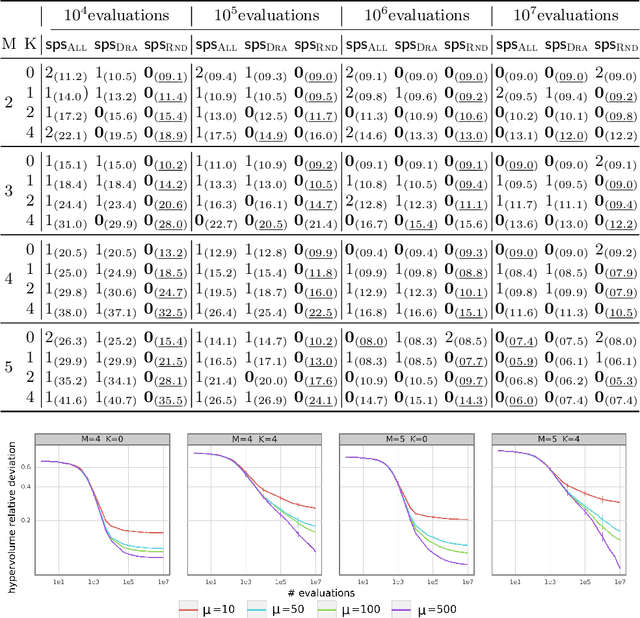

Abstract: This paper aims to understand and improve the working principle of decomposition-based multi-objective evolutionary algorithms. We review the design of the well-established MOEA/D framework to support the smooth integration of different strategies for sub-problem selection, while emphasizing the role of the population size and of the number of offspring created at each generation. By conducting a comprehensive empirical analysis on a wide range of multi- and many-objective combinatorial NK landscapes, we provide new insights into the combined effect of those parameters on the anytime performance of the underlying search process. In particular, we show that even a simple strategy selecting sub-problems at random outperforms existing sophisticated strategies. We also study the sensitivity of such strategies with respect to the ruggedness and the objective space dimension of the target problem.

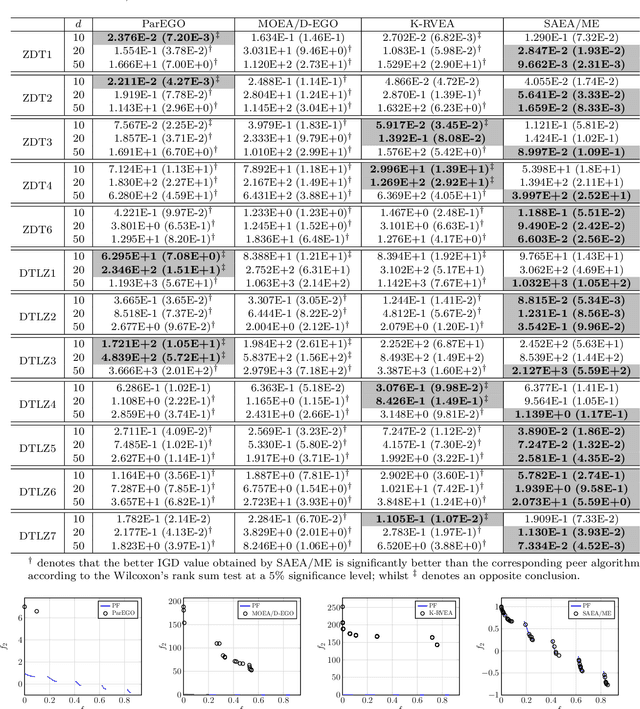

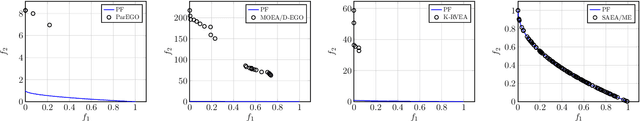

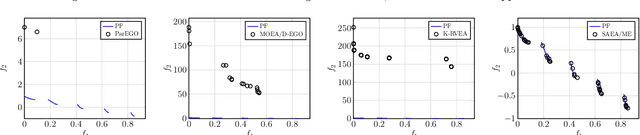

Surrogate Assisted Evolutionary Algorithm for Medium Scale Expensive Multi-Objective Optimisation Problems

Feb 08, 2020

Abstract: Building a surrogate model of an objective function has been shown to be effective in assisting evolutionary algorithms (EAs) to solve real-world complex optimisation problems which involve either computationally expensive numerical simulations or costly physical experiments. However, their effectiveness has mostly been demonstrated on small-scale problems with fewer than 10 decision variables. The scalability of surrogate assisted EAs (SAEAs) has not been well studied yet. In this paper, we propose a Gaussian process surrogate model assisted EA for medium-scale expensive multi-objective optimisation problems with up to 50 decision variables. There are three distinctive features of our proposed SAEA. First, instead of using all decision variables in surrogate model building, we only use the correlated ones to build the surrogate model for each objective function. Second, rather than directly optimising the surrogate objective functions, the original multi-objective optimisation problem is transformed to a new one based on the surrogate models. Last but not least, a subset selection method is developed to choose a couple of promising candidate solutions for actual objective function evaluations and thereby update the training dataset. The effectiveness of our proposed algorithm is validated on benchmark problems with 10, 20, and 50 variables, in comparison with three state-of-the-art SAEAs.

On the Impact of Multiobjective Scalarizing Functions

Sep 19, 2014

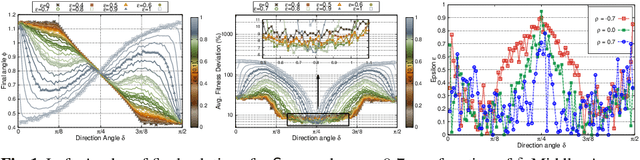

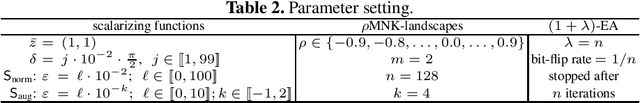

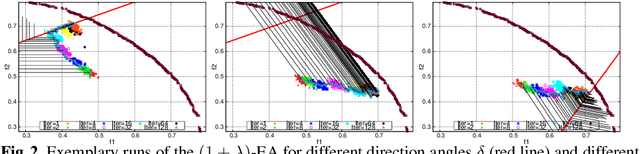

Abstract: Recently, there has been a renewed interest in decomposition-based approaches for evolutionary multiobjective optimization. However, the impact of the choice of the underlying scalarizing function(s) is still far from being well understood. In this paper, we investigate the behavior of different scalarizing functions and their parameters. We first abstract from any specific algorithm and only consider the difficulty of the single scalarized problems in terms of the search ability of a (1+lambda)-EA on biobjective NK-landscapes. Secondly, combining the outcomes of independent single-objective runs allows for more general statements on set-based performance measures. Finally, we investigate the correlation between the opening angle of the scalarizing function's underlying contour lines and the position of the final solution in the objective space. Our analysis is of a fundamental nature and sheds more light on the key characteristics of multiobjective scalarizing functions.
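To make the notion of a scalarizing function concrete, the following minimal sketch shows two commonly used ones, the weighted sum and the weighted Chebyshev function. It is an illustration only and is not taken from the paper: the function names, the minimisation convention, and the reference point `z` are assumptions made for this example.

```python
from typing import Sequence


def weighted_sum(f: Sequence[float], w: Sequence[float]) -> float:
    """Weighted sum of the objective values (smaller is better here)."""
    return sum(wi * fi for wi, fi in zip(w, f))


def chebyshev(f: Sequence[float], w: Sequence[float], z: Sequence[float]) -> float:
    """Weighted Chebyshev distance of f to a reference point z (smaller is better)."""
    return max(wi * abs(fi - zi) for wi, fi, zi in zip(w, f, z))


# Two hypothetical biobjective vectors, equal weights, reference point at the origin.
w = [0.5, 0.5]
z = [0.0, 0.0]
a = [0.0, 4.0]   # extreme trade-off
b = [2.5, 2.5]   # balanced trade-off

# The two functions rank the same pair of points differently.
ws_prefers_a = weighted_sum(a, w) < weighted_sum(b, w)
cheb_prefers_b = chebyshev(b, w, z) < chebyshev(a, w, z)
```

With these assumed values the weighted sum ranks the extreme point `a` first, while the Chebyshev function ranks the balanced point `b` first. This is one simple way to see that the choice of scalarizing function changes which solutions a single-objective search is drawn towards.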

DAMS: Distributed Adaptive Metaheuristic Selection

Jul 18, 2012

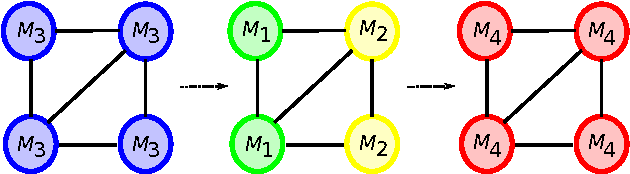

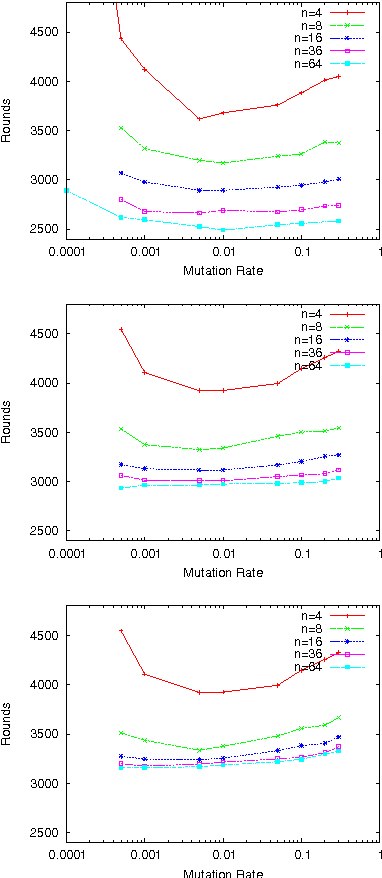

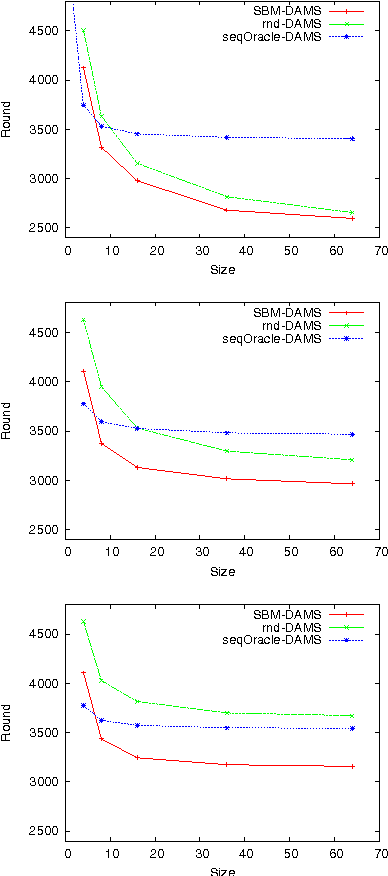

Abstract: We present a distributed generic algorithm called DAMS dedicated to adaptive optimization in distributed environments. Given a set of metaheuristics, the goal of DAMS is to coordinate their local execution on distributed nodes in order to optimize the global performance of the distributed system. DAMS is based on a three-layer architecture allowing nodes to decide distributively what local information to communicate, and what metaheuristic to apply while the optimization process is in progress. The adaptive features of DAMS are first addressed in a very general setting. A specific DAMS called SBM is then described and analyzed from both a parallel and an adaptive point of view. SBM is a simple, yet efficient, adaptive distributed algorithm using an exploitation component allowing nodes to select the metaheuristic with the best locally observed performance, and an exploration component allowing nodes to detect the metaheuristic with the actual best performance. The efficiency of SBM-DAMS is demonstrated through experiments and comparisons with other adaptive strategies (sequential and distributed).
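The exploitation and exploration components described in the abstract can be illustrated with a small sketch of a per-node selection rule. This is a hedged illustration, not the authors' implementation: the function `choose_metaheuristic`, the `observed` dictionary, and the exploration probability `p_explore` are all hypothetical names and assumptions introduced for this example.

```python
import random
from typing import Dict, List


def choose_metaheuristic(
    observed: Dict[str, float],
    metaheuristics: List[str],
    p_explore: float,
    rng: random.Random,
) -> str:
    """Pick the metaheuristic a node applies next.

    observed maps a metaheuristic name to the best performance the node has
    seen locally for it (assumption: larger is better).
    """
    # Exploration: occasionally try a random metaheuristic so that one which
    # is currently under-sampled can still be detected as the best.
    if not observed or rng.random() < p_explore:
        return rng.choice(metaheuristics)
    # Exploitation: otherwise use the one with the best locally observed performance.
    return max(observed, key=observed.get)


rng = random.Random(0)
names = ["hill_climber", "tabu_search", "simulated_annealing"]  # hypothetical set
local_view = {"hill_climber": 0.2, "tabu_search": 0.7}

greedy_choice = choose_metaheuristic(local_view, names, p_explore=0.0, rng=rng)
random_choice = choose_metaheuristic(local_view, names, p_explore=1.0, rng=rng)
```

With `p_explore=0.0` the rule is purely exploitative and returns `tabu_search` for the assumed local view. With `p_explore=1.0` it always returns a random member of `names`. How nodes exchange their observations is left out of this sketch.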
