Beom-Jin Lee

Multi-focus Attention Network for Efficient Deep Reinforcement Learning

Dec 13, 2017

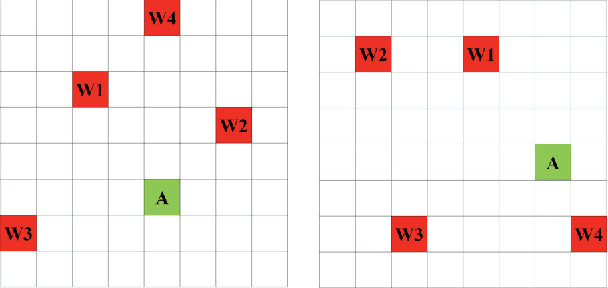

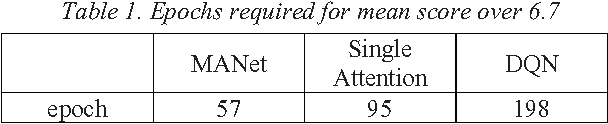

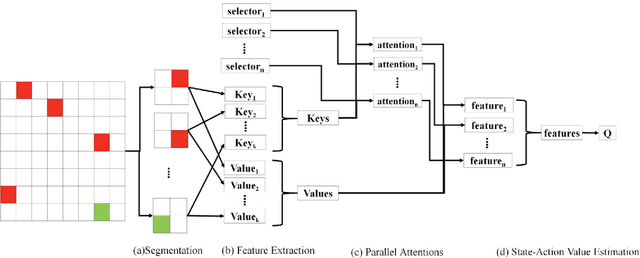

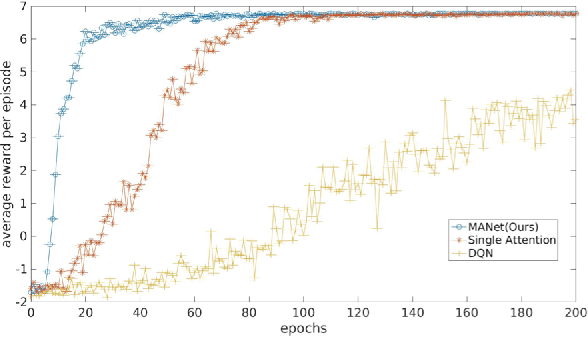

Abstract: Deep reinforcement learning (DRL) has achieved human-level performance on a variety of tasks. However, unlike human perception, current DRL models connect the entire low-level sensory input to the state-action values rather than exploiting the relationships among the entities that constitute the sensory input. Because of this difference, DRL needs a vast number of experience samples to learn. In this paper, we propose a Multi-focus Attention Network (MANet), which mimics the human ability to spatially abstract the low-level sensory input into multiple entities and attend to them simultaneously. The proposed method first divides the low-level input into several segments, which we refer to as partial states. After this segmentation, parallel attention layers attend to the partial states relevant to solving the task. Our model estimates state-action values using these attended partial states. In our experiments, MANet attains the highest scores with significantly fewer experience samples. Additionally, the model shows higher performance than the Deep Q-network and the single-attention model used as benchmarks. Furthermore, we extend our model to an attentive communication model for performing multi-agent cooperative tasks. In multi-agent cooperative task experiments, our model learns 20% faster than the existing state-of-the-art model.
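The pipeline the abstract describes (segment the input into partial states, attend to them with several parallel attention layers, then estimate state-action values from the attended result) can be illustrated with a small NumPy sketch. This is not the authors' architecture: the grid segmentation, the dot-product scoring, the learned per-head query vectors, and every name below (`split_into_partial_states`, `multi_focus_q_values`, `init_params`, `W_key`, `W_value`, `W_out`) are assumptions chosen for illustration only, and the weights are random rather than trained.

```python
import numpy as np

def split_into_partial_states(frame, grid):
    """Cut an (H, W) frame into grid x grid patches, each flattened to a vector.

    Assumption: partial states are non-overlapping spatial patches.
    """
    h, w = frame.shape
    ph, pw = h // grid, w // grid
    patches = [
        frame[r * ph:(r + 1) * ph, c * pw:(c + 1) * pw].reshape(-1)
        for r in range(grid)
        for c in range(grid)
    ]
    return np.stack(patches)  # shape: (grid * grid, ph * pw)

def softmax(x):
    """Numerically stable softmax over the last axis."""
    z = x - x.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def init_params(patch_dim, d=8, heads=3, actions=4, seed=0):
    """Random, untrained weights; all sizes here are hypothetical."""
    rng = np.random.default_rng(seed)
    return {
        "W_key": rng.normal(scale=0.1, size=(patch_dim, d)),
        "W_value": rng.normal(scale=0.1, size=(patch_dim, d)),
        "queries": rng.normal(scale=0.1, size=(heads, d)),  # one query per attention head
        "W_out": rng.normal(scale=0.1, size=(heads * d, actions)),
    }

def multi_focus_q_values(frame, params, grid=4):
    """Return (Q-values, attention weights) for one frame."""
    partial = split_into_partial_states(frame, grid)  # (N, patch_dim)
    keys = partial @ params["W_key"]                  # (N, d)
    values = partial @ params["W_value"]              # (N, d)
    scores = params["queries"] @ keys.T               # (heads, N)
    weights = softmax(scores)                         # each head's focus over the N patches
    attended = weights @ values                       # (heads, d)
    q_values = attended.reshape(-1) @ params["W_out"] # (actions,)
    return q_values, weights

frame = np.arange(256, dtype=float).reshape(16, 16) / 255.0
params = init_params(patch_dim=16)
q_values, weights = multi_focus_q_values(frame, params, grid=4)
print(q_values.shape, weights.shape)
```

Each row of `weights` is one head's distribution over the partial states, so several heads can focus on different regions of the same frame at once, which is the "multi-focus" idea in the abstract.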

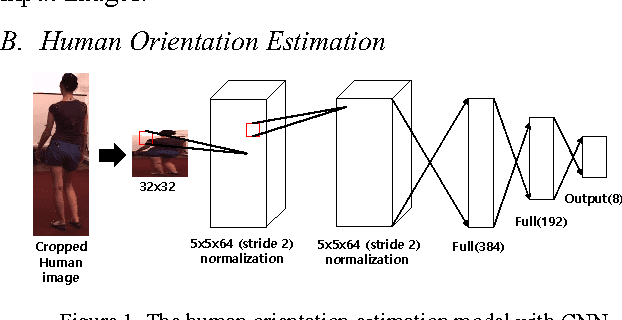

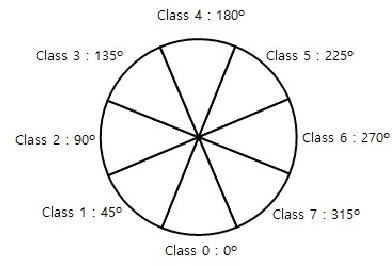

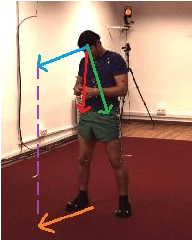

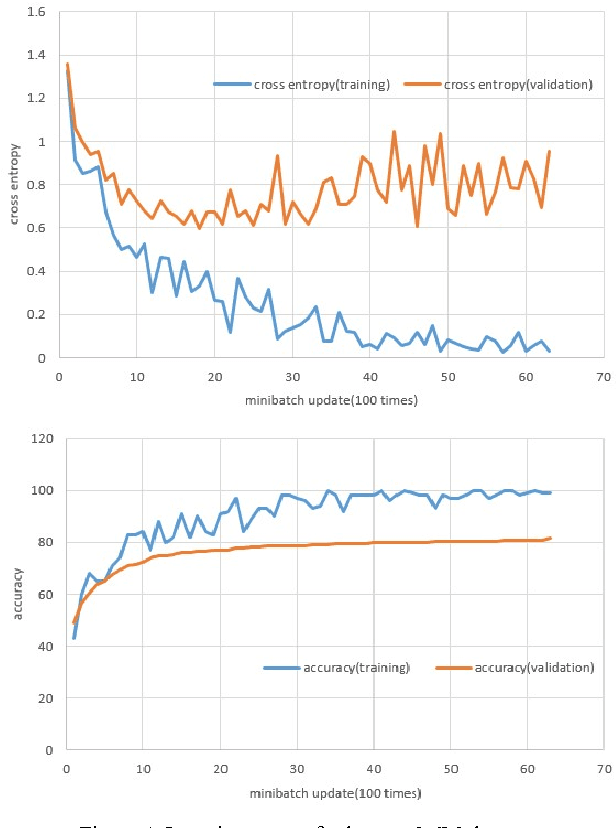

Human Body Orientation Estimation using Convolutional Neural Network

Sep 07, 2016

Abstract: Personal robots are expected to interact with the user by recognizing the user's face. However, in most service robot applications, the user needs to move himself/herself to allow the robot to see him/her face to face. To overcome this limitation, a method for estimating human body orientation is required. Previous studies combined various components, such as feature extractors and classification models, to classify the orientation, which resulted in low performance. For a more robust and accurate approach, we propose a lightweight convolutional neural network, an end-to-end system, for estimating human body orientation. Our body orientation estimation model achieved 81.58% and 94% accuracy on the benchmark dataset and our own dataset, respectively. The proposed method can be used in a wide range of service robot applications that depend on the ability to estimate human body orientation. To show its usefulness in service robot applications, we designed a simple robot application that allows the robot to move towards the user's frontal plane. With this, we demonstrated an improved face detection rate.
