Benjamin V. Tucker

The Mason-Alberta Phonetic Segmenter: A forced alignment system based on deep neural networks and interpolation

Oct 24, 2023

Abstract: Forced alignment systems automatically determine boundaries between segments in speech data, given an orthographic transcription. These tools are commonplace in phonetics to facilitate the use of speech data that would be infeasible to manually transcribe and segment. In the present paper, we describe a new neural network-based forced alignment system, the Mason-Alberta Phonetic Segmenter (MAPS). The MAPS aligner serves as a testbed for two possible improvements we pursue for forced alignment systems. The first is treating the acoustic model in a forced aligner as a tagging task, rather than a classification task, motivated by the common understanding that segments in speech are not truly discrete and commonly overlap. The second is an interpolation technique to allow boundaries more precise than the common 10 ms limit in modern forced alignment systems. We compare configurations of our system to a state-of-the-art system, the Montreal Forced Aligner. The tagging approach did not generally yield improved results over the Montreal Forced Aligner. However, a system with the interpolation technique had a 27.92% increase relative to the Montreal Forced Aligner in the number of boundaries within 10 ms of the target on the test set. We also reflect on the task and training process for acoustic modeling in forced alignment, highlighting how the output targets for these models do not match phoneticians' conception of similarity between phones and that reconciliation of this tension may require rethinking the task and output targets or how speech itself should be segmented.

A learning perspective on the emergence of abstractions: the curious case of phonemes

Dec 17, 2020

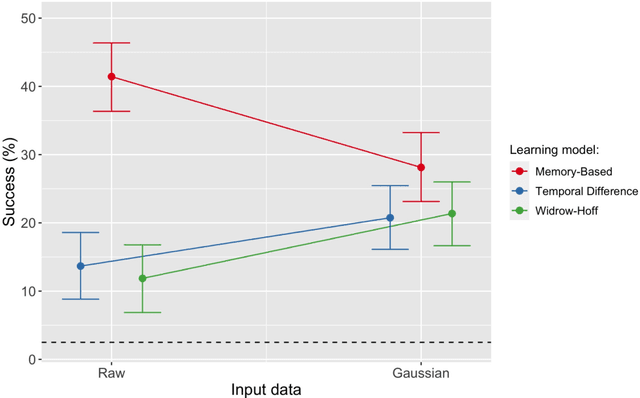

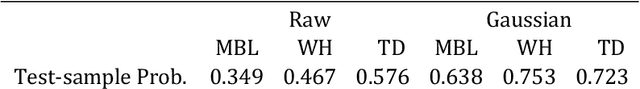

Abstract: In the present paper we use a range of modeling techniques to investigate whether an abstract phone could emerge from exposure to speech sounds. We test two opposing principles regarding the development of language knowledge in linguistically untrained language users: Memory-Based Learning (MBL) and Error-Correction Learning (ECL). A process of generalization underlies the abstractions linguists operate with, and we probed whether MBL and ECL could give rise to a type of language knowledge that resembles linguistic abstractions. Each model was presented with a significant amount of pre-processed speech produced by one speaker. We assessed the consistency or stability of what the models have learned and their ability to give rise to abstract categories. The two types of models fare differently on these tests. We show that ECL learning models can learn abstractions and that at least part of the phone inventory can be reliably identified from the input.
