Benjamin Unger

Nonlinear model reduction for transport-dominated problems

Feb 01, 2026

Abstract:This article surveys nonlinear model reduction methods that remain effective in regimes where linear reduced-space approximations are intrinsically inefficient, such as transport-dominated problems with wave-like phenomena and moving coherent structures, which are commonly associated with the Kolmogorov barrier. The article organizes nonlinear model reduction techniques around three key elements -- nonlinear parametrizations, reduced dynamics, and online solvers -- and categorizes existing approaches into transformation-based methods, online adaptive techniques, and formulations that combine generic nonlinear parametrizations with instantaneous residual minimization.

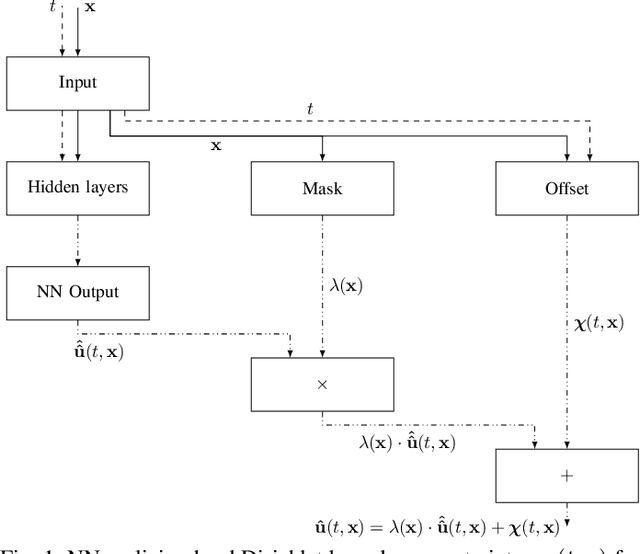

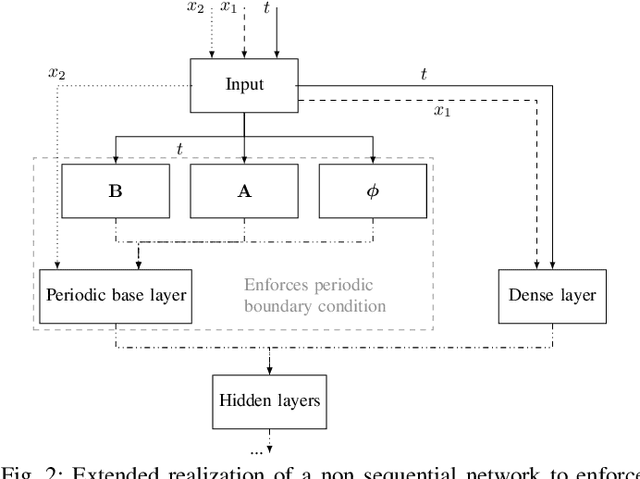

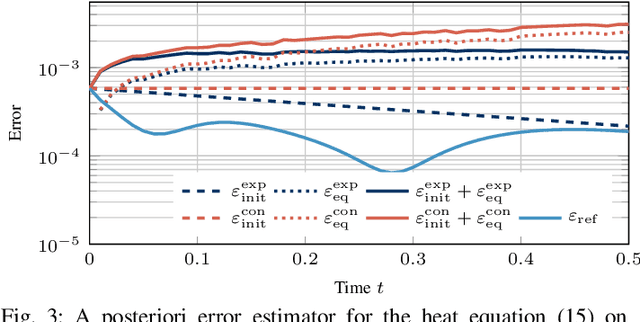

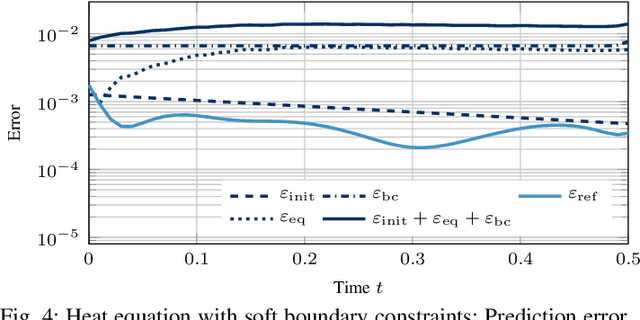

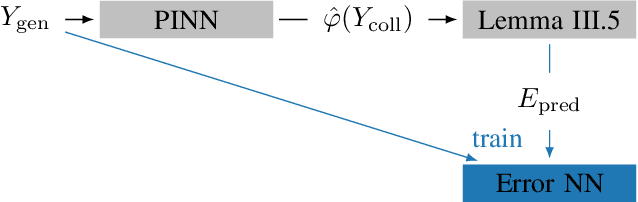

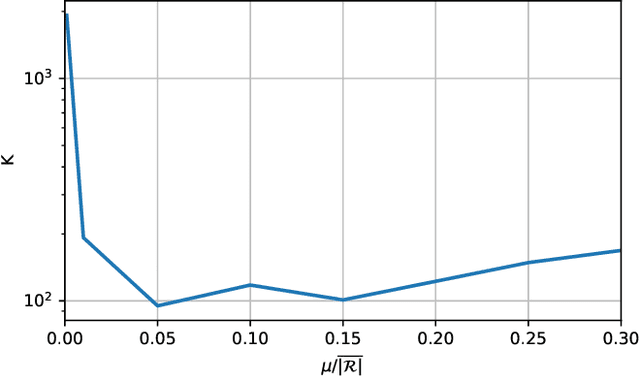

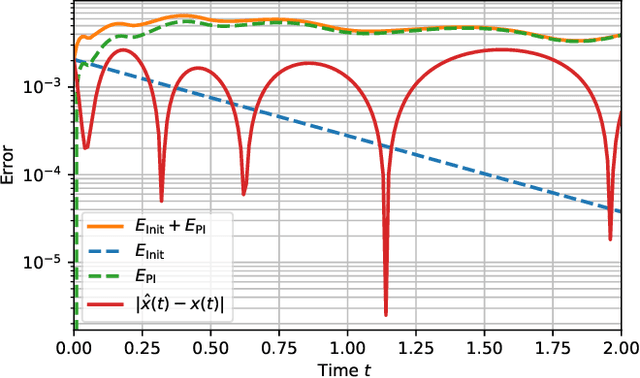

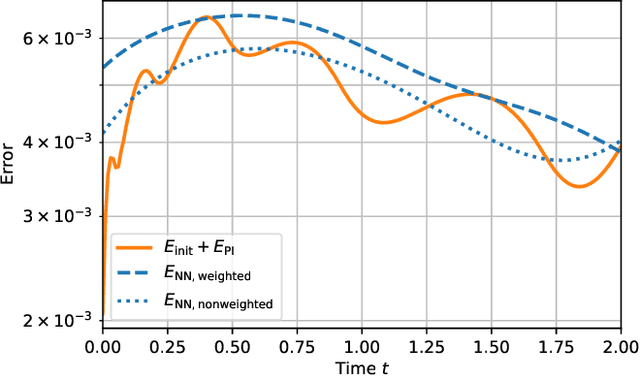

Prediction error certification for PINNs: Theory, computation, and application to Stokes flow

Aug 11, 2025

Abstract:Rigorous error estimation is a fundamental topic in numerical analysis. With the increasing use of physics-informed neural networks (PINNs) for solving partial differential equations, several approaches have been developed to quantify the associated prediction error. In this work, we build upon a semigroup-based framework previously introduced by the authors for estimating the PINN error. While this estimator has so far been limited to academic examples - due to the need to compute quantities related to input-to-state stability - we extend its applicability to a significantly broader class of problems. This is accomplished by modifying the error bound and proposing numerical strategies to approximate the required stability parameters. The extended framework enables the certification of PINN predictions in more realistic scenarios, as demonstrated by a numerical study of Stokes flow around a cylinder.

Leveraging time and parameters for nonlinear model reduction methods

Jan 07, 2025

Abstract:In this paper, we consider model order reduction (MOR) methods for problems with slowly decaying Kolmogorov $n$-widths as, e.g., certain wave-like or transport-dominated problems. To overcome this Kolmogorov barrier within MOR, nonlinear projections are used, which are often realized numerically using autoencoders. These autoencoders generally consist of a nonlinear encoder and a nonlinear decoder and involve costly training of the hyperparameters to obtain a good approximation quality of the reduced system. To facilitate the training process, we show that extending the to-be-reduced system and its corresponding training data makes it possible to replace the nonlinear encoder with a linear encoder without sacrificing accuracy, thus roughly halving the number of hyperparameters to be trained.
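The slow decay mentioned in this abstract can be illustrated with a small numerical experiment. The following is only a sketch under assumed settings, not the method of the paper: the Gaussian pulse, the grid sizes, the tolerance, and the helper `modes_needed` are all hypothetical choices made for illustration. It compares how many singular vectors (a linear reduced basis) are needed to represent a pulse that travels across the domain with the number needed once the same pulse is viewed in a co-moving frame.

```python
import numpy as np

# Hypothetical setup: a Gaussian pulse of fixed shape moving across [0, 1].
n_x, n_t, width = 200, 100, 0.02
x = np.linspace(0.0, 1.0, n_x)
positions = np.linspace(0.2, 0.8, n_t)  # pulse center for each snapshot


def pulse(center):
    """Gaussian pulse centered at `center`, evaluated on the grid x."""
    return np.exp(-((x - center) ** 2) / (2.0 * width**2))


def modes_needed(snapshots, tol=1e-3):
    """Smallest rank r whose truncated SVD has relative Frobenius error <= tol."""
    s = np.linalg.svd(snapshots, compute_uv=False)
    energy = np.cumsum(s**2) / np.sum(s**2)
    rel_err = np.sqrt(np.maximum(1.0 - energy, 0.0))  # rel_err[r-1] belongs to rank r
    return int(np.argmax(rel_err <= tol)) + 1


# Snapshots in the fixed frame: each column shows the pulse at a new position.
transported = np.column_stack([pulse(p) for p in positions])

# Snapshots in a co-moving frame: the shift is removed, so every column
# shows the pulse at the same reference position.
aligned = np.column_stack([pulse(positions[0]) for _ in positions])

n_transported = modes_needed(transported)
n_aligned = modes_needed(aligned)
print(f"modes needed (fixed frame):     {n_transported}")
print(f"modes needed (co-moving frame): {n_aligned}")
```

In the co-moving frame a single mode suffices, while the fixed-frame snapshots require many more modes for the same tolerance. This gap is what motivates replacing the linear reduced space with a nonlinear parametrization.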

Robust Recurrent Neural Network to Identify Ship Motion in Open Water with Performance Guarantees -- Technical Report

Dec 16, 2022

Abstract:Recurrent neural networks are capable of learning the dynamics of an unknown nonlinear system purely from input-output measurements. However, the resulting models do not provide any stability guarantees on the input-output mapping. In this work, we represent a recurrent neural network as a linear time-invariant system with nonlinear disturbances. By introducing constraints on the parameters, we can guarantee finite gain stability and incremental finite gain stability. We apply this identification method to learn the motion of a four-degrees-of-freedom ship that is moving in open water and compare it against other purely learning-based approaches with unconstrained parameters. Our analysis shows that the constrained recurrent neural network has a lower prediction accuracy on the test set, but it achieves comparable results on an out-of-distribution set and respects stability conditions.
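The idea of writing a recurrent neural network as a linear time-invariant system with a nonlinear disturbance can be sketched as follows. This is a minimal illustration under assumptions, not the parametrization used in the report: the matrix names `A`, `B1`, `B2`, `C2`, `D21`, the `tanh` activation, and the function `simulate_lti_with_nonlinearity` are hypothetical, and no stability constraints are imposed here. The sketch checks that a plain RNN update `h_next = tanh(W h + U u)` is one instance of the form `x_next = A x + B1 u + B2 w` with `w = tanh(C2 x + D21 u)`.

```python
import numpy as np


def simulate_lti_with_nonlinearity(A, B1, B2, C2, D21, x0, inputs):
    """Simulate x_{k+1} = A x_k + B1 u_k + B2 w_k with w_k = tanh(C2 x_k + D21 u_k)."""
    x = np.asarray(x0, dtype=float)
    states = [x]
    for u in inputs:
        w = np.tanh(C2 @ x + D21 @ u)   # nonlinear "disturbance" channel
        x = A @ x + B1 @ u + B2 @ w     # linear time-invariant part
        states.append(x)
    return np.array(states)


# Hypothetical sizes and randomly drawn weights, for illustration only.
rng = np.random.default_rng(0)
n_h, n_u, n_steps = 4, 2, 20
W = 0.5 * rng.standard_normal((n_h, n_h))
U = rng.standard_normal((n_h, n_u))
inputs = rng.standard_normal((n_steps, n_u))
h0 = np.zeros(n_h)

# Reference: a plain RNN with update h_{k+1} = tanh(W h_k + U u_k).
h = h0.copy()
rnn_states = [h]
for u in inputs:
    h = np.tanh(W @ h + U @ u)
    rnn_states.append(h)
rnn_states = np.array(rnn_states)

# The same recursion in LTI-plus-nonlinearity form: the linear part only
# passes the nonlinearity output through (A = 0, B1 = 0, B2 = I).
lti_states = simulate_lti_with_nonlinearity(
    A=np.zeros((n_h, n_h)),
    B1=np.zeros((n_h, n_u)),
    B2=np.eye(n_h),
    C2=W,
    D21=U,
    x0=h0,
    inputs=inputs,
)

print("trajectories match:", np.allclose(rnn_states, lti_states))
```

Separating the linear part from the nonlinearity in this way is what makes it possible to state conditions on the matrices alone, which is where parameter constraints of the kind described in the abstract would enter.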

Certified machine learning: Rigorous a posteriori error bounds for PDE defined PINNs

Oct 07, 2022

Abstract:Prediction error quantification in machine learning has been left out of most methodological investigations of neural networks, for both purely data-driven and physics-informed approaches. Beyond statistical investigations and generic results on the approximation capabilities of neural networks, we present a rigorous upper bound on the prediction error of physics-informed neural networks. This bound can be calculated without the knowledge of the true solution and only with a priori available information about the characteristics of the underlying dynamical system governed by a partial differential equation. We apply this a posteriori error bound exemplarily to four problems: the transport equation, the heat equation, the Navier-Stokes equation and the Klein-Gordon equation.

Certified machine learning: A posteriori error estimation for physics-informed neural networks

Mar 31, 2022

Abstract:Physics-informed neural networks (PINNs) are one popular approach to introduce a priori knowledge about physical systems into the learning framework. PINNs are known to be robust for smaller training sets, to generalize better, and to be faster to train. In this paper, we show that using PINNs in comparison with purely data-driven neural networks is not only favorable for training performance but also allows us to extract significant information on the quality of the approximated solution. Assuming that the underlying differential equation for the PINN training is an ordinary differential equation, we derive a rigorous upper bound on the PINN prediction error. This bound is applicable even for input data not included in the training phase and without any prior knowledge about the true solution. Therefore, our a posteriori error estimation is an essential step to certify the PINN. We apply our error estimator exemplarily to two academic toy problems, one of which falls in the category of model-predictive control and thereby shows the practical use of the derived results.

Physics-informed Neural Networks-based Model Predictive Control for Multi-link Manipulators

Sep 22, 2021

Abstract:We discuss nonlinear model predictive control (NMPC) for multi-body dynamics via physics-informed machine learning methods. Physics-informed neural networks (PINNs) are a promising tool to approximate (partial) differential equations. PINNs are not suited for control tasks in their original form since they are not designed to handle variable control actions or variable initial values. We thus present the idea of enhancing PINNs by adding control actions and initial conditions as additional network inputs. The high-dimensional input space is subsequently reduced via a sampling strategy and a zero-hold assumption. This strategy enables the controller design based on a PINN as an approximation of the underlying system dynamics. The additional benefit is that the sensitivities are easily computed via automatic differentiation, thus leading to efficient gradient-based algorithms. Finally, we present our results using our PINN-based MPC to solve a tracking problem for a complex mechanical system, a multi-link manipulator.
