Barbara Caroline Benato

Intestinal Parasites Classification Using Deep Belief Networks

Jan 17, 2021

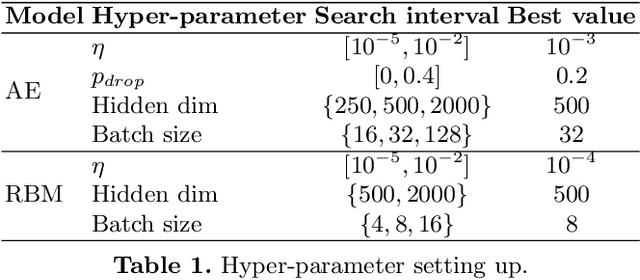

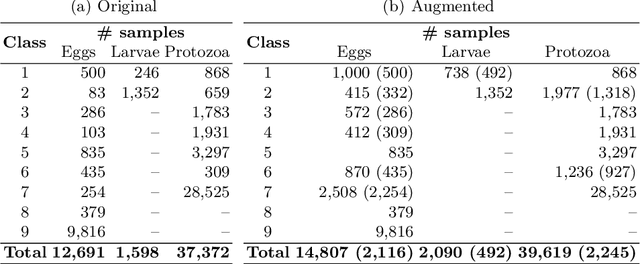

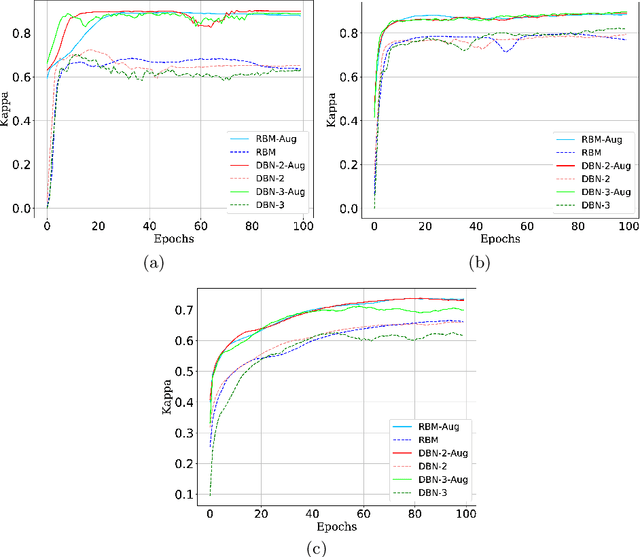

Abstract: Currently, approximately 4 billion people are infected by intestinal parasites worldwide. Diseases caused by such infections constitute a public health problem in most tropical countries, leading to physical and mental disorders, and even death in children and immunodeficient individuals. Although subject to high error rates, human visual inspection is still responsible for the vast majority of clinical diagnoses. In recent years, some works have addressed intelligent computer-aided classification of intestinal parasites, but they usually suffer from misclassification due to similarities between parasites and fecal impurities. In this paper, we introduce Deep Belief Networks to the context of automatic intestinal parasite classification. Experiments conducted over three datasets composed of eggs, larvae, and protozoa provided promising results, even considering unbalanced classes and fecal impurities.

Semi-supervised deep learning based on label propagation in a 2D embedded space

Aug 02, 2020

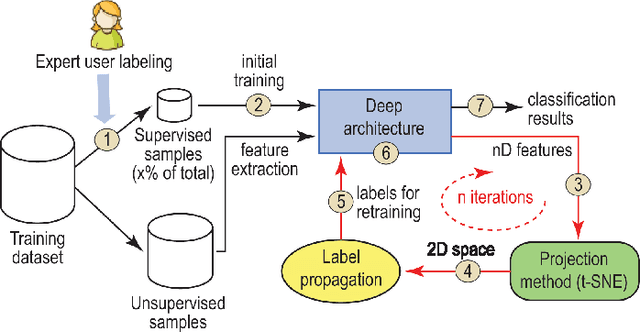

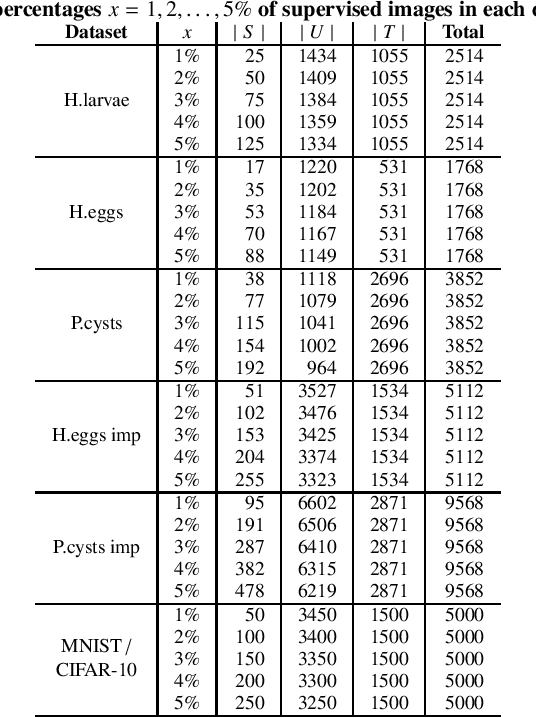

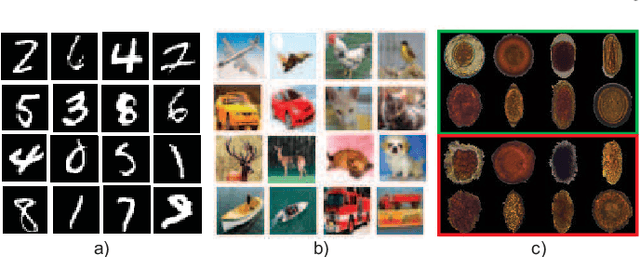

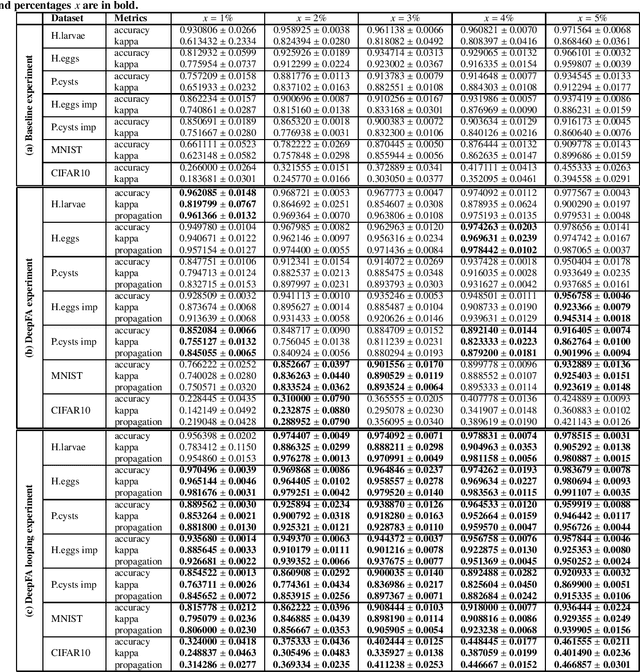

Abstract: While convolutional neural networks need large labeled sets of training images, expert human supervision of such datasets can be very laborious. Proposed solutions propagate labels from a small set of supervised images to a large set of unsupervised ones to obtain sufficient truly-and-artificially labeled samples to train a deep neural network model. Yet, such solutions need many supervised images for validation. We present a loop in which a deep neural network (VGG-16) is trained on a set that gains more correctly labeled samples over iterations. This set is created by using t-SNE to project the features of the network's last max-pooling layer into a 2D embedded space, in which labels are propagated using the Optimum-Path Forest semi-supervised classifier. As the labeled set improves over iterations, it improves the features of the neural network. We show that this can significantly improve classification results on test data (using only 1% to 5% of supervised samples) for three challenging private datasets and two public ones.
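The label-propagation step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the 2D projection already exists (synthetic Gaussian blobs stand in for t-SNE output), omits the VGG-16 training loop entirely, and replaces the Optimum-Path Forest semi-supervised classifier with a simplified stand-in that assigns each unlabeled point the label of the seed reachable through the path with the smallest maximum edge length. The names `propagate_labels` and `make_blob` are hypothetical.

```python
import heapq
import math
import random


def propagate_labels(points, seeds):
    """Propagate seed labels over 2D points using a max-edge path cost.

    points: list of (x, y) tuples, assumed to be an existing 2D projection.
    seeds:  dict mapping point index -> class label (the supervised samples).
    Returns a list with one label per point.
    """
    n = len(points)
    cost = [math.inf] * n
    label = [None] * n
    heap = []
    # Supervised samples start with zero cost and their known label.
    for i, y in seeds.items():
        cost[i] = 0.0
        label[i] = y
        heapq.heappush(heap, (0.0, i))

    done = [False] * n
    while heap:
        c, s = heapq.heappop(heap)
        if done[s]:
            continue  # stale heap entry
        done[s] = True
        for t in range(n):
            if done[t]:
                continue
            # Path cost is the largest edge on the path from a seed to t.
            new_cost = max(c, math.dist(points[s], points[t]))
            if new_cost < cost[t]:
                cost[t] = new_cost
                label[t] = label[s]
                heapq.heappush(heap, (new_cost, t))
    return label


def make_blob(center, n, rng, spread=0.3):
    """Draw n synthetic 2D points around a center (stand-in for a projection)."""
    cx, cy = center
    return [(rng.gauss(cx, spread), rng.gauss(cy, spread)) for _ in range(n)]


rng = random.Random(0)
points = make_blob((0.0, 0.0), 50, rng) + make_blob((5.0, 5.0), 50, rng)
truth = [0] * 50 + [1] * 50
seeds = {0: 0, 50: 1}  # one supervised sample per class (2% of the data)

predicted = propagate_labels(points, seeds)
accuracy = sum(p == t for p, t in zip(predicted, truth)) / len(truth)
print(f"propagation accuracy on synthetic blobs: {accuracy:.2f}")
```

In the loop the abstract describes, the propagated labels would then be used to retrain the network, whose updated features are projected again before the next round of propagation.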

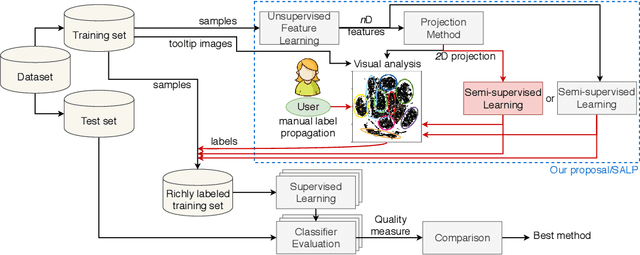

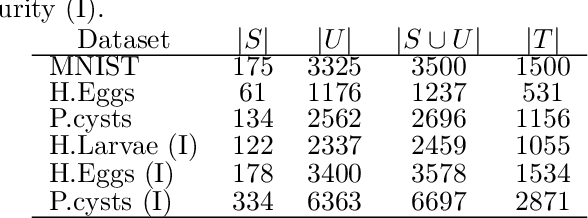

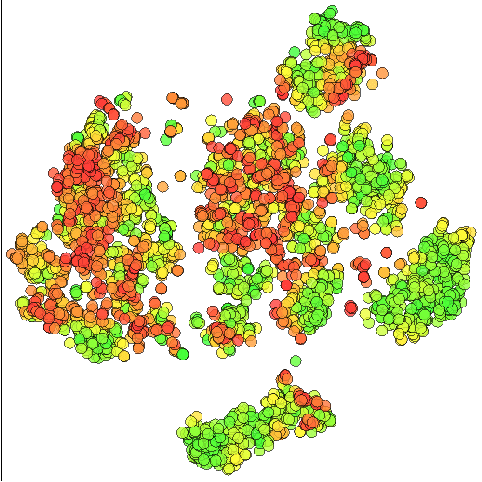

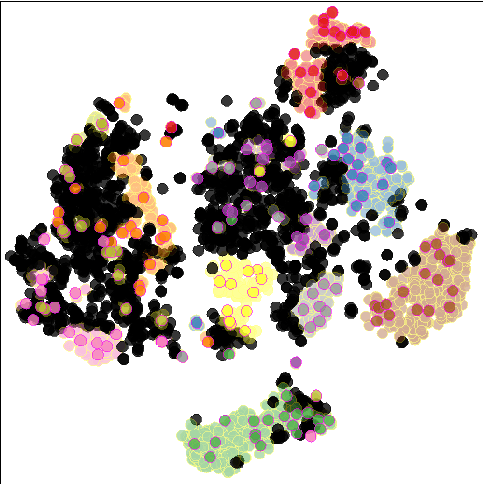

Semi-Automatic Data Annotation guided by Feature Space Projection

Jul 27, 2020

Abstract: Data annotation using visual inspection (supervision) of each training sample can be laborious. Interactive solutions alleviate this by helping experts propagate labels from a few supervised samples to unlabeled ones based solely on the visual analysis of their feature space projection (with no further sample supervision). We present a semi-automatic data annotation approach based on suitable feature space projection and semi-supervised label estimation. We validate our method on the popular MNIST dataset and on images of human intestinal parasites with and without fecal impurities, a large and diverse dataset that makes classification very hard. We evaluate two approaches for semi-supervised learning from the latent and projection spaces, to choose the one that best reduces user annotation effort and also increases classification accuracy on unseen data. Our results demonstrate the added value of visual analytics tools that combine complementary abilities of humans and machines for more effective machine learning.
