Baifu Huang

Mind to Hand: Purposeful Robotic Control via Embodied Reasoning

Dec 10, 2025

Abstract:Humans act with context and intention, with reasoning playing a central role. While internet-scale data has enabled broad reasoning capabilities in AI systems, grounding these abilities in physical action remains a major challenge. We introduce Lumo-1, a generalist vision-language-action (VLA) model that unifies robot reasoning ("mind") with robot action ("hand"). Our approach builds upon the general multi-modal reasoning capabilities of pre-trained vision-language models (VLMs), progressively extending them to embodied reasoning and action prediction, and ultimately towards structured reasoning and reasoning-action alignment. This results in a three-stage pre-training pipeline: (1) Continued VLM pre-training on curated vision-language data to enhance embodied reasoning skills such as planning, spatial understanding, and trajectory prediction; (2) Co-training on cross-embodiment robot data alongside vision-language data; and (3) Action training with reasoning process on trajectories collected on Astribot S1, a bimanual mobile manipulator with human-like dexterity and agility. Finally, we integrate reinforcement learning to further refine reasoning-action consistency and close the loop between semantic inference and motor control. Extensive experiments demonstrate that Lumo-1 achieves significant performance improvements in embodied vision-language reasoning, a critical component for generalist robotic control. Real-world evaluations further show that Lumo-1 surpasses strong baselines across a wide range of challenging robotic tasks, with strong generalization to novel objects and environments, excelling particularly in long-horizon tasks and responding to human-natural instructions that require reasoning over strategy, concepts and space.

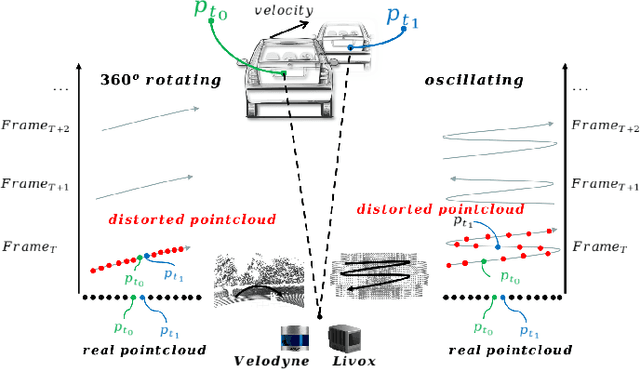

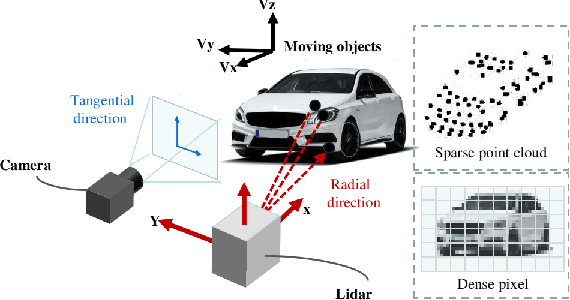

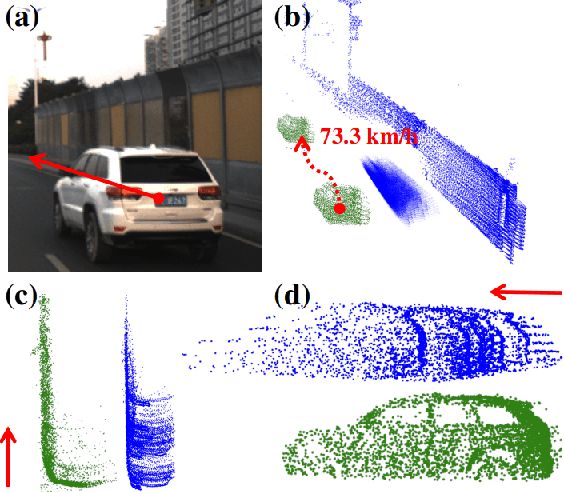

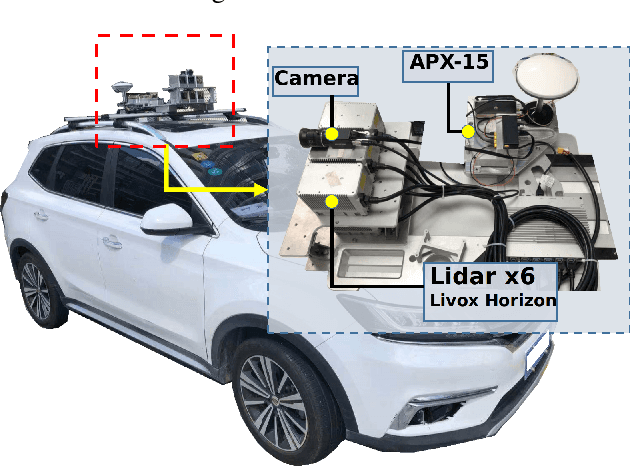

Lidar with Velocity: Motion Distortion Correction of Point Clouds from Oscillating Scanning Lidars

Nov 18, 2021

Abstract:Lidar point cloud distortion from moving objects is an important problem in autonomous driving, and has recently become even more demanding with the emergence of newer lidars, which feature back-and-forth scanning patterns. Accurately estimating a moving object's velocity would not only provide a tracking capability but also correct the point cloud distortion, giving a more accurate description of the moving object. Since lidar measures the time-of-flight distance but with a sparse angular resolution, the measurement is precise in the radial direction but lacks angular precision. A camera, on the other hand, provides a dense angular resolution. In this paper, Gaussian-based lidar and camera fusion is proposed to estimate the full velocity and correct the lidar distortion. A probabilistic Kalman-filter framework is provided to track the moving objects, estimate their velocities and simultaneously correct the point cloud distortions. The framework is evaluated on real road data, and the fusion method outperforms the traditional ICP-based and point-cloud-only methods. The complete working framework is open-sourced (https://github.com/ISEE-Technology/lidar-with-velocity) to accelerate the adoption of the emerging lidars.
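
The correction step mentioned in the abstract can be illustrated with a minimal sketch. It assumes the object's velocity is already known and constant over one scan, and simply shifts every point to a common reference time. This is not the paper's Gaussian fusion or Kalman-filter framework; the function name `undistort_points` and its parameters are hypothetical and do not come from the open-sourced repository.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def undistort_points(
    points: Sequence[Point],
    timestamps: Sequence[float],
    velocity: Point,
    t_ref: float,
) -> List[Point]:
    """Shift each lidar point on a moving object to where it would be at t_ref.

    Assumption (not from the paper): the object moves with a constant,
    already-estimated velocity during the scan.
    """
    if len(points) != len(timestamps):
        raise ValueError("points and timestamps must have the same length")

    vx, vy, vz = velocity
    corrected: List[Point] = []
    for (x, y, z), t in zip(points, timestamps):
        dt = t_ref - t  # time between this point's capture and the reference time
        corrected.append((x + vx * dt, y + vy * dt, z + vz * dt))
    return corrected


# Illustrative values: one physical point on an object moving at 10 m/s along x,
# sampled twice by a back-and-forth scan, 0.05 s apart.
scan = [(5.0, 0.0, 0.0), (5.5, 0.0, 0.0)]
times = [0.0, 0.05]
aligned = undistort_points(scan, times, (10.0, 0.0, 0.0), t_ref=0.05)
```

In this toy case both samples land on the same x position after correction, which is the effect that distortion correction aims for. In the framework the abstract describes, the velocity itself is estimated from lidar and camera data rather than supplied by hand.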
