Bahram Parvin

Multi-Aperture Fusion of Transformer-Convolutional Network (MFTC-Net) for 3D Medical Image Segmentation and Visualization

Jun 24, 2024

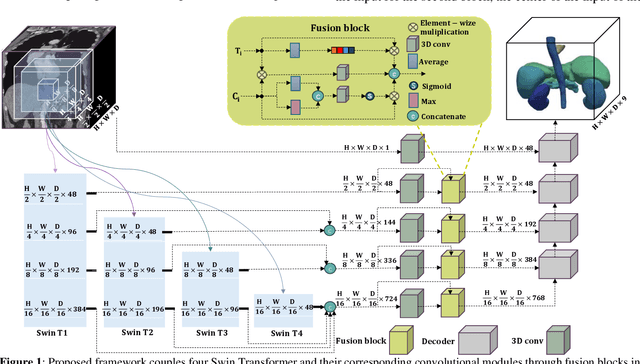

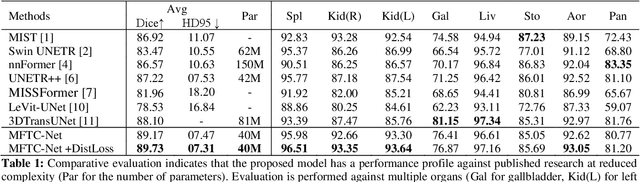

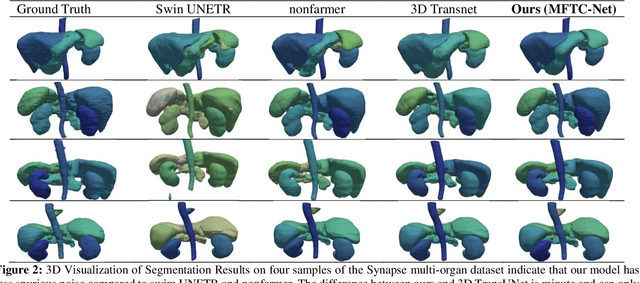

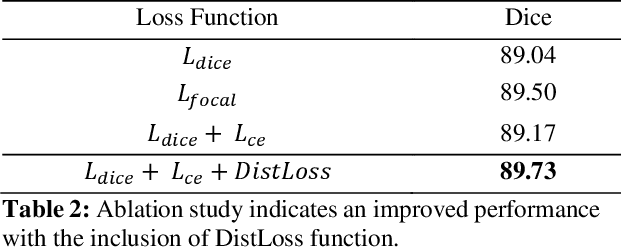

Abstract: Vision Transformers have outperformed traditional convolution-based frameworks in many vision applications, including the segmentation of 3D medical images. To further advance this area, this study introduces the Multi-Aperture Fusion of Transformer-Convolutional Network (MFTC-Net), which integrates the outputs of Swin Transformers and their corresponding convolutional blocks using 3D fusion blocks. The Multi-Aperture component combines each image patch at its original resolution with its pyramid representation to better preserve minute details. The proposed architecture achieves scores of 89.73 and 7.31 for Dice and HD95, respectively, on the Synapse multi-organ dataset, an improvement over published results. The improved performance also comes with the added benefit of reduced complexity, at approximately 40 million parameters. Our code is available at https://github.com/Siyavashshabani/MFTC-Net
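For readers unfamiliar with the Dice metric quoted in the abstract, the following is a minimal sketch of how a per-organ Dice score can be computed from two 3D label volumes. It is not taken from the MFTC-Net repository: the function name `dice_score`, the array shapes, and the label values are illustrative assumptions, and the abstract's figure of 89.73 is assumed here to be a Dice value on a 0 to 100 scale. HD95 is not covered by this sketch.

```python
import numpy as np


def dice_score(pred, target, label):
    """Dice overlap for one label between two integer label volumes.

    Hypothetical helper for illustration only; returns a value in [0, 1],
    or NaN when the label is absent from both volumes.
    """
    p = pred == label
    t = target == label
    denom = p.sum() + t.sum()
    if denom == 0:
        return float("nan")
    # Dice = 2 * |P intersect T| / (|P| + |T|)
    return 2.0 * np.logical_and(p, t).sum() / denom


# Toy 2x2x2 volumes: label 1 covers 4 voxels in pred and 2 in target,
# with 2 voxels in common.
pred = np.zeros((2, 2, 2), dtype=int)
target = np.zeros((2, 2, 2), dtype=int)
pred[0] = 1
target[0, 0] = 1

score = dice_score(pred, target, label=1)
print(f"Dice for label 1: {100 * score:.2f}")
```

On a multi-organ dataset, a score like this would typically be computed per organ label and then averaged; whether the paper averages in exactly this way is not stated in the abstract.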

Deep Learning Models Delineates Multiple Nuclear Phenotypes in H&E Stained Histology Sections

Feb 14, 2018

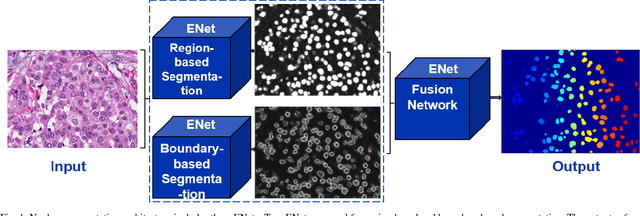

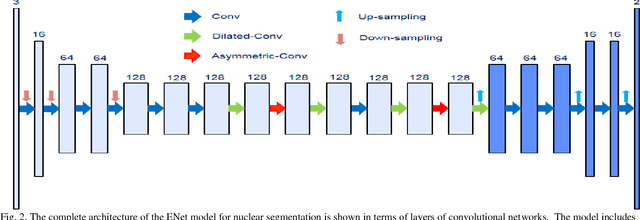

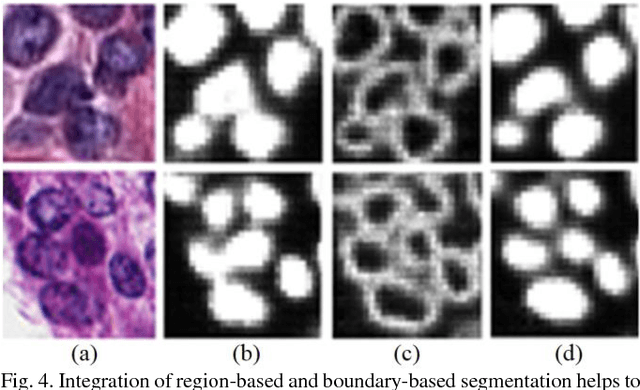

Abstract: Nuclear segmentation is an important step for profiling aberrant regions of histology sections. However, segmentation is a complex problem because of variations in nuclear geometry (e.g., size, shape), nuclear type (e.g., epithelial, fibroblast), and nuclear phenotype (e.g., vesicular, aneuploidy). The problem is further complicated by variations in sample preparation. It is shown and validated that the fusion of very deep convolutional networks overcomes (i) the complexities associated with multiple nuclear phenotypes, and (ii) the separation of overlapping nuclei. The fusion relies on integrating networks that learn region- and boundary-based representations. The system has been validated on a diverse set of nuclear phenotypes drawn from breast and brain histology sections.
