Deep Learning Models Delineates Multiple Nuclear Phenotypes in H&E Stained Histology Sections


Feb 14, 2018

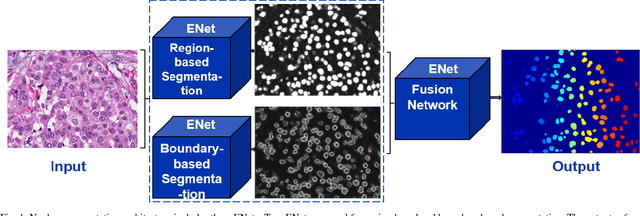

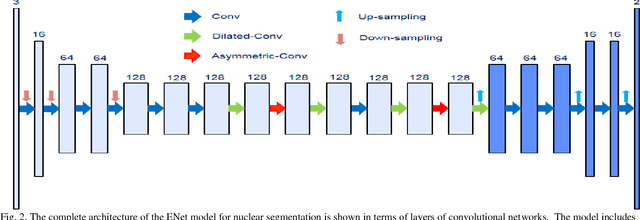

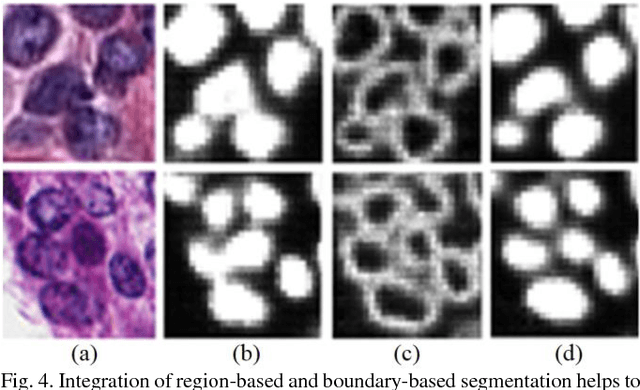

Nuclear segmentation is an important step for profiling aberrant regions of histology sections. However, segmentation is a complex problem because of variations in nuclear geometry (e.g., size, shape), nuclear type (e.g., epithelial, fibroblast), and nuclear phenotype (e.g., vesicular, aneuploid). Variations in sample preparation complicate the problem further. A fusion of very deep convolutional networks is shown and validated to overcome (i) the complexities associated with multiple nuclear phenotypes and (ii) the difficulty of separating overlapping nuclei. The fusion integrates networks that learn region- and boundary-based representations. The system has been validated on a diverse set of nuclear phenotypes from breast and brain histology sections.
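
To illustrate why combining region and boundary cues helps with overlapping nuclei, here is a minimal sketch. It is not the paper's method: the abstract describes a fusion of deep networks, whereas this toy uses a hand-written rule on two made-up probability maps. The function names `fuse_region_and_boundary` and `label_components`, the multiplicative rule, and the 0.5 threshold are all assumptions chosen for illustration.

```python
from collections import deque

import numpy as np


def fuse_region_and_boundary(region_prob, boundary_prob, threshold=0.5):
    """Hypothetical fusion rule (an assumption, not the paper's network):
    keep pixels that look like nuclear interior and do not look like boundary."""
    interior_score = region_prob * (1.0 - boundary_prob)
    return interior_score > threshold


def label_components(mask):
    """Label 4-connected foreground components with a breadth-first flood fill."""
    rows, cols = mask.shape
    labels = np.zeros(mask.shape, dtype=np.int32)
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or labels[r, c]:
                continue
            count += 1
            labels[r, c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = count
                        queue.append((ny, nx))
    return labels, count


# Toy data: two touching nuclei that a region map alone sees as one blob.
region_prob = np.zeros((5, 9))
region_prob[1:4, 1:8] = 0.9      # merged foreground, columns 1..7
boundary_prob = np.zeros((5, 9))
boundary_prob[1:4, 4] = 0.9      # boundary response where the nuclei touch

_, n_region_only = label_components(region_prob > 0.5)
_, n_fused = label_components(fuse_region_and_boundary(region_prob, boundary_prob))
print(n_region_only, n_fused)
```

In this toy case, thresholding the region map alone yields a single connected component, while suppressing the boundary response splits it into two. A learned fusion, as the abstract describes, would replace the fixed rule with a trained model.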
