Ayberk Yaraneri

Efficient Constrained Multi-Agent Interactive Planning using Constrained Dynamic Potential Games

Jun 17, 2022

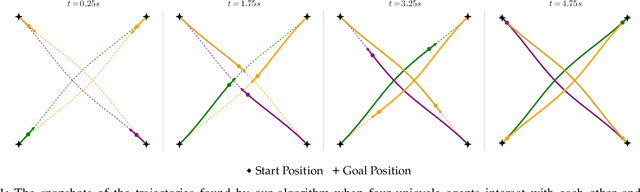

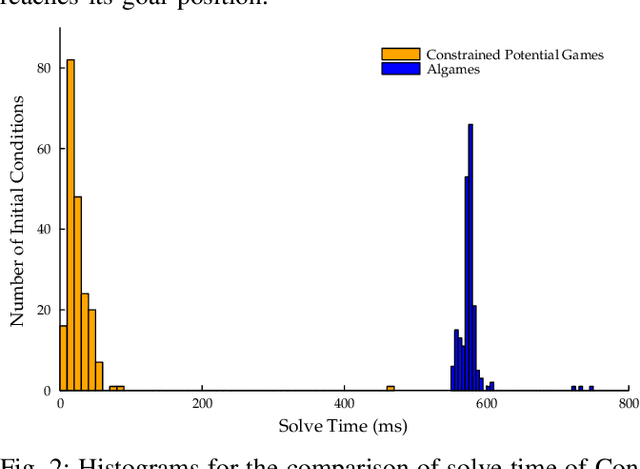

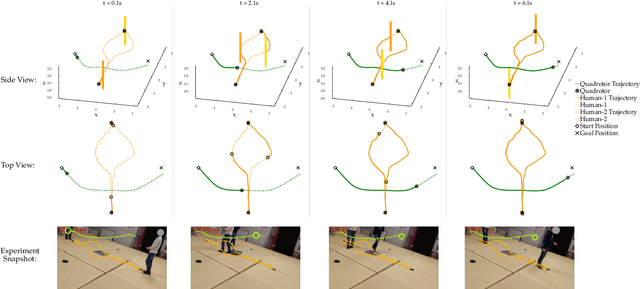

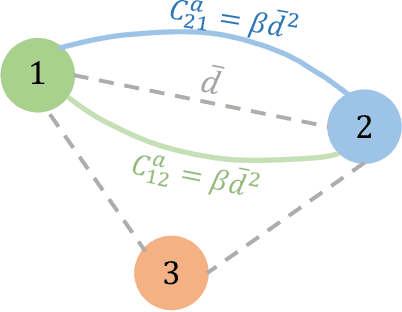

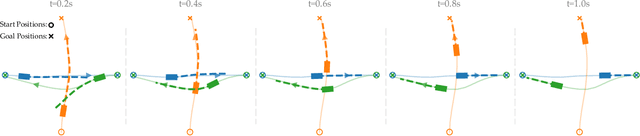

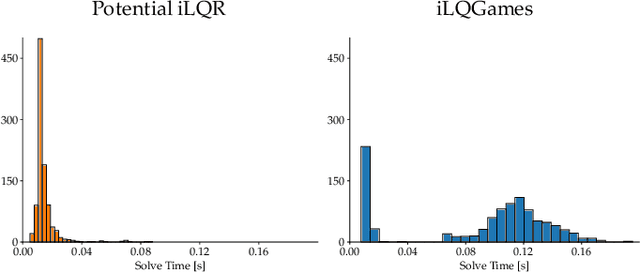

Abstract: Although dynamic games provide a rich paradigm for modeling agents' interactions, solving these games for real-world applications is often challenging. Many real-world interactive settings involve general nonlinear state and input constraints that couple agents' decisions with one another. In this work, we develop an efficient and fast planner for interactive planning in constrained setups using a constrained game-theoretic framework. Our key insight is to leverage the special structure of agents' objective and constraint functions that is common in multi-agent interactions for fast and reliable planning. More precisely, we identify the structure of agents' cost functions under which the resulting dynamic game is an instance of a constrained potential dynamic game. Constrained potential dynamic games are a class of games for which, instead of solving a set of coupled constrained optimal control problems, a Nash equilibrium can be found by solving a single constrained optimal control problem. This simplifies constrained interactive trajectory planning significantly. We compare the performance of our method in a navigation setup involving four planar agents and show that our method is, on average, 20 times faster than the state of the art. We further provide experimental validation of our proposed method in a navigation setup involving one quadrotor and two humans.
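The central claim above, that in a constrained potential game a Nash equilibrium can be obtained from a single constrained optimization, can be illustrated on a deliberately tiny, static (single-stage) toy rather than a dynamic game. The sketch below assumes each agent's cost is a private goal term plus a coupling term shared identically by both agents, and that both agents face one shared separation constraint; this cost structure, the goals `g`, the weight `w`, the separation `d`, and all function names are hypothetical choices for illustration, not taken from the paper. It minimizes the potential with projected gradient descent over the shared feasible set, then checks that neither agent's constrained best response moves it away from that solution.

```python
import numpy as np

# Hypothetical static toy: two agents on a line choose positions x1, x2.
# Agent i's cost: (x_i - g_i)^2 + w * (x1 - x2)^2 (shared term is identical for both).
g = np.array([1.0, 0.0])   # goals: the agents want to swap sides
w = 0.5                    # weight of the shared coupling term
d = 1.0                    # shared constraint: x2 - x1 >= d

def potential(x):
    # Private terms of both agents plus the shared term counted once.
    return (x[0] - g[0]) ** 2 + (x[1] - g[1]) ** 2 + w * (x[0] - x[1]) ** 2

def potential_grad(x):
    c = 2 * w * (x[0] - x[1])
    return np.array([2 * (x[0] - g[0]) + c, 2 * (x[1] - g[1]) - c])

def project(x):
    # Euclidean projection onto the half-plane {x : x2 - x1 >= d}.
    viol = d - (x[1] - x[0])
    if viol > 0:
        x = x + 0.5 * viol * np.array([-1.0, 1.0])
    return x

def solve_potential(x, step=0.25, iters=200):
    # Projected gradient descent; step = 1/L, where L = 4 is the largest
    # eigenvalue of this potential's Hessian [[3, -1], [-1, 3]] for w = 0.5.
    for _ in range(iters):
        x = project(x - step * potential_grad(x))
    return x

def best_response(i, x):
    # Agent i minimizes its own cost with the other agent fixed, subject to the
    # shared constraint. This is a 1-D convex quadratic on a half-line, so the
    # constrained minimizer is the unconstrained one clipped to the feasible side.
    other = x[1 - i]
    unconstrained = (g[i] + w * other) / (1 + w)
    if i == 0:
        return min(unconstrained, other - d)
    return max(unconstrained, other + d)

x_star = solve_potential(np.array([1.0, 0.0]))
print("potential minimizer:", x_star)
print("best responses:", best_response(0, x_star), best_response(1, x_star))
```

The check works because each agent's cost differs from the potential only by terms that agent does not control, so any feasible unilateral deviation changes that agent's cost by exactly the change in the potential; a constrained minimizer of the potential therefore leaves no agent a profitable deviation.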

Potential iLQR: A Potential-Minimizing Controller for Planning Multi-Agent Interactive Trajectories

Jul 10, 2021

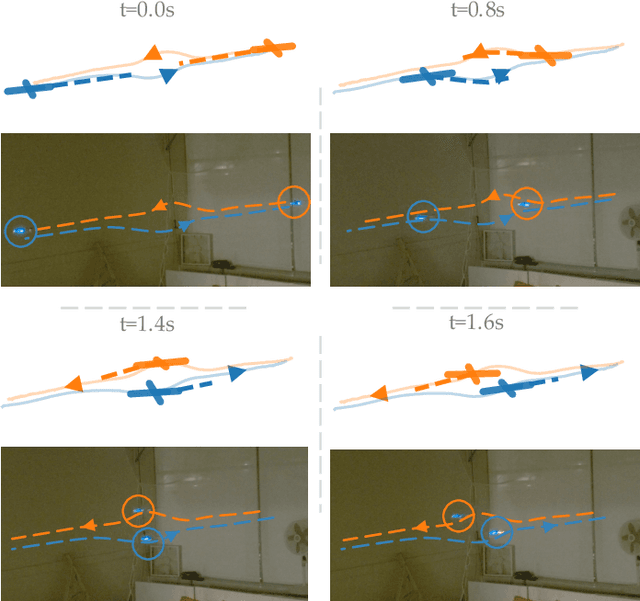

Abstract: Many robotic applications involve interactions between multiple agents, where an agent's decisions affect the behavior of other agents. Such behaviors can be captured by the equilibria of differential games, which provide an expressive framework for modeling the agents' mutual influence. However, finding the equilibria of differential games is in general challenging, as it involves solving a set of coupled optimal control problems. In this work, we propose to leverage the special structure of multi-agent interactions to generate interactive trajectories by solving a single optimal control problem, namely, the optimal control problem associated with minimizing the potential function of the differential game. Our key insight is that for a certain class of multi-agent interactions, the underlying differential game is a potential differential game, for which equilibria can be found by solving a single optimal control problem. We introduce such an optimal control problem and build on single-agent trajectory optimization methods to develop a computationally tractable and scalable algorithm for planning multi-agent interactive trajectories. We demonstrate the performance of our algorithm in simulation and show that it outperforms state-of-the-art game solvers. To further show the real-time capabilities of our algorithm, we demonstrate its application in a set of experiments involving interactive trajectories for two quadcopters.
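The idea of replacing a set of coupled optimal control problems with one potential-minimizing problem can be sketched on a hypothetical linear-quadratic toy: two scalar single-integrator agents, each with a private goal and effort cost plus a shared formation term that appears identically in both costs. The horizon `T`, the weights `q`, `r`, `w`, the offset `delta`, and all helper names are assumptions for illustration. Because everything here is linear-quadratic, the potential-minimization problem is solved with a single `np.linalg.lstsq` call in place of iLQR; an iterative trajectory optimizer such as iLQR would be needed for the nonlinear dynamics and costs the abstract targets. The final loop checks numerically that no agent's own cost has a nonzero gradient in its own controls.

```python
import numpy as np

# Hypothetical 1-D toy: two single-integrator agents, x_i[t+1] = x_i[t] + u_i[t].
T = 10                     # planning horizon
q, r, w = 1.0, 0.1, 2.0    # goal, effort and shared-coupling weights
delta = 1.0                # shared term prefers x1 - x2 == delta at every step
x0 = np.array([0.0, 0.0])  # initial positions
g = np.array([3.0, -1.0])  # goal positions
L = np.tril(np.ones((T, T)))  # cumulative sum: positions x[1..T] = x0 + L @ u

def rollout(u1, u2):
    return x0[0] + L @ u1, x0[1] + L @ u2

def own_cost(i, u1, u2):
    # Terms that depend only on agent i's own trajectory and controls.
    xi = rollout(u1, u2)[i]
    ui = (u1, u2)[i]
    return q * np.sum((xi - g[i]) ** 2) + r * np.sum(ui ** 2)

def shared_cost(u1, u2):
    # Coupling term that appears identically in both agents' costs.
    x1, x2 = rollout(u1, u2)
    return w * np.sum((x1 - x2 - delta) ** 2)

def agent_cost(i, u1, u2):
    return own_cost(i, u1, u2) + shared_cost(u1, u2)

def potential(u1, u2):
    # Own terms of every agent plus the shared term counted once.
    return own_cost(0, u1, u2) + own_cost(1, u1, u2) + shared_cost(u1, u2)

# The potential equals ||A u - b||^2 with u = [u1; u2], so in this LQ case the
# single optimal control problem is one linear least-squares solve.
Z, I = np.zeros((T, T)), np.eye(T)
sq, sr, sw = np.sqrt(q), np.sqrt(r), np.sqrt(w)
A = np.vstack([
    np.hstack([sq * L, Z]), np.hstack([Z, sq * L]),   # goal residuals
    np.hstack([sr * I, Z]), np.hstack([Z, sr * I]),   # effort residuals
    np.hstack([sw * L, -sw * L]),                     # shared coupling residual
])
b = np.concatenate([
    sq * (g[0] - x0[0]) * np.ones(T), sq * (g[1] - x0[1]) * np.ones(T),
    np.zeros(2 * T),
    sw * (delta - x0[0] + x0[1]) * np.ones(T),
])
u_star, *_ = np.linalg.lstsq(A, b, rcond=None)
u1, u2 = u_star[:T], u_star[T:]

def own_gradient(i, u1, u2, h=1e-6):
    # Central differences of J_i with respect to agent i's controls only.
    grad = np.zeros(T)
    for k in range(T):
        e = np.zeros(T)
        e[k] = h
        if i == 0:
            grad[k] = agent_cost(0, u1 + e, u2) - agent_cost(0, u1 - e, u2)
        else:
            grad[k] = agent_cost(1, u1, u2 + e) - agent_cost(1, u1, u2 - e)
    return grad / (2 * h)

# Nash check: each J_i is convex in u_i, so a zero own-gradient means
# no agent can lower its cost by deviating unilaterally.
for i in (0, 1):
    print(f"agent {i}: max |dJ_i/du_i| = {np.abs(own_gradient(i, u1, u2)).max():.2e}")
```

The shortcut relies on the assumed cost structure: agent 2's private terms do not depend on `u1` (and vice versa), so the gradient of the potential in each agent's controls coincides with the gradient of that agent's own cost.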
