Ayanna Howard

Mitigating Racial Biases in Toxic Language Detection with an Equity-Based Ensemble Framework

Sep 27, 2021

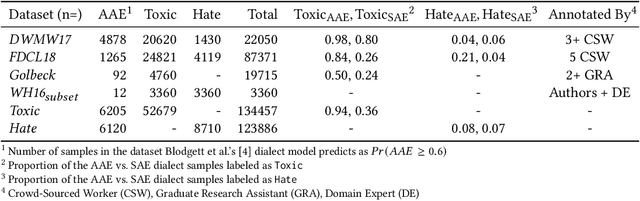

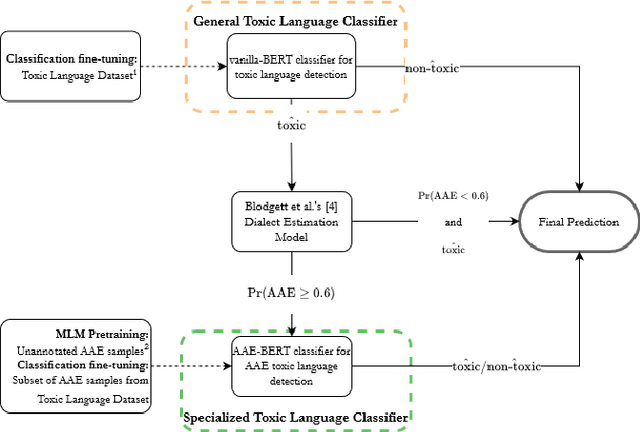

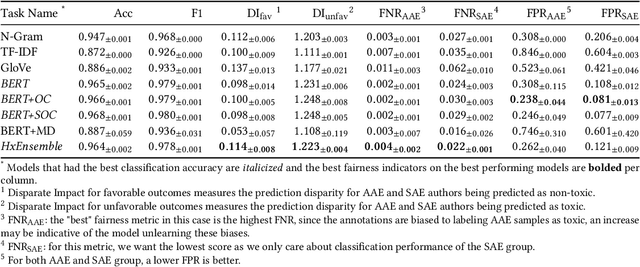

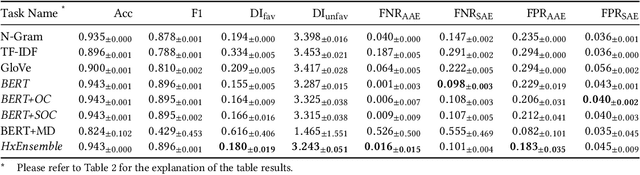

Abstract: Recent research has demonstrated that racial biases against users who write African American English exist in popular toxic language datasets. While previous work has focused on a single fairness criterion, we propose to use additional descriptive fairness metrics to better understand the source of these biases. We demonstrate that different benchmark classifiers, as well as two in-process bias-remediation techniques, propagate racial biases even in a larger corpus. We then propose a novel ensemble framework that uses a specialized classifier fine-tuned to the African American English dialect. We show that our proposed framework substantially reduces the racial biases that the model learns from these datasets. We demonstrate how the ensemble framework improves fairness metrics across all sample datasets with minimal impact on classification performance, and provide empirical evidence of its ability to unlearn the annotation biases towards authors who use African American English. Please note that this work may contain examples of offensive words and phrases.
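The abstract does not name the descriptive fairness metrics it uses. As an illustration only, the sketch below computes one commonly used metric, the per-group false positive rate and the gap between two groups. The function names, the group labels `"aae"` and `"other"`, and the toy data are all assumptions made for this example and are not taken from the paper.

```python
from collections import defaultdict


def false_positive_rates(labels, preds, groups):
    """Return {group: FPR}, where FPR = FP / (FP + TN) over non-toxic examples.

    labels and preds use 1 for "toxic" and 0 for "non-toxic".
    Groups with no non-toxic examples are omitted from the result.
    """
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for y, p, g in zip(labels, preds, groups):
        if y == 0:  # only truly non-toxic examples can be false positives
            negatives[g] += 1
            if p == 1:
                false_pos[g] += 1
    return {g: false_pos[g] / negatives[g] for g in negatives}


def fpr_gap(labels, preds, groups, group_a, group_b):
    """Difference in false positive rate between two groups (a minus b)."""
    rates = false_positive_rates(labels, preds, groups)
    return rates[group_a] - rates[group_b]


# Hypothetical toy data: five posts per dialect group.
labels = [0, 0, 0, 0, 1, 0, 0, 0, 0, 1]
preds = [1, 1, 0, 0, 1, 1, 0, 0, 0, 1]
groups = ["aae"] * 5 + ["other"] * 5

rates = false_positive_rates(labels, preds, groups)
gap = fpr_gap(labels, preds, groups, "aae", "other")
```

A positive gap here would mean that non-toxic posts from the first group are flagged as toxic more often than non-toxic posts from the second group.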

A Bayesian Framework for Nash Equilibrium Inference in Human-Robot Parallel Play

Jun 10, 2020

Abstract: We consider shared workspace scenarios with humans and robots acting to achieve independent goals, termed parallel play. We model these as general-sum games and construct a framework that utilizes the Nash equilibrium solution concept to consider the interactive effect of both agents while planning. We find multiple Pareto-optimal equilibria in these tasks. We hypothesize that people act by choosing an equilibrium based on social norms and their personalities. To enable coordination, we infer the equilibrium online using a probabilistic model that includes these two factors and use it to select the robot's action. We apply our approach to a close-proximity pick-and-place task involving a robot and a simulated human with three potential behaviors: defensive, selfish, and norm-following. We show that using a Bayesian approach to infer the equilibrium enables the robot to complete the task with less than half the number of collisions while also reducing the task execution time compared to the best baseline. We also performed a study with human participants interacting either with other humans or with different robot agents and observed that our proposed approach performs similarly to human-human parallel play interactions. The code is available at https://github.com/shray/bayes-nash
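The online inference step can be pictured as a Bayesian update over a small set of candidate equilibria. The sketch below is a generic illustration of that idea and is not taken from the linked repository: the equilibrium names, the likelihood values, and the function `update_posterior` are hypothetical, and the paper's model additionally accounts for social norms and personality, which are not modeled here.

```python
def update_posterior(prior, likelihoods):
    """One Bayesian update over candidate equilibria.

    prior:       {equilibrium: P(equilibrium)}
    likelihoods: {equilibrium: P(observed human action | equilibrium)}
    Returns the normalized posterior; falls back to the prior if every
    candidate assigns zero probability to the observation.
    """
    unnormalized = {eq: prior[eq] * likelihoods[eq] for eq in prior}
    total = sum(unnormalized.values())
    if total == 0:
        return dict(prior)
    return {eq: value / total for eq, value in unnormalized.items()}


# Hypothetical example: two equilibria that differ in who reaches first.
belief = {"human_first": 0.5, "robot_first": 0.5}

# Assumed likelihood of the observed human motion under each equilibrium.
observation = {"human_first": 0.8, "robot_first": 0.2}

belief = update_posterior(belief, observation)

# The robot could then plan against the most probable equilibrium.
most_likely = max(belief, key=belief.get)
```

Repeating the update as new observations arrive concentrates the belief on the equilibrium the human appears to be following.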

Does Removing Stereotype Priming Remove Bias? A Pilot Human-Robot Interaction Study

Jul 03, 2018

Abstract: Robots capable of participating in complex social interactions have shown great potential in a variety of applications. As these robots grow more popular, it is essential to continuously evaluate the dynamics of the human-robot relationship. One factor shown to have potential impacts on this critical relationship is the human projection of stereotypes onto social robots, a practice that is implicitly known to affect both developers and users of this technology. In this research, we therefore investigated how participants' perceptions of the robot interaction differ when stereotype priming is removed, which has not yet been common practice in similar studies. Given the stereotypes of emotions among ethnic groups, especially in the U.S., this study specifically sought to investigate the impact that robot "skin color" could have on the human perception of a robot's emotional expressive behavior. A between-subjects experiment with 198 individuals was conducted. The results showed no significant differences in the overall emotion classification or intensity ratings for the different robot skin colors. These results lend credence to our hypothesis that when individuals are not primed with information related to human stereotypes, robots are evaluated based on functional attributes rather than stereotypical attributes. This provides some confidence that robots, if designed correctly, can potentially be used as a tool to override stereotype-based biases associated with human behavior.
