Aurora Rofena

OmniMRI: A Unified Vision-Language Foundation Model for Generalist MRI Interpretation

Aug 24, 2025

Abstract:Magnetic Resonance Imaging (MRI) is indispensable in clinical practice but remains constrained by fragmented, multi-stage workflows encompassing acquisition, reconstruction, segmentation, detection, diagnosis, and reporting. While deep learning has achieved progress in individual tasks, existing approaches are often anatomy- or application-specific and lack generalizability across diverse clinical settings. Moreover, current pipelines rarely integrate imaging data with complementary language information that radiologists rely on in routine practice. Here, we introduce OmniMRI, a unified vision-language foundation model designed to generalize across the entire MRI workflow. OmniMRI is trained on a large-scale, heterogeneous corpus curated from 60 public datasets, over 220,000 MRI volumes and 19 million MRI slices, incorporating image-only data, paired vision-text data, and instruction-response data. Its multi-stage training paradigm, comprising self-supervised vision pretraining, vision-language alignment, multimodal pretraining, and multi-task instruction tuning, progressively equips the model with transferable visual representations, cross-modal reasoning, and robust instruction-following capabilities. Qualitative results demonstrate OmniMRI's ability to perform diverse tasks within a single architecture, including MRI reconstruction, anatomical and pathological segmentation, abnormality detection, diagnostic suggestion, and radiology report generation. These findings highlight OmniMRI's potential to consolidate fragmented pipelines into a scalable, generalist framework, paving the way toward foundation models that unify imaging and clinical language for comprehensive, end-to-end MRI interpretation.

Lesion-Aware Generative Artificial Intelligence for Virtual Contrast-Enhanced Mammography in Breast Cancer

May 05, 2025

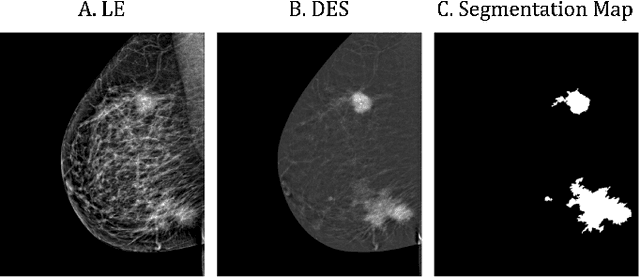

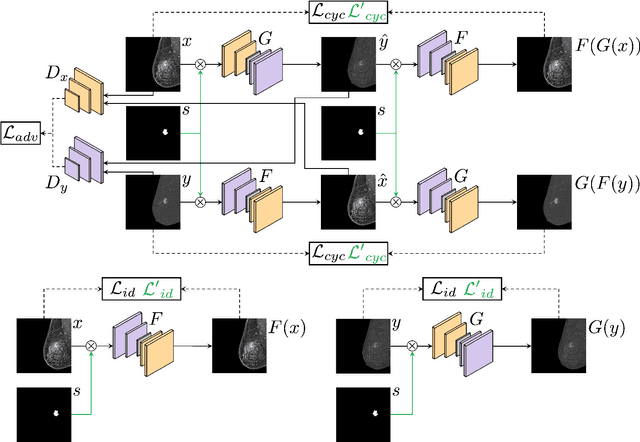

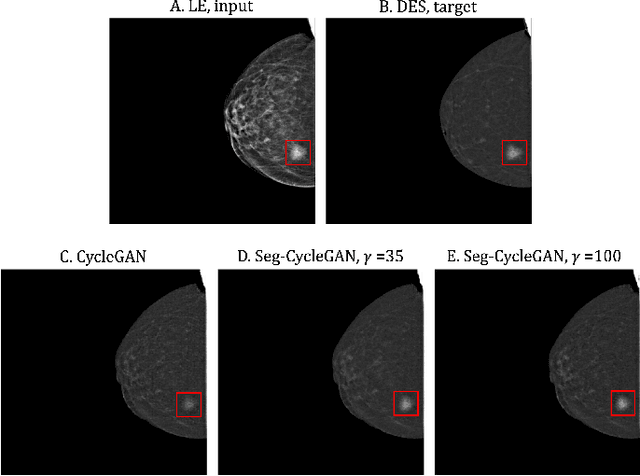

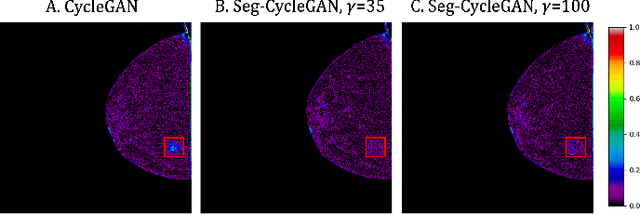

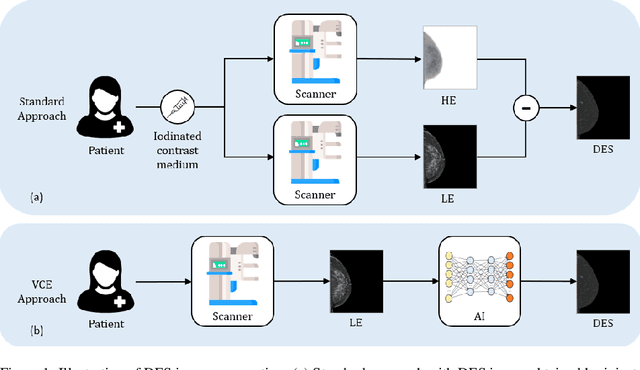

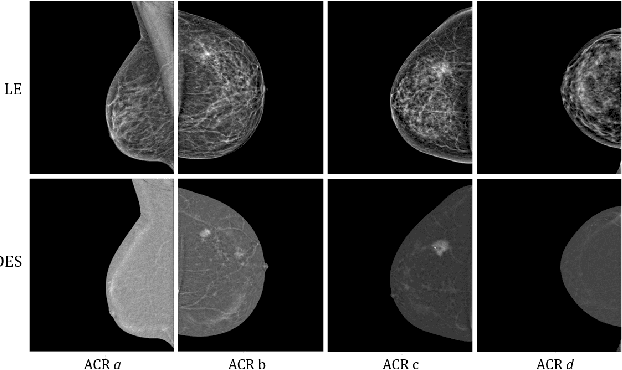

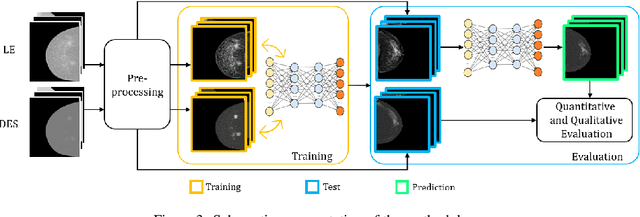

Abstract:Contrast-Enhanced Spectral Mammography (CESM) is a dual-energy mammographic technique that improves lesion visibility through the administration of an iodinated contrast agent. It acquires both a low-energy image, comparable to standard mammography, and a high-energy image, which are then combined to produce a dual-energy subtracted image highlighting lesion contrast enhancement. While CESM offers superior diagnostic accuracy compared to standard mammography, its use entails higher radiation exposure and potential side effects associated with the contrast medium. To address these limitations, we propose Seg-CycleGAN, a generative deep learning framework for Virtual Contrast Enhancement in CESM. The model synthesizes high-fidelity dual-energy subtracted images from low-energy images, leveraging lesion segmentation maps to guide the generative process and improve lesion reconstruction. Building upon the standard CycleGAN architecture, Seg-CycleGAN introduces localized loss terms focused on lesion areas, enhancing the synthesis of diagnostically relevant regions. Experiments on the CESM@UCBM dataset demonstrate that Seg-CycleGAN outperforms the baseline in terms of PSNR and SSIM, while maintaining competitive MSE and VIF. Qualitative evaluations further confirm improved lesion fidelity in the generated images. These results suggest that segmentation-aware generative models offer a viable pathway toward contrast-free CESM alternatives.
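
The abstract above mentions localized loss terms focused on lesion areas and reports PSNR among its metrics. The snippet below is only a minimal sketch of what such quantities could look like on flattened pixel lists; it is not the Seg-CycleGAN implementation. The function names, the `lam` weight, and the exact form of the lesion term (a global L1 plus an L1 averaged over mask pixels) are assumptions made for illustration.

```python
import math


def mse(a, b):
    """Mean squared error between two equally sized flat pixel lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def psnr(a, b, max_val=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_val]."""
    err = mse(a, b)
    return math.inf if err == 0 else 10 * math.log10(max_val ** 2 / err)


def lesion_weighted_l1(pred, target, mask, lam=1.0):
    """Global L1 plus lam times the L1 averaged over lesion pixels.

    `mask` holds 1 for lesion pixels and 0 elsewhere. This form is a
    hypothetical stand-in for a lesion-localized loss term.
    """
    global_l1 = sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)
    n_lesion = sum(mask)
    if n_lesion == 0:
        return global_l1  # no lesion in this image: fall back to the global term
    local_l1 = sum(m * abs(p - t) for p, t, m in zip(pred, target, mask)) / n_lesion
    return global_l1 + lam * local_l1


# Toy 4-pixel example: the only error sits inside the lesion mask.
target = [0.0, 0.0, 1.0, 1.0]
pred = [0.0, 0.0, 0.5, 1.0]
mask = [0, 0, 1, 1]

loss = lesion_weighted_l1(pred, target, mask)
quality = psnr(pred, target)
```

In this toy case the lesion term doubles the penalty on the mis-predicted pixel relative to its contribution to the global average, which is the intuition behind weighting diagnostically relevant regions more heavily.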

Augmented Intelligence for Multimodal Virtual Biopsy in Breast Cancer Using Generative Artificial Intelligence

Jan 31, 2025

Abstract:Full-Field Digital Mammography (FFDM) is the primary imaging modality for routine breast cancer screening; however, its effectiveness is limited in patients with dense breast tissue or fibrocystic conditions. Contrast-Enhanced Spectral Mammography (CESM), a second-level imaging technique, offers enhanced accuracy in tumor detection. Nonetheless, its application is restricted due to higher radiation exposure, the use of contrast agents, and limited accessibility. As a result, CESM is typically reserved for select cases, leaving many patients to rely solely on FFDM despite the superior diagnostic performance of CESM. While biopsy remains the gold standard for definitive diagnosis, it is an invasive procedure that can cause discomfort for patients. We introduce a multimodal, multi-view deep learning approach for virtual biopsy, integrating FFDM and CESM modalities in craniocaudal and mediolateral oblique views to classify lesions as malignant or benign. To address the challenge of missing CESM data, we leverage generative artificial intelligence to impute CESM images from FFDM scans. Experimental results demonstrate that incorporating the CESM modality is crucial to enhance the performance of virtual biopsy. When real CESM data is missing, synthetic CESM images proved effective, outperforming the use of FFDM alone, particularly in multimodal configurations that combine FFDM and CESM modalities. The proposed approach has the potential to improve diagnostic workflows, providing clinicians with augmented intelligence tools to improve diagnostic accuracy and patient care. Additionally, as a contribution to the research community, we publicly release the dataset used in our experiments, facilitating further advancements in this field.

A Systematic Review of Intermediate Fusion in Multimodal Deep Learning for Biomedical Applications

Aug 02, 2024

Abstract:Deep learning has revolutionized biomedical research by providing sophisticated methods to handle complex, high-dimensional data. Multimodal deep learning (MDL) further enhances this capability by integrating diverse data types such as imaging, textual data, and genetic information, leading to more robust and accurate predictive models. Unlike early and late fusion methods, intermediate fusion in MDL stands out for its ability to combine modality-specific features during the learning process. This systematic review aims to comprehensively analyze and formalize current intermediate fusion methods in biomedical applications. We investigate the techniques employed, the challenges faced, and potential future directions for advancing intermediate fusion methods. Additionally, we introduce a structured notation to enhance the understanding and application of these methods beyond the biomedical domain. Our findings are intended to support researchers, healthcare professionals, and the broader deep learning community in developing more sophisticated and insightful multimodal models. Through this review, we aim to provide a foundational framework for future research and practical applications in the dynamic field of MDL.
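
As a rough illustration of the idea of combining modality-specific features during learning, the sketch below encodes two modalities separately, concatenates the resulting feature vectors, and passes them to a joint head. It does not follow the review's notation; the layer sizes, weights, and names such as `intermediate_fusion` and `params` are hypothetical and chosen only to keep the example small.

```python
def linear(x, weights, bias):
    """Dense layer: one output value per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in x]


def intermediate_fusion(image_x, text_x, params):
    """Encode each modality on its own, then fuse at the feature level."""
    img_feat = relu(linear(image_x, *params["image_encoder"]))
    txt_feat = relu(linear(text_x, *params["text_encoder"]))
    fused = img_feat + txt_feat  # list concatenation of the two feature vectors
    return linear(fused, *params["joint_head"])


# Hand-picked toy weights (assumptions, not learned values).
params = {
    "image_encoder": ([[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]], [0.0, 0.0]),
    "text_encoder": ([[1.0, -1.0]], [0.0]),
    "joint_head": ([[1.0, 1.0, 1.0]], [0.0]),
}

output = intermediate_fusion([1.0, 2.0, 3.0], [2.0, 1.0], params)
```

By contrast, early fusion would concatenate the raw inputs before any encoder, and late fusion would combine the separate predictions of two complete models.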

A Deep Learning Approach for Virtual Contrast Enhancement in Contrast Enhanced Spectral Mammography

Aug 03, 2023

Abstract:Contrast Enhanced Spectral Mammography (CESM) is a dual-energy mammographic imaging technique that first requires intravenous administration of an iodinated contrast medium; it then acquires both a low-energy image, comparable to standard mammography, and a high-energy image. The two scans are then combined to obtain a recombined image showing contrast enhancement. Despite CESM's diagnostic advantages for breast cancer diagnosis, the use of contrast medium can cause side effects, and CESM also exposes patients to a higher radiation dose than standard mammography. To address these limitations, this work proposes to use deep generative models for virtual contrast enhancement on CESM, aiming to make CESM contrast-free as well as to reduce the radiation dose. Our deep networks, consisting of an autoencoder and two Generative Adversarial Networks, Pix2Pix and CycleGAN, generate synthetic recombined images solely from low-energy images. We perform an extensive quantitative and qualitative analysis of the models' performance, also exploiting radiologists' assessments, on a novel CESM dataset of 1138 images that, as a further contribution of this work, we make publicly available. The results show that CycleGAN is the most promising deep network to generate synthetic recombined images, highlighting the potential of artificial intelligence techniques for virtual contrast enhancement in this field.
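
For readers unfamiliar with CycleGAN, the sketch below shows the cycle-consistency term that characterizes that architecture: an image translated to the other domain and back should match the original under an L1 penalty. The toy "generators" here are plain scaling functions standing in for neural networks; their names and behavior are assumptions for illustration and say nothing about the networks trained in this work.

```python
def l1(a, b):
    """Mean absolute error between two equally sized flat pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x, y, g_xy, g_yx):
    """L1 cycle loss: x -> g_xy -> g_yx should return x, and likewise for y."""
    return l1(g_yx(g_xy(x)), x) + l1(g_xy(g_yx(y)), y)


# Hypothetical stand-ins for the two generators.
def to_recombined(img):
    return [2.0 * v for v in img]


def to_low_energy(img):
    return [0.5 * v for v in img]


def lossy_to_low_energy(img):
    return [0.5 * v + 0.1 for v in img]


low_energy = [0.25, 0.5]
recombined = [0.5, 1.0]

# Exact inverses give zero cycle loss; an imperfect inverse is penalized.
perfect = cycle_consistency_loss(low_energy, recombined, to_recombined, to_low_energy)
imperfect = cycle_consistency_loss(low_energy, recombined, to_recombined, lossy_to_low_energy)
```

In a full CycleGAN this term is added to the adversarial losses of both generators, which lets the model train without pixel-aligned image pairs.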
