Ashok Panigrahy

Medical Knowledge-Guided Deep Learning for Imbalanced Medical Image Classification

Nov 20, 2021

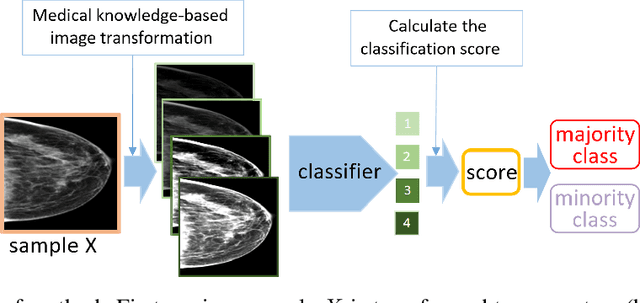

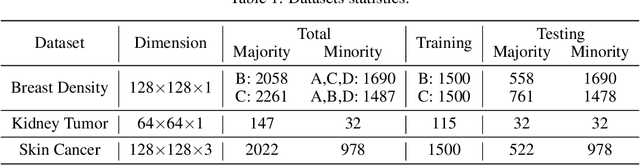

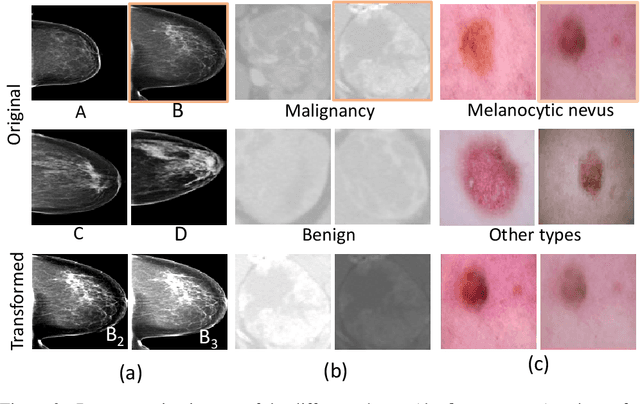

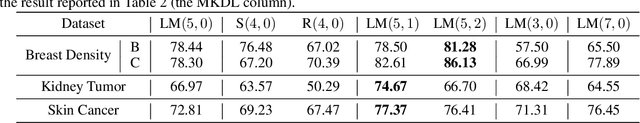

Abstract: Deep learning models have achieved remarkable performance on a variety of image classification tasks. However, many models perform poorly in clinical or medical settings when the data are imbalanced. To address this challenge, we propose a medical-knowledge-guided one-class classification approach that leverages domain-specific knowledge of the classification task to boost model performance. The rationale behind our approach is that existing prior medical knowledge can be incorporated into data-driven deep learning to facilitate model learning. We design a deep learning-based one-class classification pipeline for imbalanced image classification, and demonstrate in three use cases how medical knowledge of each specific classification task can be exploited by generating additional middle classes to achieve higher classification performance. We evaluate our approach on three different clinical image classification tasks (a total of 8459 images) and show superior model performance compared to six state-of-the-art methods. All code for this work will be made publicly available upon acceptance of the paper.

Constrained Deep One-Class Feature Learning For Classifying Imbalanced Medical Images

Nov 20, 2021

Abstract: Medical image data are usually imbalanced across different classes. One-class classification has attracted increasing attention as a way to address the data imbalance problem by distinguishing the samples of the minority class from those of the majority class. Previous methods generally aim either to learn a new feature space that maps training samples close together or to fit training samples with autoencoder-like models. These methods mainly focus on capturing either compact or descriptive features, so the information in the samples of the given class is not sufficiently utilized. In this paper, we propose a novel deep learning-based method that learns compact features by adding constraints on the bottleneck features and preserves descriptive features by training an autoencoder at the same time. By jointly optimizing the constraining loss and the autoencoder's reconstruction loss, our method can learn more relevant features associated with the given class, making the majority and minority samples more distinguishable. Experimental results on three clinical datasets (MRI breast images, FFDM breast images, and chest X-ray images) show state-of-the-art performance compared to previous methods.
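
The joint objective described in this abstract can be illustrated with a minimal sketch. The abstract does not specify the form of the constraint on the bottleneck features, so the compactness term below (mean squared distance of the features to their centroid), the function names, and the `weight` parameter are all assumptions made for illustration, not the paper's definition.

```python
from typing import Sequence

Vector = Sequence[float]


def reconstruction_loss(inputs: Sequence[Vector], outputs: Sequence[Vector]) -> float:
    """Mean squared error between inputs and their reconstructions."""
    total = 0.0
    count = 0
    for x, y in zip(inputs, outputs):
        for a, b in zip(x, y):
            total += (a - b) ** 2
            count += 1
    return total / count


def compactness_loss(features: Sequence[Vector]) -> float:
    """Mean squared distance of bottleneck features to their centroid.

    Assumed stand-in for the paper's constraining loss, which the
    abstract does not define.
    """
    n = len(features)
    dim = len(features[0])
    centroid = [sum(f[d] for f in features) / n for d in range(dim)]
    squared = [sum((f[d] - centroid[d]) ** 2 for d in range(dim)) for f in features]
    return sum(squared) / n


def joint_loss(inputs: Sequence[Vector], outputs: Sequence[Vector],
               features: Sequence[Vector], weight: float = 1.0) -> float:
    """Reconstruction term plus a weighted compactness term (hypothetical weighting)."""
    return reconstruction_loss(inputs, outputs) + weight * compactness_loss(features)
```

Under these assumptions, a small `weight` favors descriptive (well-reconstructed) features, while a large `weight` pulls the bottleneck features of the given class closer together.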

Knowledge-Guided Multiview Deep Curriculum Learning for Elbow Fracture Classification

Oct 20, 2021

Abstract: Elbow fracture diagnosis often requires both frontal and lateral views of a patient's elbow X-ray radiographs. In this paper, we propose a multiview deep learning method for an elbow fracture subtype classification task. Our strategy leverages transfer learning by first training two single-view models, one for the frontal view and the other for the lateral view, and then transferring the weights to the corresponding layers in the proposed multiview network architecture. Meanwhile, quantitative medical knowledge is integrated into the training process through a curriculum learning framework, which enables the model to first learn from "easier" samples and then transition to "harder" samples to reach better performance. In addition, our multiview network can work both in a dual-view setting and with a single view as input. We evaluate our method through extensive experiments on an elbow fracture classification task with a dataset of 1,964 images. Results show that our method outperforms two related methods from bone fracture studies in multiple settings, and that our technique can boost the performance of the compared methods. The code is available at https://github.com/ljaiverson/multiview-curriculum.

* MICCAI 2021 workshop. DOI: https://doi.org/10.1007/978-3-030-87589-3_57. URL: https://link.springer.com/chapter/10.1007/978-3-030-87589-3_57
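
The "easier first, harder later" idea in the abstract above can be sketched as a staged schedule. This is a simplified illustration only: the difficulty scores, the function name `curriculum_stages`, and the scheme of growing the training pool in equal steps are assumptions, and the authors' actual scheduler (see their repository) may differ.

```python
from typing import Dict, List


def curriculum_stages(difficulty: Dict[str, float], num_stages: int) -> List[List[str]]:
    """Return one training pool per stage, easiest samples first.

    `difficulty` maps a sample id to a hypothetical knowledge-derived
    difficulty score (lower means easier). Each stage adds harder samples
    until the final stage contains the full dataset.
    """
    if num_stages < 1:
        raise ValueError("num_stages must be at least 1")
    ordered = sorted(difficulty, key=lambda sample_id: difficulty[sample_id])
    stages = []
    for stage in range(1, num_stages + 1):
        # Ceiling division so the last stage always covers every sample.
        cutoff = -(-len(ordered) * stage // num_stages)
        stages.append(ordered[:cutoff])
    return stages


if __name__ == "__main__":
    scores = {"a": 0.9, "b": 0.1, "c": 0.5, "d": 0.3}
    for index, pool in enumerate(curriculum_stages(scores, 2), start=1):
        print(f"stage {index}: {pool}")
```

A training loop would iterate over the returned pools in order, training for some number of epochs on each before moving to the next.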
