Arturo Mendoza

Double Descent Meets Out-of-Distribution Detection: Theoretical Insights and Empirical Analysis on the role of model complexity

Nov 04, 2024

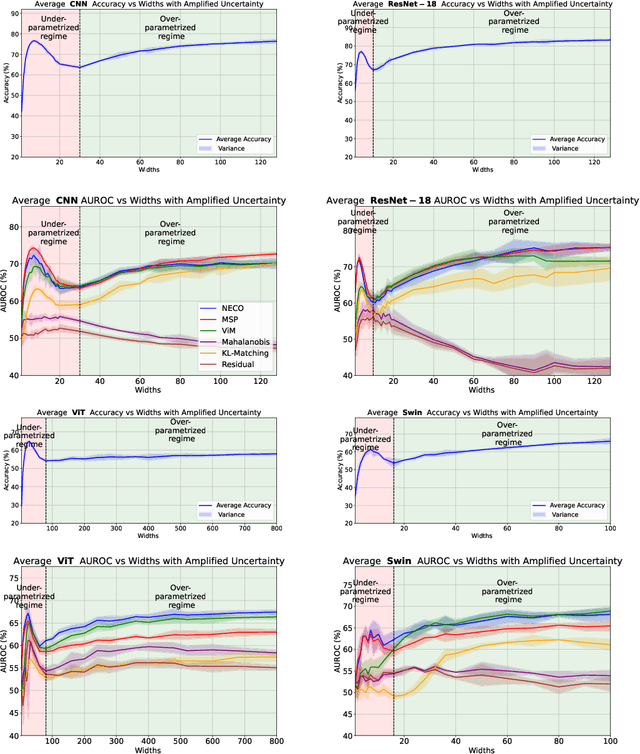

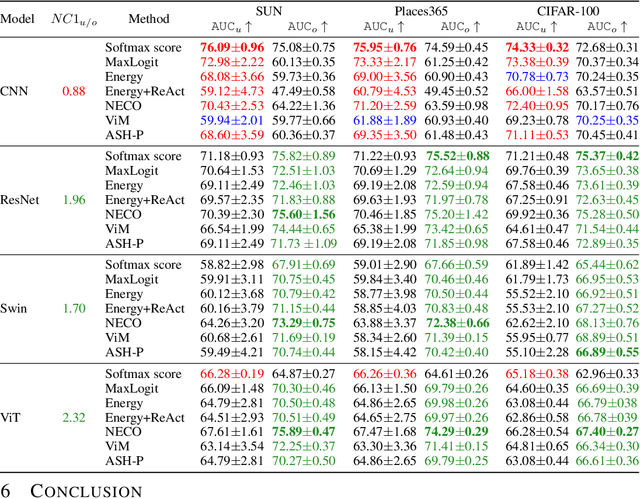

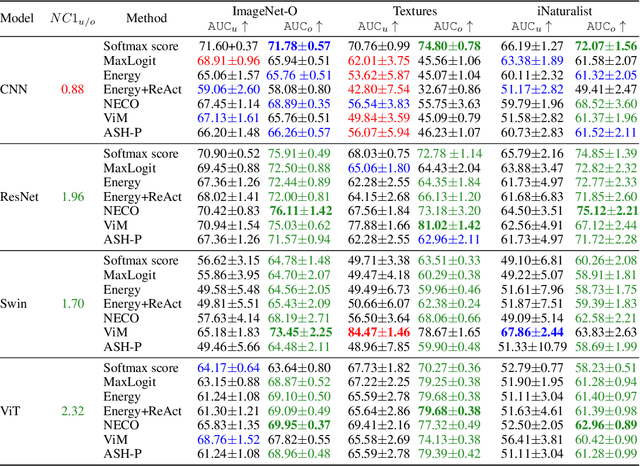

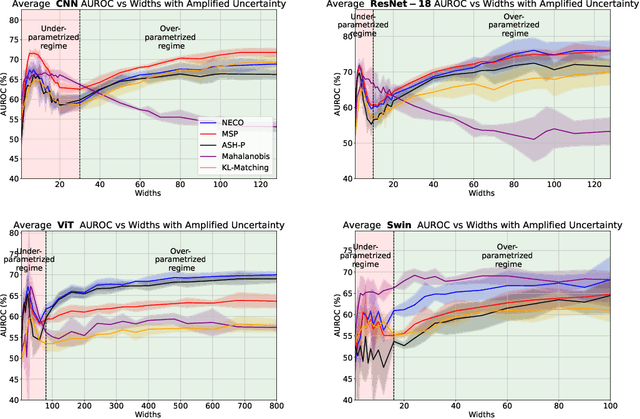

Abstract: While overparameterization is known to benefit generalization, its impact on Out-Of-Distribution (OOD) detection is less understood. This paper investigates the influence of model complexity on OOD detection. We propose an expected OOD risk metric to evaluate classifiers' confidence on both training and OOD samples. Leveraging Random Matrix Theory, we derive bounds for the expected OOD risk of binary least-squares classifiers applied to Gaussian data. We show that the OOD risk exhibits an infinite peak when the number of parameters equals the number of samples, which we associate with the double descent phenomenon. Our experimental study on different OOD detection methods across multiple neural architectures extends our theoretical insights and highlights a double descent curve. Our observations suggest that overparameterization does not necessarily lead to better OOD detection. Using the Neural Collapse framework, we provide insights to better understand this behavior. To facilitate reproducibility, our code will be made publicly available upon publication.
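The peak at the point where the number of parameters equals the number of samples can be illustrated with a small simulation. The sketch below is not the paper's expected OOD risk metric, which the abstract does not define; it only measures the ordinary test squared error of a minimum-norm binary least-squares classifier on Gaussian data as the number of used features `p` varies. The function name `test_risk_curve`, the label model, and all sizes are assumptions chosen for illustration.

```python
import numpy as np


def test_risk_curve(n_train=40, d_total=160, widths=(10, 20, 40, 80, 160),
                    n_test=2000, n_trials=20, seed=0):
    """Average test squared error of min-norm least squares vs. feature count p.

    Hypothetical setup (not taken from the paper): inputs are standard
    Gaussian, labels are the sign of a random linear function of all
    d_total features, and the classifier only sees the first p features.
    """
    rng = np.random.default_rng(seed)
    risks = {p: 0.0 for p in widths}
    for _ in range(n_trials):
        beta = rng.standard_normal(d_total) / np.sqrt(d_total)
        X_tr = rng.standard_normal((n_train, d_total))
        X_te = rng.standard_normal((n_test, d_total))
        y_tr = np.sign(X_tr @ beta)  # binary labels in {-1, +1}
        y_te = np.sign(X_te @ beta)
        for p in widths:
            # The pseudo-inverse gives the least-squares solution for
            # p < n_train and the minimum-norm interpolator for p >= n_train.
            w = np.linalg.pinv(X_tr[:, :p]) @ y_tr
            err = np.mean((X_te[:, :p] @ w - y_te) ** 2)
            risks[p] += err / n_trials
    return risks


if __name__ == "__main__":
    for p, r in test_risk_curve().items():
        print(f"p = {p:4d}  mean test squared error = {r:.2f}")
```

With these assumed settings, the error should spike around `p = n_train` and fall again on either side, which is the double descent shape the abstract refers to. The finite height of the simulated peak comes from averaging a finite number of trials.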

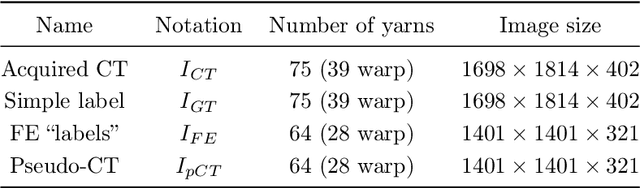

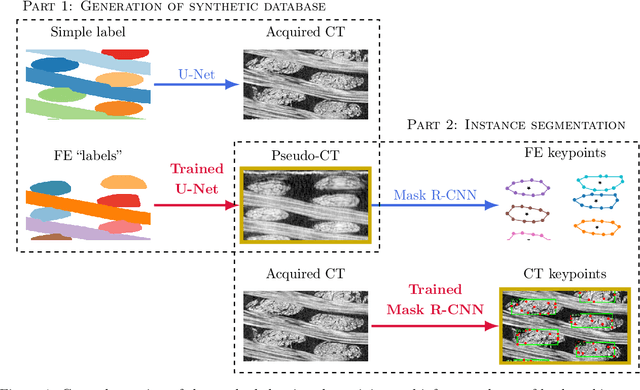

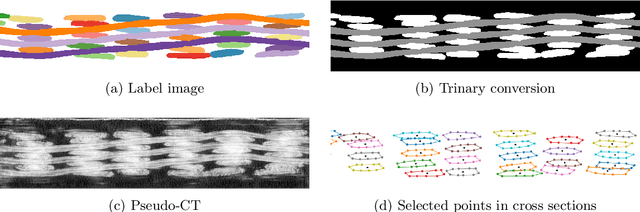

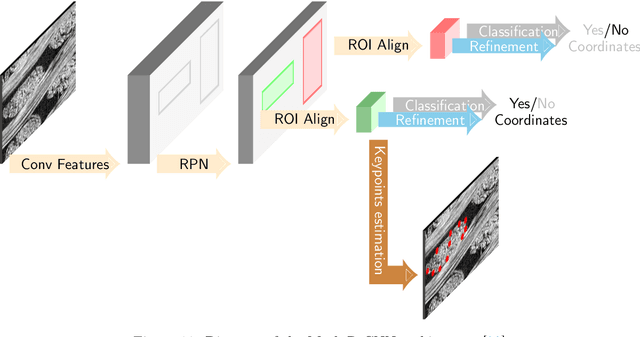

Descriptive Modeling of Textiles using FE Simulations and Deep Learning

Jun 26, 2021

Abstract: In this work we propose a novel and fully automated method for extracting the geometrical features of yarns in woven composites so that a direct parametrization of the textile reinforcement is achieved (e.g., FE mesh). Thus, our aim is not only to perform yarn segmentation from tomographic images but also to provide a complete descriptive modeling of the fabric. As such, this direct approach improves on previous methods that use voxel-wise masks as intermediate representations followed by re-meshing operations (yarn envelope estimation). The proposed approach employs two deep neural network architectures (U-Net and Mask R-CNN). First, we train the U-Net to generate synthetic CT images from the corresponding FE simulations. This makes it possible to generate large quantities of annotated data without requiring costly manual annotations. This data is then used to train the Mask R-CNN, which is focused on predicting contour points around each of the yarns in the image. Experimental results show that our method is accurate and robust for performing yarn instance segmentation on CT images; this is further validated by quantitative and qualitative analyses.
