Arttu Lämsä

Iterative Learning for Instance Segmentation

Feb 18, 2022

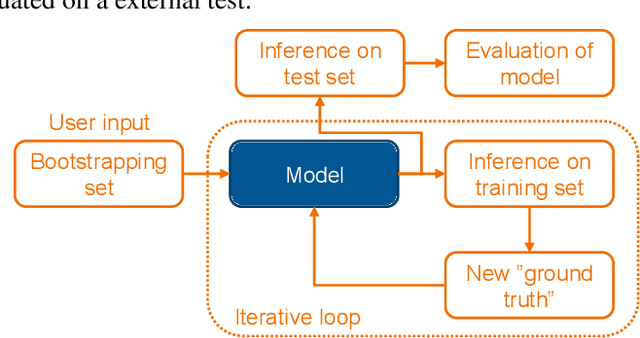

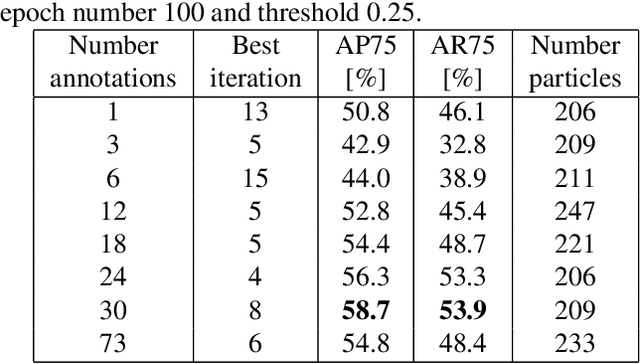

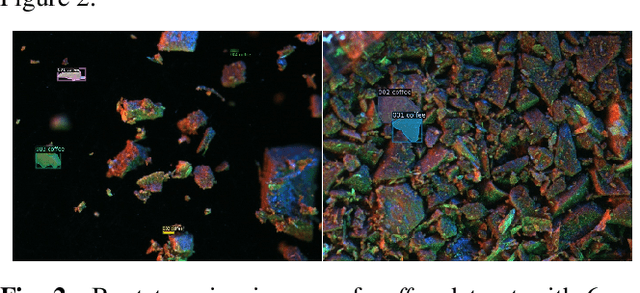

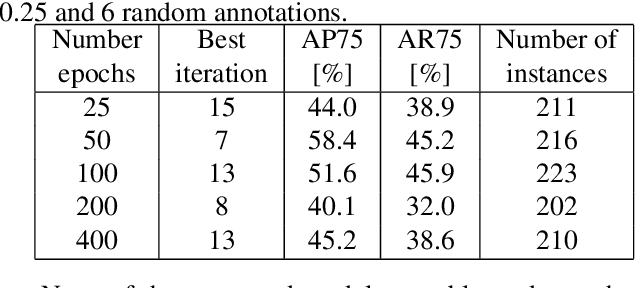

Abstract: Instance segmentation is a computer vision task in which separate objects in an image are detected and segmented. State-of-the-art deep neural network models require large amounts of labeled data to perform well in this task, and producing these annotations is time-consuming. We propose, for the first time, an iterative learning and annotation method that can detect, segment and annotate instances in datasets composed of multiple similar objects. The approach requires minimal human intervention and needs only a bootstrapping set containing very few annotations. Experiments on two different datasets show the validity of the approach in different applications related to visual inspection.
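The abstract does not spell out the loop itself, so the following is only a minimal sketch of one common way to grow annotations from a small bootstrapping set: train, predict on the unannotated pool, keep confident predictions as new annotations, and repeat. The function `iterative_annotation`, the `threshold` and `max_rounds` parameters, and the toy nearest-centroid `train` and `predict` helpers are all hypothetical stand-ins, not the paper's segmentation model or its acceptance rule.

```python
def iterative_annotation(labeled, unlabeled, train, predict,
                         threshold=0.9, max_rounds=5):
    """Grow a labeled set from a small bootstrapping set (illustrative only)."""
    labeled = list(labeled)
    pool = list(unlabeled)
    for _ in range(max_rounds):
        model = train(labeled)              # retrain on everything annotated so far
        remaining, added = [], 0
        for item in pool:
            label, score = predict(model, item)
            if score >= threshold:          # assumed rule: accept confident predictions
                labeled.append((item, label))
                added += 1
            else:
                remaining.append(item)
        pool = remaining
        if added == 0 or not pool:          # stop when nothing new is accepted
            break
    return labeled, pool


# Toy stand-ins: 1-D points, nearest-centroid "model" (hypothetical).
def train(labeled):
    groups = {}
    for x, y in labeled:
        groups.setdefault(y, []).append(x)
    return {y: sum(v) / len(v) for y, v in groups.items()}


def predict(model, x):
    label = min(model, key=lambda y: abs(model[y] - x))
    return label, 1.0 / (1.0 + abs(model[label] - x))


bootstrap = [(0.0, "a"), (10.0, "b")]
labeled, pool = iterative_annotation(bootstrap, [0.5, 1.0, 9.5],
                                     train, predict, threshold=0.55)
```

In this toy run the point `1.0` is rejected in the first round and accepted in the second, once the centroid for `"a"` has moved toward it, which is the effect an iterative scheme relies on.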

Video2IMU: Realistic IMU features and signals from videos

Feb 14, 2022

Abstract: Human Activity Recognition (HAR) from wearable sensor data identifies movements or activities in unconstrained environments. HAR is a challenging problem because it presents great variability across subjects. Obtaining large amounts of labelled data is not straightforward, since wearable sensor signals are not easy to label by simple human inspection. In our work, we propose the use of neural networks to generate realistic signals and features from monocular videos of human activity. We show how these generated features and signals can be used, instead of their real counterparts, to train HAR models that recognize activities from signals obtained with wearable sensors. To prove the validity of our methods, we perform experiments on an activity recognition dataset created for the improvement of industrial work safety. We show that our model is able to realistically generate virtual sensor signals and features usable to train a HAR classifier whose performance is comparable to that of a classifier trained on real sensor data. Our results enable the use of available, labelled video data for training HAR models to classify signals from wearable sensors.

Computational Graph Approach for Detection of Composite Human Activities

Dec 05, 2018

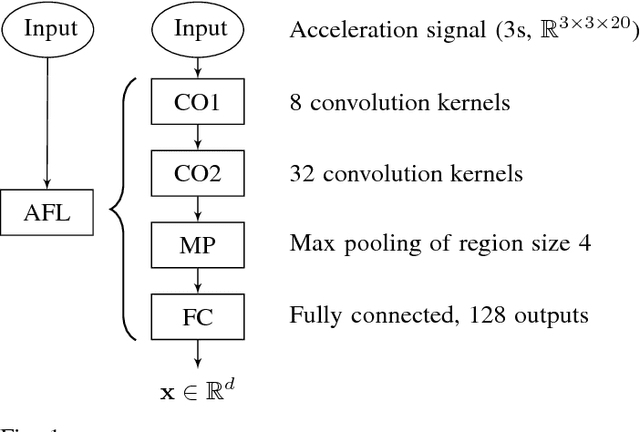

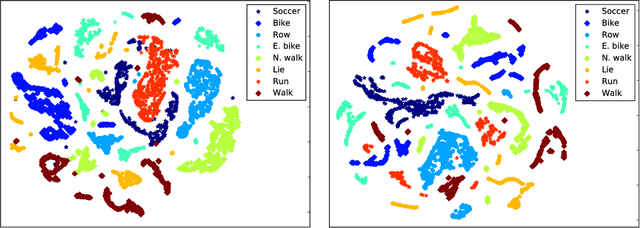

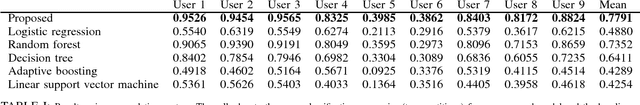

Abstract: Existing work in human activity detection classifies physical activities using a single fixed-length subset of a sensor signal. However, temporally consecutive subsets of a sensor signal are not utilized. This is not optimal for classifying physical activities (composite activities) that are composed of a temporal series of simpler activities (atomic activities). A sport consists of physical activities combined in a fashion unique to that sport. The constituent physical activities and the sport are not fundamentally different. We propose a computational graph architecture for human activity detection based on the readings of a triaxial accelerometer. The resulting model learns 1) a representation of the atomic activities of a sport and 2) to classify physical activities as compositions of the atomic activities. The proposed model, alongside a set of baseline models, was tested on the simultaneous classification of eight physical activities (walking, nordic walking, running, soccer, rowing, bicycling, exercise bicycling and lying down). The proposed model obtained an overall mean accuracy of 77.91% (population) and 95.28% (personalized). The corresponding accuracies of the best baseline model were 73.52% and 90.03%. However, without combining consecutive atomic activities, the corresponding accuracies of the proposed model were 71.52% and 91.22%. The results show that our proposed model is accurate, outperforms the baseline models and learns to combine simple activities into complex activities. Composite activities can be classified as combinations of atomic activities. Our proposed architecture is a basis for accurate models in human activity detection.
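To illustrate the difference between a single fixed-length subset and temporally consecutive subsets, the sketch below cuts a triaxial accelerometer signal into fixed-length windows and then stacks runs of consecutive windows into composite samples. It covers only this input preparation, not the computational graph architecture; the helper names, `window_len`, `group_size` and the synthetic signal are assumptions made for the example.

```python
import numpy as np


def split_into_windows(signal, window_len):
    """Cut a (T, 3) signal into non-overlapping windows of shape (window_len, 3)."""
    n = signal.shape[0] // window_len       # drop the incomplete tail, if any
    return signal[: n * window_len].reshape(n, window_len, signal.shape[1])


def group_consecutive(windows, group_size):
    """Stack every run of `group_size` consecutive windows into one sample."""
    n = windows.shape[0] - group_size + 1
    if n <= 0:
        raise ValueError("not enough windows for the requested group size")
    return np.stack([windows[i : i + group_size] for i in range(n)])


# Synthetic stand-in for 20 accelerometer readings on 3 axes.
signal = np.arange(60, dtype=float).reshape(20, 3)
atomic = split_into_windows(signal, window_len=4)     # one window per atomic unit
composite = group_consecutive(atomic, group_size=3)   # runs of consecutive windows
```

Here `atomic` has shape `(5, 4, 3)` and `composite` has shape `(3, 3, 4, 3)`: each composite sample holds three consecutive windows, which is the kind of input a model needs in order to classify an activity as a sequence of simpler ones.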
