Ariel Ruiz-Garcia

Self-Supervised Transformers for Activity Classification using Ambient Sensors

Nov 22, 2020

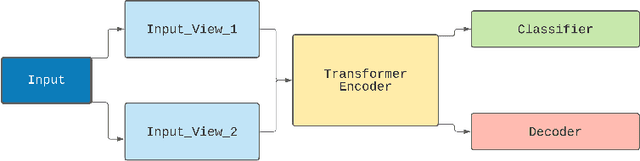

Abstract: Providing care for ageing populations is an onerous task, and as life expectancy estimates continue to rise, the number of people that require senior care is growing rapidly. This paper proposes a methodology based on Transformer Neural Networks to classify the activities of a resident within an ambient-sensor-based environment. We also propose a methodology to pre-train Transformers in a self-supervised manner, as a hybrid autoencoder-classifier model instead of using contrastive loss. The social impact of the research is considered with wider benefits of the approach and next steps for identifying transitions in human behaviour. In recent years there has been an increasing drive for integrating sensor-based technologies within care facilities for data collection. This allows for employing machine learning for many aspects including activity recognition and anomaly detection. Due to the sensitivity of healthcare environments, some methods of data collection used in current research are considered to be intrusive within the senior care industry, including cameras for image-based activity recognition and wearables for activity tracking. Recent studies have also shown that using these methods commonly results in poor data quality due to the lack of resident interest in participating in data gathering. This has led to a focus on ambient sensors, such as binary PIR motion, connected domestic appliances, and electricity and water metering. With consistent ambient data collection, the data is considerably more reliable, presenting the opportunity to perform classification with enhanced accuracy. Therefore, in this research we looked to find an optimal way of using deep learning to classify human activity with ambient sensor data.

Generative Adversarial Stacked Autoencoders

Nov 22, 2020

Abstract: Generative Adversarial Networks (GANs) have become predominant in image generation tasks. Their success is attributed to the training regime, which employs two models: a generator G and a discriminator D that compete in a minimax zero-sum game. Nonetheless, GANs are difficult to train due to their sensitivity to hyperparameters and parameter initialisation, which often leads to vanishing gradients, non-convergence, or mode collapse, where the generator is unable to create samples with different variations. In this work, we propose a novel Generative Adversarial Stacked Convolutional Autoencoder (GASCA) model and a generative adversarial gradual greedy layer-wise learning algorithm designed to train Adversarial Autoencoders in an efficient and incremental manner. Our training approach produces images with significantly lower reconstruction error than vanilla joint training.

* arXiv admin note: text overlap with arXiv:2007.09790

Generative Adversarial Stacked Autoencoders for Facial Pose Normalization and Emotion Recognition

Jul 19, 2020

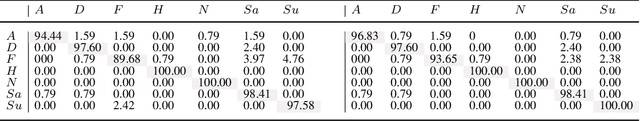

Abstract: In this work, we propose a novel Generative Adversarial Stacked Autoencoder that learns to map facial expressions, with poses of up to ±60 degrees, to an illumination-invariant facial representation of 0 degrees. We accomplish this by using a novel convolutional layer that exploits both local and global spatial information, and a convolutional layer with a reduced number of parameters that exploits facial symmetry. Furthermore, we introduce a generative adversarial gradual greedy layer-wise learning algorithm designed to train Adversarial Autoencoders in an efficient and incremental manner. We demonstrate the efficiency of our method and report state-of-the-art performance on several facial emotion recognition corpora, including one collected in the wild.

Sokoto Coventry Fingerprint Dataset

Jul 24, 2018

Abstract: This paper presents the Sokoto Coventry Fingerprint Dataset (SOCOFing), a biometric fingerprint database designed for academic research purposes. SOCOFing is made up of 6,000 fingerprint images from 600 African subjects. It contains unique attributes such as labels for gender, hand, and finger name, as well as synthetically altered versions with three different levels of alteration for obliteration, central rotation, and z-cut. The dataset is freely available for noncommercial research purposes at: https://www.kaggle.com/ruizgara/socofing
