Arianna Trozze

Large Language Models in Cryptocurrency Securities Cases: Can ChatGPT Replace Lawyers?

Aug 30, 2023

Abstract: Large Language Models (LLMs) could enhance access to the legal system. However, empirical research on their effectiveness in conducting legal tasks is scant. We study securities cases involving cryptocurrencies as one of numerous contexts where AI could support the legal process, studying LLMs' legal reasoning and drafting capabilities. We examine a) whether an LLM can accurately determine which laws are potentially being violated from a fact pattern, and b) whether there is a difference in juror decision-making based on complaints written by a lawyer compared to an LLM. First, we fed fact patterns from real-life cases to GPT-3.5 and evaluated its ability to determine correct potential violations from the scenario and exclude spurious violations. Second, we had mock jurors assess complaints written by the LLM and lawyers. GPT-3.5's legal reasoning skills proved weak, though we expect improvement in future models, particularly given that the violations it suggested tended to be correct (it merely missed additional, correct violations). GPT-3.5 performed better at legal drafting, and jurors' decisions were not statistically significantly associated with the author of the document upon which they based their decisions. Because LLMs cannot satisfactorily conduct legal reasoning tasks, they would be unable to replace lawyers at this stage. However, their drafting skills (though perhaps still inferior to lawyers') could provide access to justice for more individuals by reducing the cost of legal services. Our research is the first to systematically study LLMs' legal drafting and reasoning capabilities in litigation, as well as in securities law and cryptocurrency-related misconduct.
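The reasoning result described in this abstract (suggested violations tended to be correct, but additional correct violations were missed) corresponds to high precision with lower recall. The sketch below illustrates that distinction for a single case. The function name `precision_recall` and the placeholder charge labels are hypothetical and are not taken from the paper, which may score outputs differently.

```python
def precision_recall(suggested, correct):
    """Compare the violations a model suggests with the violations actually alleged.

    precision: share of suggested violations that are correct
               (low precision means spurious violations were suggested)
    recall:    share of correct violations that were suggested
               (low recall means correct violations were missed)
    """
    suggested, correct = set(suggested), set(correct)
    hits = suggested & correct
    precision = len(hits) / len(suggested) if suggested else 0.0
    recall = len(hits) / len(correct) if correct else 0.0
    return precision, recall


# Hypothetical case: four violations were alleged in the real complaint,
# and the model suggested only two of them (and nothing spurious).
correct = {"charge_A", "charge_B", "charge_C", "charge_D"}
suggested = {"charge_A", "charge_B"}

precision, recall = precision_recall(suggested, correct)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In this made-up case every suggestion is correct, yet half of the alleged violations are missed, which mirrors the pattern the abstract reports.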

Detecting DeFi Securities Violations from Token Smart Contract Code with Random Forest Classification

Dec 06, 2021

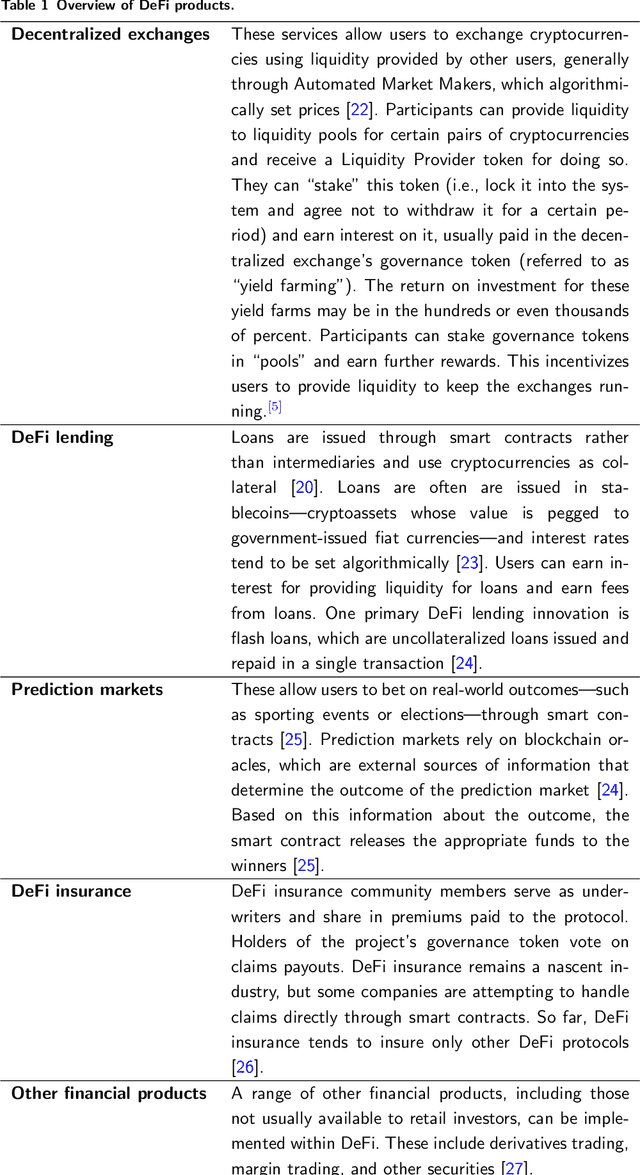

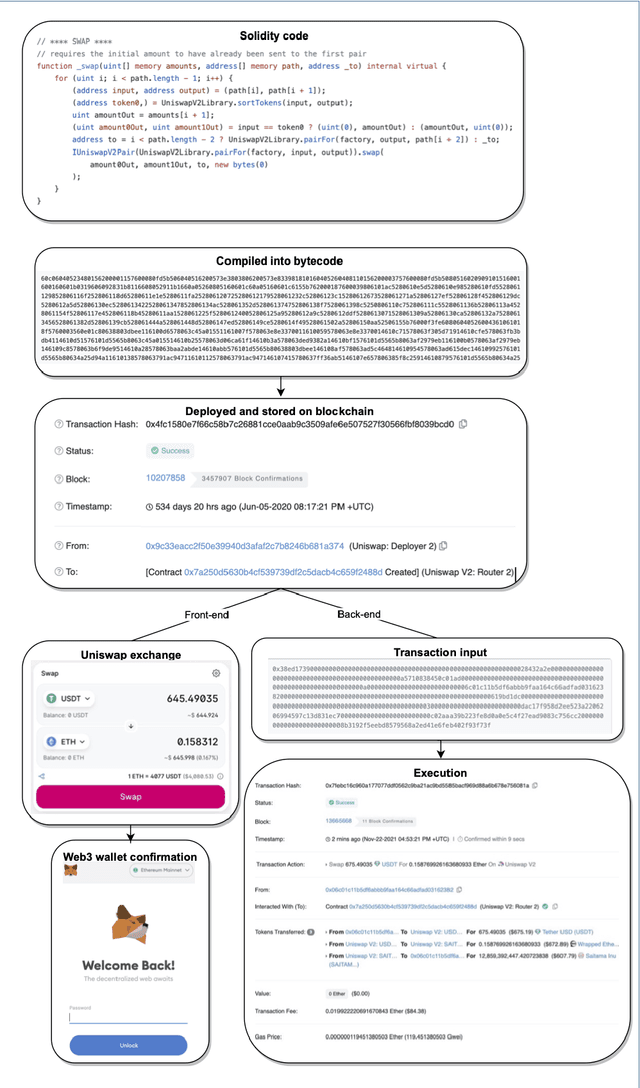

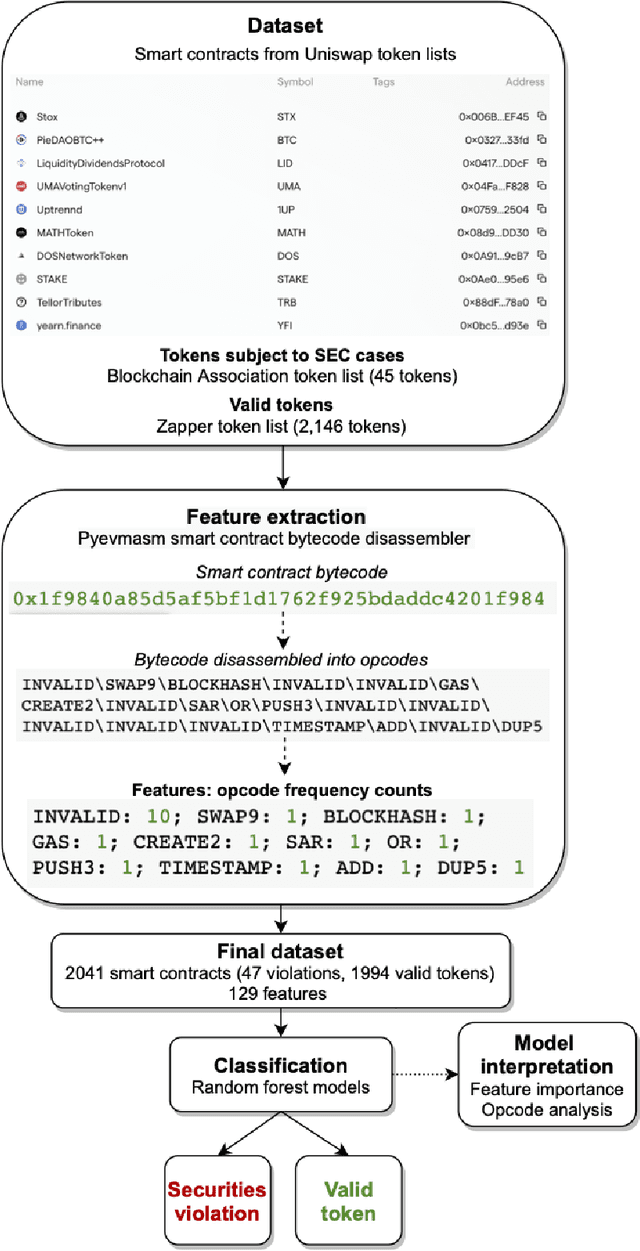

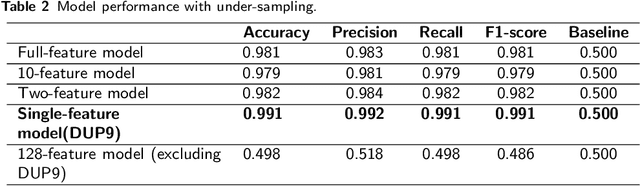

Abstract: Decentralized Finance (DeFi) is a system of financial products and services built and delivered through smart contracts on various blockchains. In the past year, DeFi has gained popularity and market capitalization. However, it has also become an epicenter of cryptocurrency-related crime, in particular, various types of securities violations. The lack of Know Your Customer requirements in DeFi has left governments unsure of how to handle the magnitude of offending in this space. This study aims to address this problem with a machine learning approach to identify DeFi projects potentially engaging in securities violations based on their tokens' smart contract code. We adapt prior work on detecting specific types of securities violations across Ethereum more broadly, building a random forest classifier based on features extracted from DeFi projects' tokens' smart contract code. The final classifier achieves a 99.1% F1-score. Such high performance is surprising for any classification problem; however, from further feature-level analysis, we find that a single feature makes this a highly detectable problem. Another contribution of our study is a new dataset, comprising (a) a verified ground truth dataset for tokens involved in securities violations and (b) a set of valid tokens from a DeFi aggregator which conducts due diligence on the projects it lists. This paper further discusses the use of our model by prosecutors in enforcement efforts and connects its potential use to the wider legal context.
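For readers unfamiliar with the F1-score reported above, it is the harmonic mean of precision and recall for the positive class. The sketch below computes it from binary labels in plain Python. The labels are made-up illustrative data (1 = token flagged as a violation, 0 = valid token), not results from the study, and the helper name `f1_score` is an assumption of this example.

```python
def f1_score(y_true, y_pred, positive=1):
    """F1 = 2 * precision * recall / (precision + recall) for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical ground truth and classifier output for eight tokens.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

score = f1_score(y_true, y_pred)
print(f"F1 = {score:.3f}")
```

Because F1 ignores true negatives, it stays informative when valid tokens heavily outnumber violating ones.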
