Arda Inceoglu

Multimodal Detection and Identification of Robot Manipulation Failures

May 08, 2023

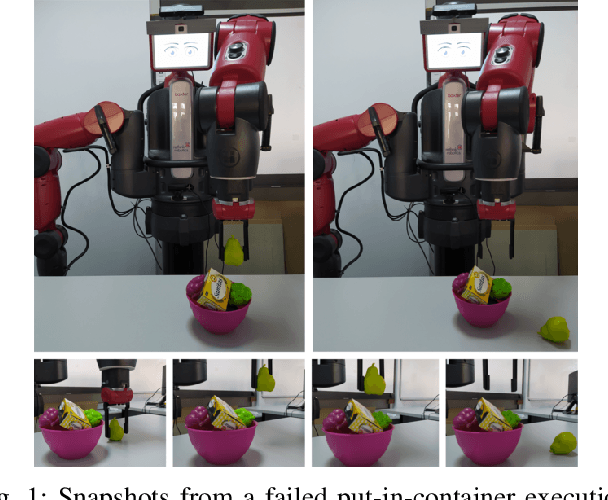

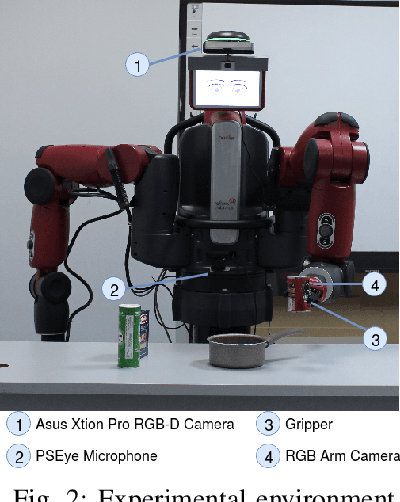

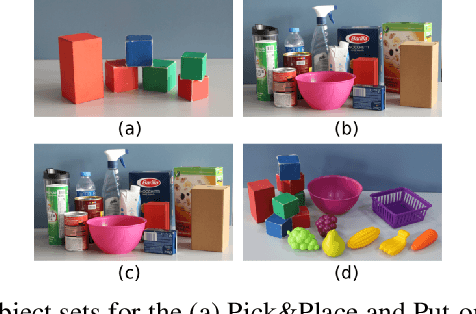

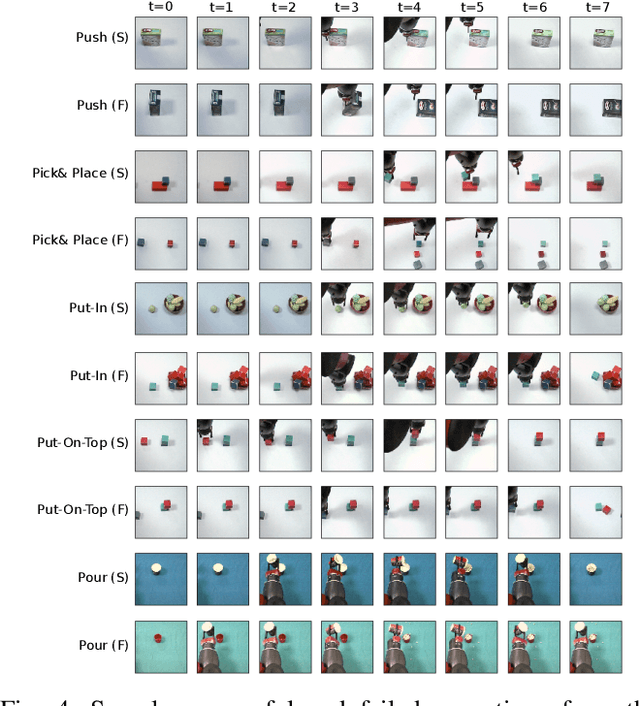

Abstract: An autonomous service robot should be able to interact with its environment safely and robustly without requiring human assistance. Unstructured environments are challenging for robots since the exact prediction of outcomes is not always possible. Even when the robot behaviors are well-designed, the unpredictable nature of physical robot-object interaction may prevent success in object manipulation. Therefore, execution of a manipulation action may result in an undesirable outcome involving accidents or damage to the objects or environment. Situation awareness becomes important in such cases to enable the robot to (i) maintain the integrity of both itself and the environment, (ii) recover from failed tasks in the short term, and (iii) learn to avoid failures in the long term. For this purpose, robot executions should be continuously monitored, and failures should be detected and classified appropriately. In this work, we focus on detecting and classifying both manipulation and post-manipulation phase failures using the same exteroception setup. We cover a diverse set of failure types for primary tabletop manipulation actions. In order to detect these failures, we propose FINO-Net [1], a deep multimodal sensor fusion based classifier network. The proposed network accurately detects and classifies failures from raw sensory data without any prior knowledge. In this work, we use our extended FAILURE dataset [1] with 99 new multimodal manipulation recordings and annotate them with their corresponding failure types. FINO-Net achieves 0.87 failure detection and 0.80 failure classification F1 scores. Experimental results show that the proposed architecture is also appropriate for real-time use.
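For readers unfamiliar with the metric reported above, the following is a minimal sketch of how a binary F1 score is computed from predicted and true labels. The function name `f1_score` and the label lists are hypothetical and purely illustrative; they are not taken from the FINO-Net code or the FAILURE dataset, and the abstract does not state which averaging scheme is used for the multi-class classification score.

```python
def f1_score(y_true, y_pred, positive=1):
    """Binary F1: harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        # No true positives: precision and recall are both zero (or undefined).
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical labels for illustration only: 1 = failure, 0 = success.
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
score = f1_score(y_true, y_pred)
print(f"F1 = {score:.2f}")
```

In this toy example there are 4 true positives, 1 false positive, and 1 false negative, so precision and recall are both 0.8 and so is the F1 score. Unlike plain accuracy, F1 ignores true negatives, so it is less easily inflated when one class dominates.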

FINO-Net: A Deep Multimodal Sensor Fusion Framework for Manipulation Failure Detection

Nov 11, 2020

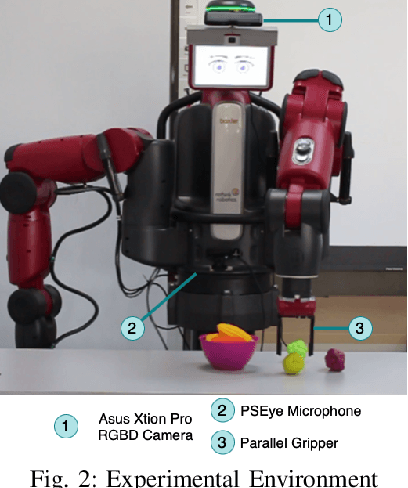

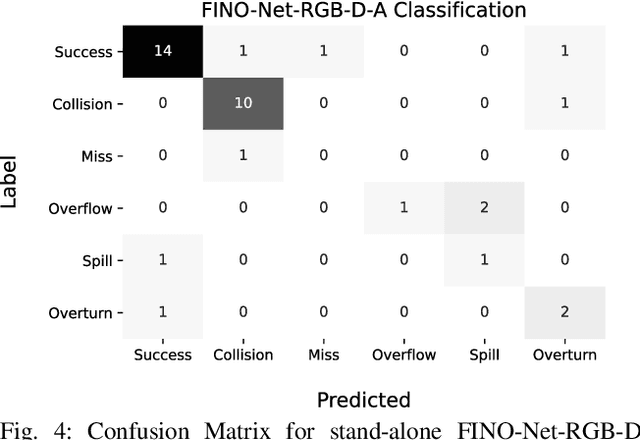

Abstract: Safe manipulation in unstructured environments for service robots is a challenging problem. A failure detection system is needed to monitor and detect unintended outcomes. We propose FINO-Net, a novel multimodal sensor fusion based deep neural network to detect and identify manipulation failures. We also introduce a multimodal dataset containing 229 real-world manipulation recordings made with a Baxter robot. Our network combines RGB, depth and audio readings to effectively detect and classify failures. Results indicate that fusing RGB with depth and audio modalities significantly improves performance. FINO-Net achieves 98.60% detection and 87.31% classification accuracy on our novel dataset. Code and data are publicly available at https://github.com/ardai/fino-net.
