Ao Guo

RadarRGBD A Multi-Sensor Fusion Dataset for Perception with RGB-D and mmWave Radar

May 21, 2025

Abstract: Multi-sensor fusion has significant potential in perception tasks for both indoor and outdoor environments. Especially under challenging conditions such as adverse weather and low-light environments, the combined use of millimeter-wave radar and RGB-D sensors has shown distinct advantages. However, existing multi-sensor datasets in the fields of autonomous driving and robotics often lack high-quality millimeter-wave radar data. To address this gap, we present a new multi-sensor dataset: RadarRGBD. This dataset includes RGB-D data, millimeter-wave radar point clouds, and raw radar matrices, covering various indoor and outdoor scenes, as well as low-light environments. Compared to existing datasets, RadarRGBD employs higher-resolution millimeter-wave radar and provides raw data, offering a new research foundation for the fusion of millimeter-wave radar and visual sensors. Furthermore, to tackle the noise and gaps in depth maps captured by Kinect V2 due to occlusions and mismatches, we fine-tune an open-source relative depth estimation framework, incorporating the absolute depth information from the dataset for depth supervision. We also introduce pseudo-relative depth scale information to further optimize the global depth scale estimation. Experimental results demonstrate that the proposed method effectively fills in missing regions in sensor data. Our dataset and related documentation will be publicly available at: https://github.com/song4399/RadarRGBD.

Team Flow at DRC2023: Building Common Ground and Text-based Turn-taking in a Travel Agent Spoken Dialogue System

Dec 21, 2023

Abstract: At the Dialogue Robot Competition 2023 (DRC2023), which was held to improve the capability of dialogue robots, our team developed a system that could build common ground and take more natural turns based on user utterance texts. Our system generated queries for sightseeing spot searches using the common ground and engaged in dialogue while waiting for user comprehension.

Team Flow at DRC2022: Pipeline System for Travel Destination Recommendation Task in Spoken Dialogue

Oct 18, 2022

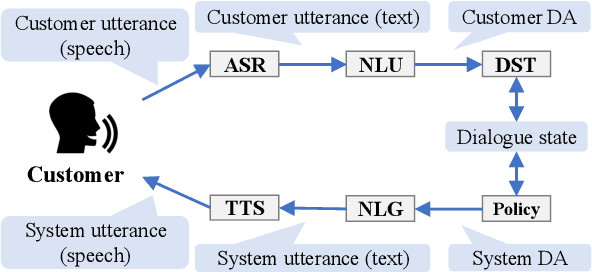

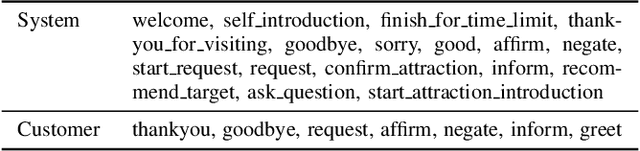

Abstract: To improve the interactive capabilities of a dialogue system, e.g., to adapt to different customers, the Dialogue Robot Competition (DRC2022) was held. As one of the teams, we built a dialogue system with a pipeline structure containing four modules. The natural language understanding (NLU) and natural language generation (NLG) modules were GPT-2 based models, and the dialogue state tracking (DST) and policy modules were designed on the basis of hand-crafted rules. After the preliminary round of the competition, we found that low variation in the NLU training examples and failed recommendations caused by the policy were probably the main reasons for the system's limited performance.
