Antonio Sze-To

Searching for Pneumothorax in X-Ray Images Using Autoencoded Deep Features

Feb 11, 2021

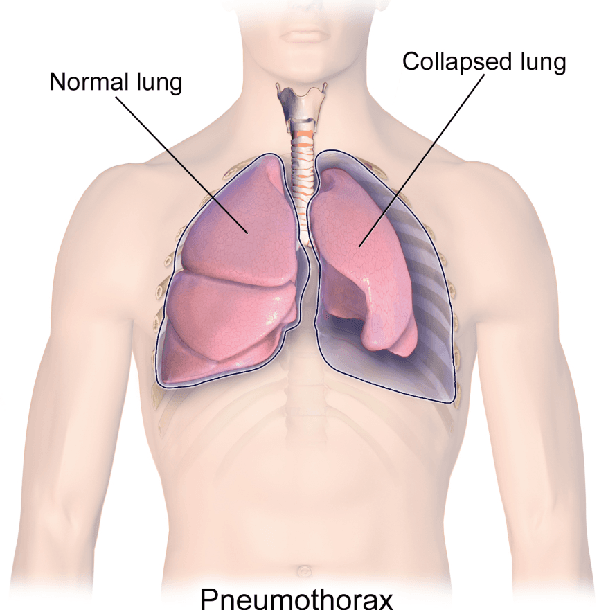

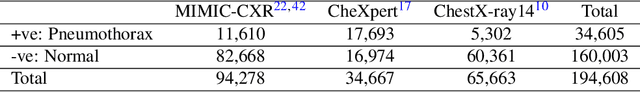

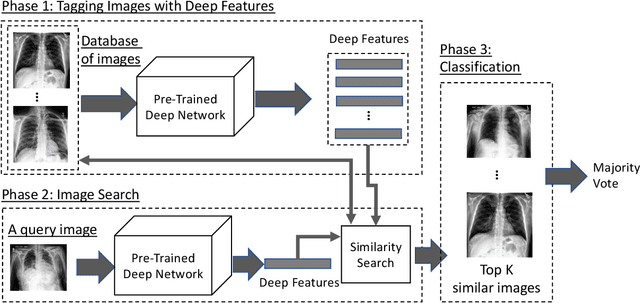

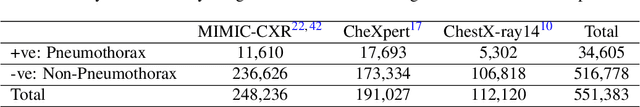

Abstract: Fast diagnosis and treatment of pneumothorax, a collapsed or dropped lung, is crucial to avoid fatalities. Pneumothorax is typically detected on a chest X-ray image through visual inspection by experienced radiologists. However, the detection rate is quite low. Therefore, there is a strong need for automated detection systems to assist radiologists. Despite the high accuracy levels generally reported for deep learning classifiers in many applications, they may not be useful in clinical practice because large numbers of high-quality labelled images are lacking and their outputs are difficult to interpret. Alternatively, searching the archive of past cases for matching images may serve as a 'virtual second opinion' by giving access to the metadata of matched cases that have an established diagnosis. To use image search as a triaging/diagnosis tool, all chest X-ray images must first be tagged with identifiers, i.e., deep features. Then, given a query chest X-ray image, the majority vote among the top k retrieved images can provide a more explainable output. While image search can be clinically more viable, its detection performance needs to be investigated at a scale closer to real-world practice. We combined 3 public datasets to assemble a repository with more than 550,000 chest X-ray images. We developed the Autoencoding Thorax Net (AutoThorax-Net for short) for image search in chest radiographs; it compresses three inputs: the left chest side, the flipped right side, and the entire chest image. Experimental results show that image search based on AutoThorax-Net features can achieve high identification rates, providing a path towards real-world deployment. We achieved an AUC of 92% for a semi-automated search in 194,608 images (pneumothorax and normal) and an AUC of 82% for a fully automated search in 551,383 images (normal, pneumothorax and many other chest diseases).
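The three inputs named in this abstract (left chest side, flipped right side, entire image) can be illustrated with a minimal sketch. The abstract does not say how the sides are cropped, so the split at the vertical midline, the function name `three_views`, and the list-of-rows image format are all assumptions made for illustration, not the authors' preprocessing.

```python
def three_views(image):
    """Return (left half, mirrored right half, whole image) for a 2-D image.

    `image` is a list of equal-length rows. Splitting at the vertical
    midline is an assumption; the abstract does not specify the crop.
    """
    width = len(image[0])
    mid = width // 2
    # Left half of every row.
    left = [row[:mid] for row in image]
    # Right half of every row, mirrored horizontally so that it has the
    # same orientation as the left half. For odd widths the middle
    # column is left out of both halves.
    right_flipped = [row[width - mid:][::-1] for row in image]
    # Copy of the full image.
    whole = [row[:] for row in image]
    return left, right_flipped, whole


# Toy 2x4 "image" with distinct pixel values.
toy = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
]
left, right_flipped, whole = three_views(toy)
```

Mirroring one half means both lung fields are presented to a feature extractor in the same orientation, which is one plausible reason for the flip described in the abstract.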

Searching for Pneumothorax in Half a Million Chest X-Ray Images

Jul 30, 2020

Abstract: Pneumothorax, a collapsed or dropped lung, is a fatal condition typically detected on a chest X-ray by an experienced radiologist. Due to a shortage of such experts, automated detection systems based on deep neural networks have been developed. Nevertheless, applying such systems in practice remains a challenge. These systems mostly compute a single probability as output, which may not be enough for diagnosis. In contrast, content-based medical image retrieval (CBIR) systems, such as image search, can assist clinicians with diagnosis by enabling them to compare the case they are examining with previous (already diagnosed) cases. However, such approaches have rarely been studied. In this study, we explored the use of image search to classify pneumothorax among chest X-ray images. All chest X-ray images were first tagged with deep pretrained features, which were obtained from existing deep learning models. Given a query chest X-ray image, the majority vote of the top K retrieved images was then used as a classifier, so that similar cases from the archive of past cases are provided alongside the probability output. In our experiments, 551,383 chest X-ray images were obtained from three large, recently released public datasets. Using 10-fold cross-validation, we show that image search on deep pretrained features achieved promising results compared to those obtained by traditional classifiers trained on the same features. To the best of our knowledge, this is the first study to demonstrate that deep pretrained features can be used for CBIR of pneumothorax in half a million chest X-ray images.
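The search-then-vote classifier described in this abstract can be sketched as follows. This is a minimal illustration, not the study's implementation: the Euclidean distance, the function name `search_and_vote`, and the toy two-dimensional feature vectors are assumptions, since the abstract does not name the distance measure or the feature dimensionality.

```python
from collections import Counter
import math


def search_and_vote(query, archive, k=3):
    """Classify `query` by majority vote over its k nearest archive entries.

    `archive` is a list of (feature_vector, label) pairs. Returns the
    winning label, the fraction of the top-k votes it received, and the
    retrieved cases themselves, which can be shown to the clinician.
    Euclidean distance is an assumption made for this sketch.
    """
    ranked = sorted(archive, key=lambda item: math.dist(query, item[0]))
    top_k = ranked[:k]
    votes = Counter(label for _, label in top_k)
    label, count = votes.most_common(1)[0]
    return label, count / len(top_k), top_k


# Hypothetical archive of already diagnosed cases.
archive = [
    ([0.0, 0.0], "normal"),
    ([0.1, 0.0], "normal"),
    ([1.0, 1.0], "pneumothorax"),
    ([0.9, 1.0], "pneumothorax"),
    ([1.0, 0.9], "pneumothorax"),
]
label, fraction, top_k = search_and_vote([0.95, 0.95], archive, k=3)
```

Returning the retrieved cases together with the vote fraction reflects the point made in the abstract: the clinician sees similar past cases, not only a probability.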

Binary Codes for Tagging X-Ray Images via Deep De-Noising Autoencoders

Apr 24, 2016

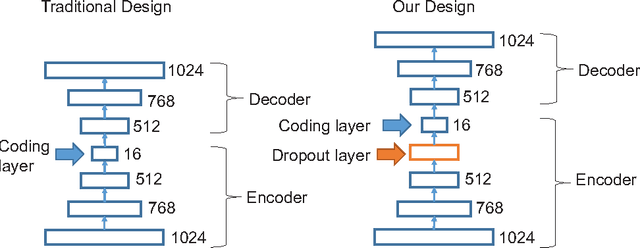

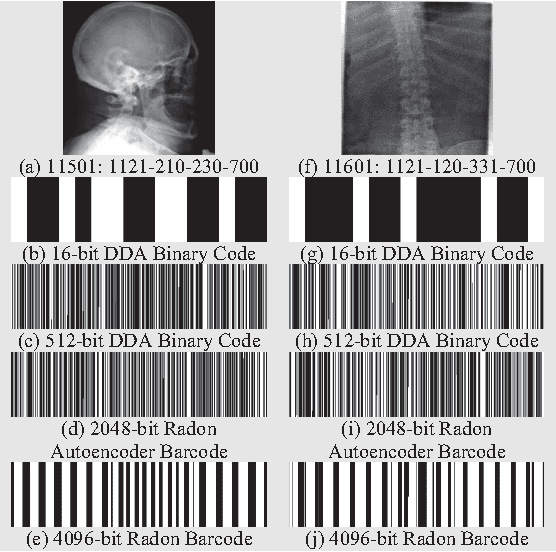

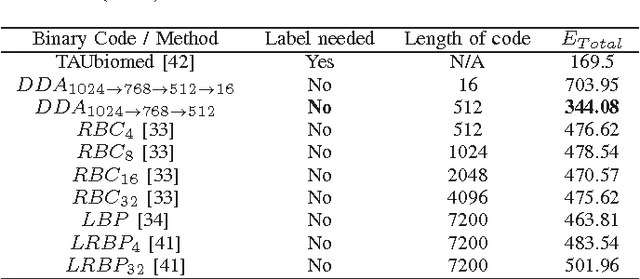

Abstract: A Content-Based Image Retrieval (CBIR) system, which identifies similar medical images based on a query image, can help clinicians reach a more accurate diagnosis. The recent CBIR research trend favors the construction and use of binary codes to represent images. Deep architectures can learn the non-linear relationship among image pixels adaptively, allowing the automatic learning of high-level features from raw pixels. However, most of them require class labels, which are expensive to obtain, particularly for medical images. The methods that do not need class labels use a deep autoencoder for binary hashing, but the code construction involves a specific training algorithm and an ad-hoc regularization technique. In this study, we explored using a deep de-noising autoencoder (DDA), with a new unsupervised training scheme using only backpropagation and dropout, to hash images into binary codes. We conducted experiments on more than 14,000 X-ray images. Using class labels only for evaluating the retrieval results, we constructed a 16-bit DDA and a 512-bit DDA independently. Compared to other unsupervised methods, we obtained the lowest total error by using the 512-bit codes for retrieval via exhaustive search, and achieved a 9.27-fold speed-up with the 16-bit codes while keeping a comparable total error. We found that our new training scheme could reduce the total retrieval error significantly, by 21.9%. To further boost the image retrieval performance, we developed Radon Autoencoder Barcodes (RABC), which are learned from the Radon projections of images using a de-noising autoencoder. Experimental results demonstrated superior retrieval performance when RABC was combined with DDA binary codes.
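Retrieval with binary codes via exhaustive search, as mentioned in this abstract, can be sketched as below. The abstract does not say how autoencoder activations become bits or which distance is used, so thresholding at 0.5, Hamming distance, and all function names here are assumptions for illustration; 4-bit codes stand in for the 16-bit and 512-bit codes of the study.

```python
def binarize(activations, threshold=0.5):
    """Pack real-valued code-layer activations into an integer bit code.

    Thresholding at 0.5 is an assumption made for this sketch.
    """
    code = 0
    for a in activations:
        code = (code << 1) | (1 if a >= threshold else 0)
    return code


def hamming(a, b):
    """Number of bit positions in which two codes differ."""
    return bin(a ^ b).count("1")


def exhaustive_search(query_code, archive_codes, top=2):
    """Return indices of the `top` archive codes closest to the query."""
    order = sorted(
        range(len(archive_codes)),
        key=lambda i: hamming(query_code, archive_codes[i]),
    )
    return order[:top]


# Hypothetical code-layer activations for three archived images.
codes = [
    binarize([0.9, 0.1, 0.8, 0.2]),  # 1010
    binarize([0.9, 0.2, 0.7, 0.9]),  # 1011
    binarize([0.1, 0.9, 0.1, 0.9]),  # 0101
]
query_code = binarize([0.8, 0.3, 0.9, 0.1])  # 1010
nearest = exhaustive_search(query_code, codes, top=2)
```

Shorter codes make each comparison cheaper because fewer bits are compared per image, which is consistent with the speed-up the abstract reports for the 16-bit codes.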
