Antonio R. Porras

BabyNet: Reconstructing 3D faces of babies from uncalibrated photographs

Mar 11, 2022

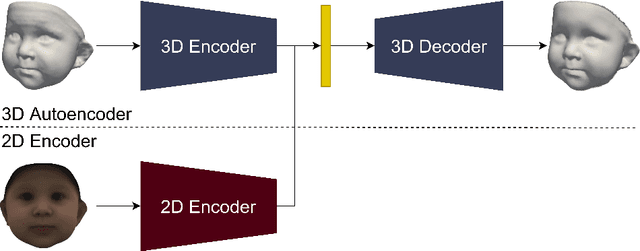

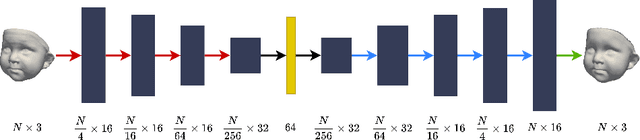

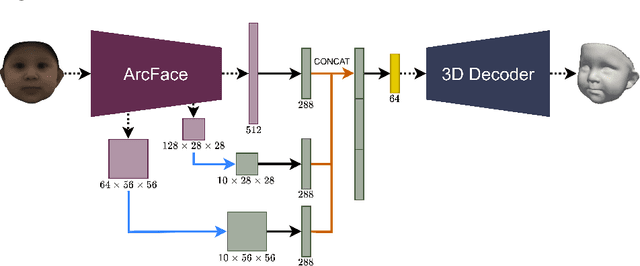

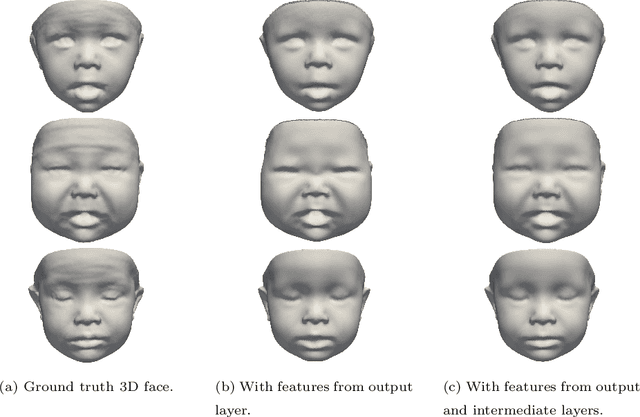

Abstract: We present BabyNet, a 3D face reconstruction system that recovers the 3D facial geometry of babies from uncalibrated photographs. Since the 3D facial geometry of babies differs substantially from that of adults, baby-specific facial reconstruction systems are needed. BabyNet consists of two stages: 1) a 3D graph convolutional autoencoder that learns a latent space of the baby 3D facial shape; and 2) a 2D encoder that maps photographs to the 3D latent space based on representative features extracted using transfer learning. In this way, using the pre-trained 3D decoder, we can recover a 3D face from 2D images. We evaluate BabyNet and show that 1) methods based on adult datasets cannot model the 3D facial geometry of babies, which proves the need for a baby-specific method, and 2) BabyNet outperforms classical model-fitting methods even when a baby-specific 3D morphable model, such as BabyFM, is used.

Region Proposal Networks with Contextual Selective Attention for Real-Time Organ Detection

Dec 26, 2018

Abstract: State-of-the-art methods for object detection use region proposal networks (RPN) to hypothesize object location. These networks simultaneously predict object bounding boxes and *objectness* scores at each location in the image. Unlike the natural images for which RPN algorithms were originally designed, most medical images are acquired following standard protocols, so organs in the image are typically at a similar location and possess similar geometrical characteristics (e.g. scale, aspect ratio). Therefore, medical image acquisition protocols hold critical localization and geometric information that can be incorporated for faster and more accurate detection. This paper presents a novel attention mechanism for the detection of organs by incorporating imaging protocol information. Our selective attention approach (i) effectively shrinks the search space inside the feature map, (ii) appends useful localization information to the hypothesized proposal so that the detection architecture learns where to look for each organ, and (iii) modifies the pyramid of regression references in the RPN by incorporating organ- and modality-specific information, which results in additional time reduction. We evaluated the proposed framework on a dataset of 768 chest X-ray images obtained from a diverse set of sources. Our results demonstrate superior performance for the detection of the lung field compared to the state of the art, both in detection accuracy, with an improvement of $>7\%$ in Dice score, and in processing time, which is reduced by $27.53\%$ due to fewer hypotheses.
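For readers unfamiliar with the Dice score reported above, the following is a minimal sketch of how it can be computed between a detected region and a ground-truth region. The abstract does not say how the authors computed it, so the choice of rasterized bounding boxes is an assumption made here for illustration, and the helper names `dice_score` and `box_mask` are hypothetical.

```python
def dice_score(pred, truth):
    """Dice coefficient 2|A n B| / (|A| + |B|) for two binary masks (nested lists)."""
    flat_pred = [bool(v) for row in pred for v in row]
    flat_truth = [bool(v) for row in truth for v in row]
    if len(flat_pred) != len(flat_truth):
        raise ValueError("masks must have the same shape")
    intersection = sum(p and t for p, t in zip(flat_pred, flat_truth))
    total = sum(flat_pred) + sum(flat_truth)
    # Convention (an assumption): two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


def box_mask(height, width, box):
    """Rasterize a box (row0, col0, row1, col1), end-exclusive, into a binary mask."""
    r0, c0, r1, c1 = box
    return [
        [1 if r0 <= r < r1 and c0 <= c < c1 else 0 for c in range(width)]
        for r in range(height)
    ]


# Toy example: a ground-truth box and a detection shifted by one pixel.
truth = box_mask(10, 10, (2, 2, 8, 8))      # 6 x 6 = 36 pixels
detected = box_mask(10, 10, (3, 3, 9, 9))   # 6 x 6 = 36 pixels, 5 x 5 overlap
score = dice_score(detected, truth)         # 2 * 25 / (36 + 36)
```

A score of 1 means the two regions coincide exactly and 0 means they do not overlap, so an improvement in Dice score reflects tighter agreement between detected and reference regions.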
