Antonio Gomez

Speaker Diarization and Identification from Single-Channel Classroom Audio Recording Using Virtual Microphones

Jul 01, 2022

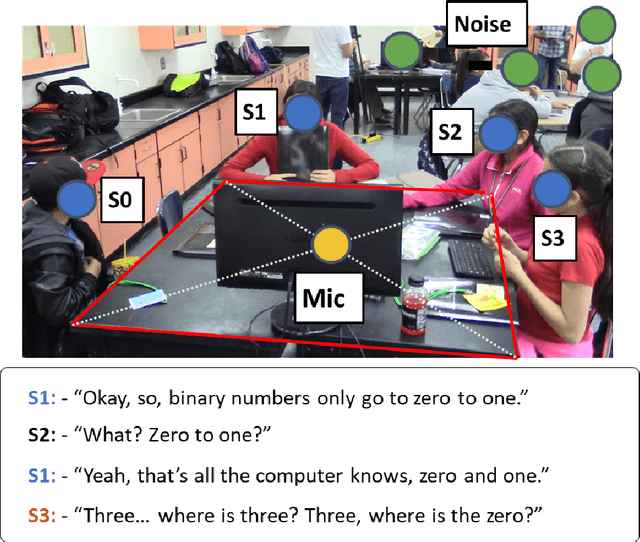

Abstract: Speaker identification in noisy audio recordings, specifically those from collaborative learning environments, can be extremely challenging. Individual students talking in small groups must be distinguished from other students talking at the same time. To solve the problem, we assume the use of a single microphone per student group, without access to any large existing datasets for training. This dissertation proposes a method of speaker identification using cross-correlation patterns associated with an array of virtual microphones centered around the physical microphone. The virtual microphones are simulated using approximate speaker geometry observed from a video recording. The patterns are constructed from estimates of the room impulse responses for each virtual microphone. The correlation patterns are then used to identify the speakers. The proposed method is validated with classroom audio recordings and shown to substantially outperform diarization services provided by Google Cloud and Amazon AWS.
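As a rough illustration of the cross-correlation idea mentioned in the abstract, the sketch below estimates the delay between a reference signal and a delayed copy of it, as might be observed at a second (virtual) microphone position. It is not the dissertation's method, which builds its patterns from estimated room impulse responses; the function name `estimate_delay` and the synthetic white-noise signal are assumptions made only for this example.

```python
import numpy as np

def estimate_delay(reference, delayed):
    """Return the lag (in samples) at which `delayed` best aligns with `reference`.

    Hypothetical helper for illustration; not taken from the dissertation.
    """
    # Full cross-correlation: entry k corresponds to sum_n delayed[n + k] * reference[n].
    corr = np.correlate(delayed, reference, mode="full")
    # Lags covered by mode="full": -(len(reference) - 1) ... len(delayed) - 1.
    lags = np.arange(-(len(reference) - 1), len(delayed))
    return int(lags[np.argmax(corr)])

# Synthetic example: white noise and a copy delayed by 25 samples.
rng = np.random.default_rng(0)
reference = rng.standard_normal(1000)
true_delay = 25
delayed = np.concatenate([np.zeros(true_delay), reference[:-true_delay]])

estimated = estimate_delay(reference, delayed)
print(estimated)
```

With a known sampling rate and speed of sound, such a lag could be converted into a path-length difference, which is one way speaker geometry and correlation peaks relate to each other.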

Bilingual Speech Recognition by Estimating Speaker Geometry from Video Data

Dec 26, 2021

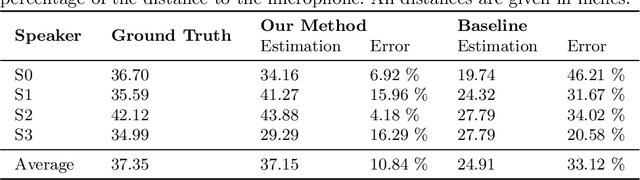

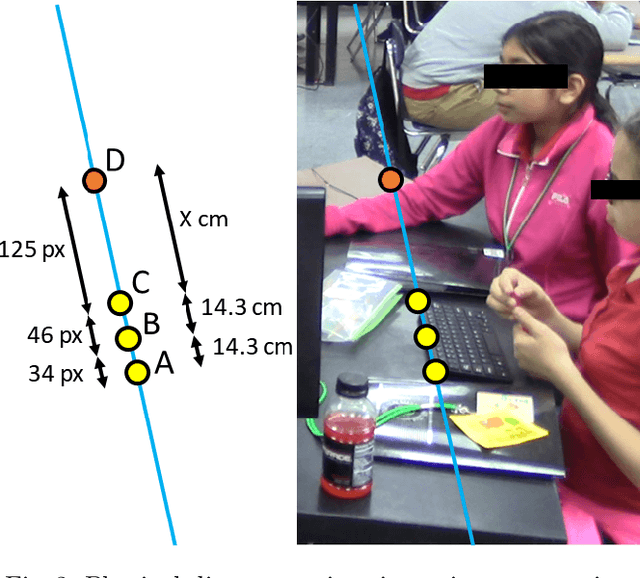

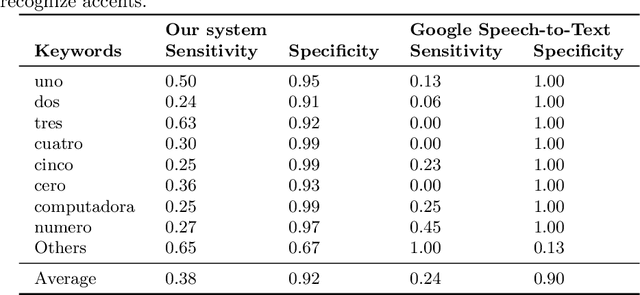

Abstract: Speech recognition is very challenging in student learning environments that are characterized by significant cross-talk and background noise. To address this problem, we present a bilingual speech recognition system that uses an interactive video analysis system to estimate the 3D speaker geometry for realistic audio simulations. We demonstrate the use of our system in generating a complex audio dataset that contains significant cross-talk and background noise and approximates real-life classroom recordings. We then test our proposed system with real-life recordings. In estimating the distance of the speakers from the microphone, our interactive video analysis system obtained a lower average error rate of 10.83%, compared to 33.12% for a baseline approach. Our proposed system gave an accuracy of 27.92%, which is 1.5% better than Google Speech-to-text on the same dataset. For 9 important keywords, our approach gave an average sensitivity of 38% compared to 24% for Google Speech-to-text, while both methods maintained high average specificity of 90% and 92%.

* 11 pages, 6 figures
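For readers unfamiliar with the keyword metrics quoted in the abstract, the sketch below shows how sensitivity and specificity are computed from detection counts. The counts are made up for illustration and are not taken from the paper; the function names are likewise hypothetical.

```python
def sensitivity(true_positives, false_negatives):
    """Fraction of actual keyword occurrences that were detected."""
    return true_positives / (true_positives + false_negatives)

def specificity(true_negatives, false_positives):
    """Fraction of non-keyword segments correctly left unflagged."""
    return true_negatives / (true_negatives + false_positives)

# Made-up counts for a single keyword (not data from the paper).
tp, fn = 3, 1
tn, fp = 8, 2

print(sensitivity(tp, fn))  # 0.75
print(specificity(tn, fp))  # 0.8
```

An average over several keywords, as reported in the abstract, would be the mean of these per-keyword values.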
