Antonio Di Cecco

GloNets: Globally Connected Neural Networks

Nov 27, 2023

Abstract: Deep learning architectures suffer from depth-related performance degradation, limiting the effective depth of neural networks. Approaches like ResNet are able to mitigate this, but they do not completely eliminate the problem. We introduce Globally Connected Neural Networks (GloNet), a novel architecture overcoming depth-related issues, designed to be superimposed on any model, enhancing its depth without increasing complexity or reducing performance. With GloNet, the network's head uniformly receives information from all parts of the network, regardless of their level of abstraction. This enables GloNet to self-regulate information flow during training, reducing the influence of less effective deeper layers, and allowing for stable training irrespective of network depth. This paper details GloNet's design, its theoretical basis, and a comparison with existing similar architectures. Experiments show GloNet's self-regulation ability and resilience to depth-related learning challenges, like performance degradation. Our findings suggest GloNet as a strong alternative to traditional architectures like ResNets.
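As a rough illustration of the idea that the head receives information from every part of the network, the following minimal sketch runs a stack of blocks in sequence and feeds the head an aggregate of all block outputs. It assumes the aggregate is a plain sum and that each block is a small fully connected ReLU layer; these choices, and every name in the code (`dense_relu`, `init_layer`, `globally_connected_forward`), are illustrative assumptions and are not taken from the paper.

```python
import random


def dense_relu(x, weights, biases):
    """Apply one fully connected layer followed by ReLU."""
    return [
        max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, biases)
    ]


def init_layer(n_in, n_out, rng):
    """Create small random weights and zero biases (hypothetical initializer)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def globally_connected_forward(x, blocks, head):
    """Run the blocks in sequence; the head sees the SUM of all block outputs.

    Assumption: all blocks share the same output width, so their outputs
    can be added element-wise.
    """
    width = len(blocks[0][1])
    aggregate = [0.0] * width
    h = x
    for weights, biases in blocks:
        h = dense_relu(h, weights, biases)
        aggregate = [a + hi for a, hi in zip(aggregate, h)]
    head_weights, head_bias = head
    return sum(w * a for w, a in zip(head_weights, aggregate)) + head_bias


if __name__ == "__main__":
    rng = random.Random(0)
    blocks = [init_layer(3, 4, rng), init_layer(4, 4, rng), init_layer(4, 4, rng)]
    head = ([rng.uniform(-0.5, 0.5) for _ in range(4)], 0.1)
    print(globally_connected_forward([0.2, -0.4, 0.9], blocks, head))
```

Under these assumptions, a block whose output is driven to zero contributes nothing to the aggregate, so the network behaves exactly like a shallower one. This is one simple way to picture how the influence of less effective deeper layers could be reduced.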

A Novel Resampling Technique for Imbalanced Dataset Optimization

Dec 30, 2020

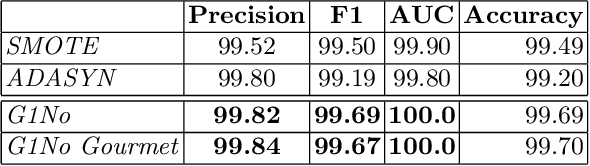

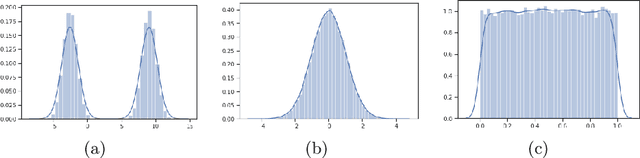

Abstract: Despite the enormous amount of data, particular events of interest can still be quite rare. Classification of rare events is a common problem in many domains, such as fraudulent transactions, malware traffic analysis and network intrusion detection. Many studies have addressed malware detection using machine learning approaches on various datasets, but as far as we know only the MTA-KDD'19 dataset has the peculiarity of updating the representative set of malicious traffic on a daily basis. This daily updating is the added value of the dataset, but it also exposes the RRw-Optimized MTA-KDD'19 dataset to a potential class imbalance problem. We capture the difficulties of class distribution in real datasets by considering four types of minority class examples: safe, borderline, rare and outliers. In this work, we developed two versions of the Generative Silhouette Resampling 1-Nearest Neighbour (G1Nos) oversampling algorithm for dealing with the class imbalance problem. The first module of the G1Nos algorithms performs a silhouette-coefficient-based instance selection, identifying the critical threshold of the Imbalance Degree (ID); the second module generates synthetic samples using a SMOTE-like oversampling algorithm. The G1Nos algorithms then balance the classes to re-establish the proportions between the two classes of the dataset used. The experimental results show that our oversampling algorithms perform better than the other two state-of-the-art methodologies on all the metrics considered.
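To make the phrase "SMOTE-like oversampling" concrete, here is a minimal sketch of generic SMOTE-style interpolation toward the single nearest minority neighbour. It is not the G1Nos algorithm: the silhouette-based instance selection and the Imbalance Degree threshold are omitted, and the function names and parameters (`nearest_neighbour`, `smote_like_oversample`, `n_new`, `seed`) are hypothetical.

```python
import math
import random


def nearest_neighbour(point, candidates):
    """Return the candidate closest to `point` (Euclidean), excluding `point` itself."""
    others = [c for c in candidates if c is not point]
    return min(others, key=lambda c: math.dist(point, c))


def smote_like_oversample(minority, n_new, seed=0):
    """Create `n_new` synthetic minority samples.

    Each synthetic sample lies on the segment between a randomly chosen
    minority sample and its nearest minority neighbour.
    Assumption: `minority` holds at least two samples of equal dimension.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbour = nearest_neighbour(base, minority)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic
```

To re-establish the proportions between two classes, one would typically set `n_new` to the difference between the majority and minority class sizes.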

Dataset Optimization Strategies for Malware Traffic Detection

Sep 23, 2020

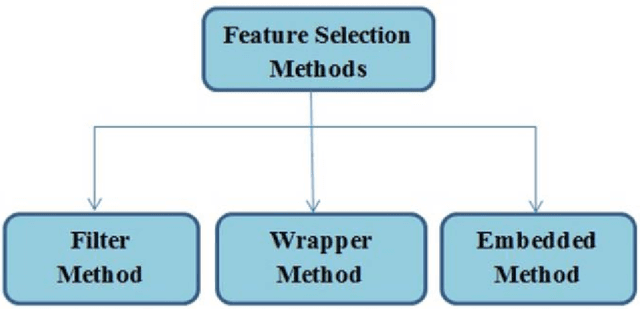

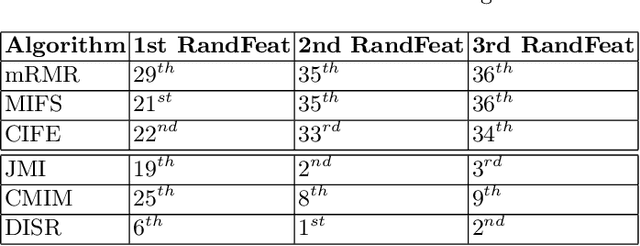

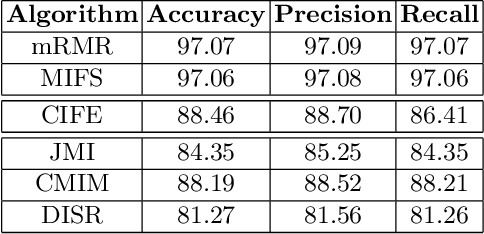

Abstract: Machine learning is rapidly becoming one of the most important technologies for malware traffic detection, since the continuous evolution of malware requires constant adaptation and the ability to generalize. However, network traffic datasets are usually oversized and contain redundant and irrelevant information, which may dramatically increase the computational cost and decrease the accuracy of most classifiers, with the risk of introducing further noise. We propose two novel dataset optimization strategies which exploit and combine several state-of-the-art approaches in order to achieve an effective optimization of the network traffic datasets used to train malware detectors. The first approach is a feature selection technique based on mutual information measures and sensibility enhancement. The second is a dimensionality reduction technique based on autoencoders. Both approaches have been experimentally applied to the MTA-KDD'19 dataset, and the optimized results have been evaluated and compared using a Multi-Layer Perceptron as the machine learning model for malware detection.
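As a small illustration of feature selection driven by mutual information, the sketch below scores each discrete feature by its mutual information with the class label and ranks the features from most to least informative. It is a generic textbook computation, not the technique proposed in the paper: the sensibility enhancement step is not modelled, continuous features would first need to be discretized, and the function names (`mutual_information`, `rank_features`) are hypothetical.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Mutual information (in bits) between two equally long discrete sequences."""
    n = len(xs)
    count_x = Counter(xs)
    count_y = Counter(ys)
    count_xy = Counter(zip(xs, ys))
    mi = 0.0
    for (x, y), c in count_xy.items():
        # p(x, y) * log2( p(x, y) / (p(x) * p(y)) ), written with raw counts
        mi += (c / n) * math.log2(c * n / (count_x[x] * count_y[y]))
    return mi


def rank_features(rows, labels):
    """Return feature indices sorted by decreasing mutual information with the labels."""
    n_features = len(rows[0])
    scores = [
        (mutual_information([row[j] for row in rows], labels), j)
        for j in range(n_features)
    ]
    return [j for _, j in sorted(scores, key=lambda t: (-t[0], t[1]))]
```

A selection step would then keep only the top-ranked indices, for example `rank_features(rows, labels)[:k]` for some chosen `k`.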
