Annabelle Blangero

Agentic RAG with Knowledge Graphs for Complex Multi-Hop Reasoning in Real-World Applications

Jul 22, 2025

Abstract:Conventional Retrieval-Augmented Generation (RAG) systems enhance Large Language Models (LLMs) but often fall short on complex queries, delivering limited, extractive answers and struggling with multiple targeted retrievals or navigating intricate entity relationships. This is a critical gap in knowledge-intensive domains. We introduce INRAExplorer, an agentic RAG system for exploring the scientific data of INRAE (France's National Research Institute for Agriculture, Food and Environment). INRAExplorer employs an LLM-based agent with a multi-tool architecture to dynamically engage a rich knowledge base, through a comprehensive knowledge graph derived from open access INRAE publications. This design empowers INRAExplorer to conduct iterative, targeted queries, retrieve exhaustive datasets (e.g., all publications by an author), perform multi-hop reasoning, and deliver structured, comprehensive answers. INRAExplorer serves as a concrete illustration of enhancing knowledge interaction in specialized fields.
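To make the idea of multi-hop reasoning over a knowledge graph concrete, here is a minimal sketch in Python. It is not INRAExplorer's implementation: the triples, the relation names (`wrote`, `about`), and the helper functions `build_index` and `multi_hop` are all hypothetical, chosen only to show how an exhaustive lookup ("all publications by an author") and a two-hop query ("all topics that author has written about") can be expressed as successive relation traversals.

```python
from collections import defaultdict

# Toy (subject, relation, object) triples. Every identifier here is invented
# for illustration and does not come from the INRAE knowledge graph.
TRIPLES = [
    ("author:A", "wrote", "pub:1"),
    ("author:A", "wrote", "pub:2"),
    ("author:B", "wrote", "pub:2"),
    ("pub:1", "about", "topic:soil"),
    ("pub:2", "about", "topic:water"),
]


def build_index(triples):
    """Map each (subject, relation) pair to the set of objects it points to."""
    index = defaultdict(set)
    for subj, rel, obj in triples:
        index[(subj, rel)].add(obj)
    return index


def multi_hop(index, start, relations):
    """Follow a chain of relations from `start`, one hop per relation.

    Returns every node reachable at the end of the chain, sorted for
    deterministic output. An unknown start node yields an empty list.
    """
    frontier = {start}
    for rel in relations:
        frontier = {
            obj
            for node in frontier
            for obj in index.get((node, rel), ())
        }
    return sorted(frontier)


INDEX = build_index(TRIPLES)

# One hop: exhaustive retrieval of an author's publications.
publications = multi_hop(INDEX, "author:A", ["wrote"])

# Two hops: author -> publications -> topics.
topics = multi_hop(INDEX, "author:A", ["wrote", "about"])

print(publications)
print(topics)
```

In an agentic setting, a function like `multi_hop` would presumably be one tool among several that the LLM agent can call; how the actual system exposes its graph queries is not specified in the abstract.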

Mitigating Text Toxicity with Counterfactual Generation

May 16, 2024

Abstract:Toxicity mitigation consists in rephrasing text in order to remove offensive or harmful meaning. Neural natural language processing (NLP) models have been widely used to target and mitigate textual toxicity. However, existing methods fail to detoxify text while preserving the initial non-toxic meaning at the same time. In this work, we propose to apply counterfactual generation methods from the eXplainable AI (XAI) field to target and mitigate textual toxicity. In particular, we perform text detoxification by applying local feature importance and counterfactual generation methods to a toxicity classifier distinguishing between toxic and non-toxic texts. We carry out text detoxification through counterfactual generation on three datasets and compare our approach to three competitors. Automatic and human evaluations show that recently developed NLP counterfactual generators can mitigate toxicity accurately while better preserving the meaning of the initial text as compared to classical detoxification methods. Finally, we take a step back from using automated detoxification tools, and discuss how to manage the polysemous nature of toxicity and the risk of malicious use of detoxification tools. This work is the first to bridge the gap between counterfactual generation and text detoxification and paves the way towards more practical application of XAI methods.

ClimateQ&A: Bridging the gap between climate scientists and the general public

Mar 18, 2024

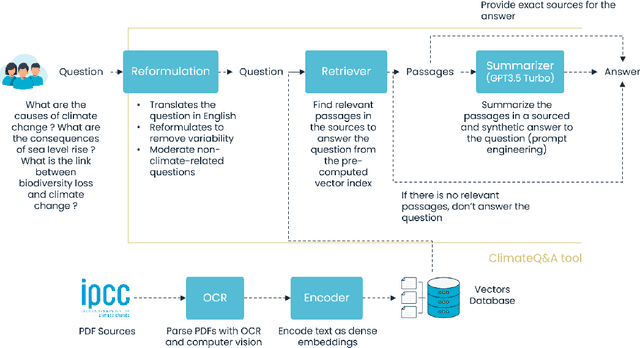

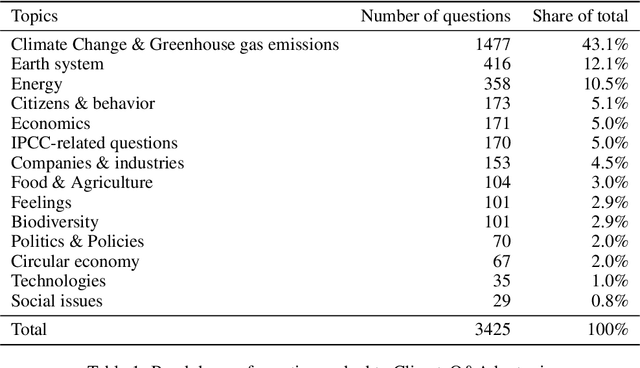

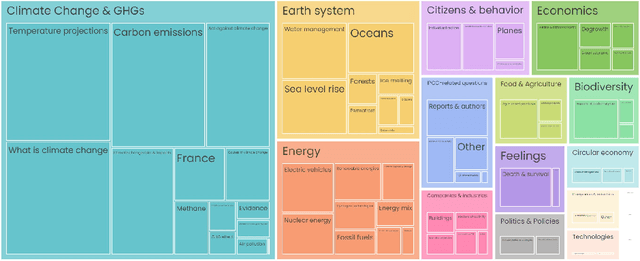

Abstract:This research paper investigates public views on climate change and biodiversity loss by analyzing questions asked to the ClimateQ&A platform. ClimateQ&A is a conversational agent that uses LLMs to respond to queries based on over 14,000 pages of scientific literature from the IPCC and IPBES reports. Launched online in March 2023, the tool has gathered over 30,000 questions, mainly from a French audience. Its chatbot interface allows for the free formulation of questions related to nature*. While its main goal is to make nature science more accessible, it also allows for the collection and analysis of questions and their themes. Unlike traditional surveys involving closed questions, this novel method offers a fresh perspective on individual interrogations about nature. Running NLP clustering algorithms on a sample of 3,425 questions, we find that a significant 25.8% inquire about how climate change and biodiversity loss will affect them personally (e.g., where they live or vacation, their consumption habits) and the specific impacts of their actions on nature (e.g., transportation or food choices). This suggests that traditional methods of surveying may not identify all existing knowledge gaps, and that relying solely on IPCC and IPBES reports may not address all individual inquiries about climate and biodiversity, potentially affecting public understanding and action on these issues. *we use 'nature' as an umbrella term for 'climate change' and 'biodiversity loss'

Evaluating self-attention interpretability through human-grounded experimental protocol

Mar 27, 2023

Abstract:Attention mechanisms have played a crucial role in the development of complex architectures such as Transformers in natural language processing. However, Transformers remain hard to interpret and are considered as black-boxes. This paper aims to assess how attention coefficients from Transformers can help in providing interpretability. A new attention-based interpretability method called CLaSsification-Attention (CLS-A) is proposed. CLS-A computes an interpretability score for each word based on the attention coefficient distribution related to the part specific to the classification task within the Transformer architecture. A human-grounded experiment is conducted to evaluate and compare CLS-A to other interpretability methods. The experimental protocol relies on the capacity of an interpretability method to provide explanation in line with human reasoning. Experiment design includes measuring reaction times and correct response rates by human subjects. CLS-A performs comparably to usual interpretability methods regarding average participant reaction time and accuracy. The lower computational cost of CLS-A compared to other interpretability methods and its availability by design within the classifier make it particularly interesting. Data analysis also highlights the link between the probability score of a classifier prediction and adequate explanations. Finally, our work confirms the relevancy of the use of CLS-A and shows to which extent self-attention contains rich information to explain Transformer classifiers.
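The abstract does not spell out how CLS-A turns attention coefficients into word scores, so the following Python sketch shows only one plausible reading. It assumes that the "part specific to the classification task" is the attention row of a `[CLS]` token, that heads are averaged, and that special tokens are dropped before normalizing. The function name `cls_attention_scores`, the token strings, and the attention values are all hypothetical.

```python
def cls_attention_scores(attention, tokens, cls_index=0,
                         special_tokens=("[CLS]", "[SEP]")):
    """Score each word by the attention the classification token pays to it.

    `attention` is a list of heads, each a square matrix (list of rows) where
    attention[h][i][j] is the weight token i assigns to token j.
    Assumption (not taken from the paper): scores are the head-averaged
    [CLS] row, restricted to non-special tokens and renormalized to sum to 1.
    """
    n_heads = len(attention)
    averaged = [
        sum(head[cls_index][j] for head in attention) / n_heads
        for j in range(len(tokens))
    ]
    kept = [
        (tok, weight)
        for tok, weight in zip(tokens, averaged)
        if tok not in special_tokens
    ]
    total = sum(weight for _, weight in kept)
    if total == 0:
        return [(tok, 0.0) for tok, _ in kept]
    return [(tok, weight / total) for tok, weight in kept]


# Invented example: 2 heads over 4 tokens. Only the [CLS] row (row 0) matters
# for the score, so the remaining rows are filled with uniform weights.
tokens = ["[CLS]", "great", "movie", "[SEP]"]
uniform = [0.25, 0.25, 0.25, 0.25]
attention = [
    [[0.1, 0.6, 0.2, 0.1], uniform, uniform, uniform],
    [[0.1, 0.4, 0.4, 0.1], uniform, uniform, uniform],
]

scores = cls_attention_scores(attention, tokens)
for token, score in scores:
    print(f"{token}: {score:.3f}")
```

Because the attention weights are already produced during the classifier's forward pass, a score of this kind needs no extra model calls, which is consistent with the low computational cost the abstract attributes to CLS-A.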
