Anjie Peng

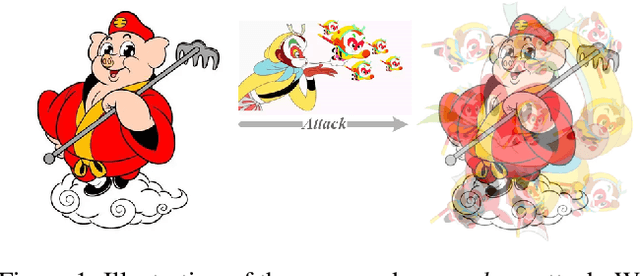

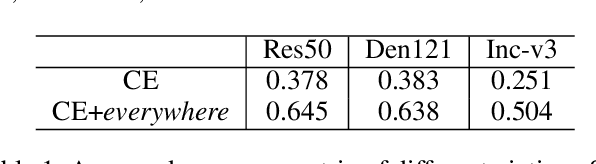

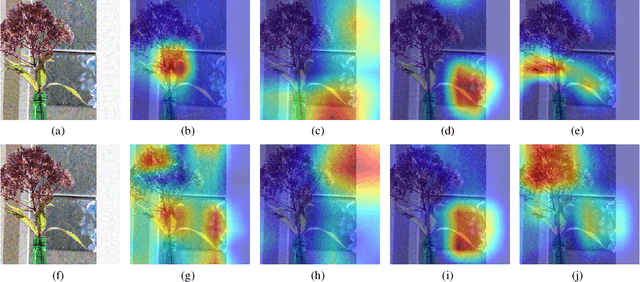

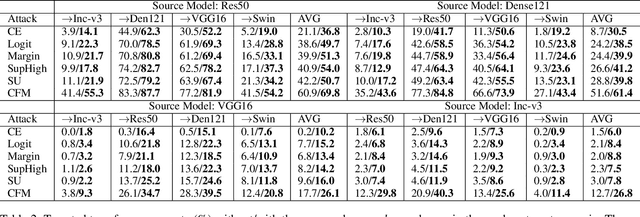

Everywhere Attack: Attacking Locally and Globally to Boost Targeted Transferability

Jan 01, 2025

Abstract: The transferability of adversarial examples (AEs) refers to the phenomenon that AEs crafted on one surrogate model can also fool other models. Despite remarkable progress in untargeted transferability, its targeted counterpart remains challenging. This paper proposes an everywhere scheme to boost targeted transferability. Our idea is to attack a victim image both globally and locally. Whereas previous works optimize a single high-confidence target in the image, we optimize 'an army of targets', one in every local image region. Specifically, we split a victim image into non-overlapping blocks and jointly mount a targeted attack on each block. This strategy mitigates transfer failures caused by attention inconsistency between surrogate and victim models and thus yields stronger transferability. Our approach is method-agnostic, meaning it can be easily combined with existing transferable attacks for even higher transferability. Extensive experiments on ImageNet demonstrate that the proposed approach universally improves state-of-the-art targeted attacks by a clear margin; e.g., the transferability of the widely adopted Logit attack improves by 28.8%-300%. We also evaluate the crafted AEs on a real-world platform, Google Cloud Vision. The results further support the superiority of the proposed method.
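The block-splitting idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the even grid, the equal weighting of blocks, and the simple sum of a global and a local term are all assumptions made for illustration, and `targeted_loss` stands in for whatever loss the underlying attack uses.

```python
from typing import Callable, List

Image = List[List[float]]  # toy single-channel image as rows of pixel values


def split_into_blocks(image: Image, rows: int, cols: int) -> List[Image]:
    """Split an image into rows x cols non-overlapping blocks (row-major order)."""
    height, width = len(image), len(image[0])
    if height % rows or width % cols:
        raise ValueError("image size must be divisible by the grid size")
    block_h, block_w = height // rows, width // cols
    blocks = []
    for r in range(rows):
        for c in range(cols):
            block = [
                row[c * block_w:(c + 1) * block_w]
                for row in image[r * block_h:(r + 1) * block_h]
            ]
            blocks.append(block)
    return blocks


def everywhere_loss(
    image: Image,
    rows: int,
    cols: int,
    targeted_loss: Callable[[Image], float],
) -> float:
    """Global loss on the whole image plus the mean loss over local blocks.

    The equal weighting and the plain sum are assumptions, not the paper's recipe.
    """
    blocks = split_into_blocks(image, rows, cols)
    local = sum(targeted_loss(b) for b in blocks) / len(blocks)
    return targeted_loss(image) + local


def mean_pixel(img: Image) -> float:
    """Hypothetical stand-in for a targeted loss: the mean pixel value."""
    return sum(sum(row) for row in img) / (len(img) * len(img[0]))


toy_image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
toy_blocks = split_into_blocks(toy_image, 2, 2)
toy_total = everywhere_loss(toy_image, 2, 2, mean_pixel)
```

In a real attack the loss would be computed by a surrogate network and differentiated with respect to the perturbation; the sketch only shows how every local region contributes its own term to the objective.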

Two Heads Are Better Than One: Averaging along Fine-Tuning to Improve Targeted Transferability

Dec 30, 2024

Abstract: Although targeted attacks take much longer to optimize than untargeted attacks, their transferability is still far from satisfactory. Recent studies reveal that fine-tuning an existing adversarial example (AE) in feature space can efficiently boost its targeted transferability. However, existing fine-tuning schemes only utilize the endpoint and ignore the valuable information in the fine-tuning trajectory. Noting that the vanilla fine-tuning trajectory tends to oscillate around the periphery of a flat region of the loss surface, we propose averaging over the fine-tuning trajectory to pull the crafted AE towards a more centered region. We compare the proposed method with existing fine-tuning schemes by integrating them with state-of-the-art targeted attacks in various attacking scenarios. Experimental results confirm the superiority of the proposed method in boosting targeted transferability. The code is available at github.com/zengh5/Avg_FT.
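As a rough illustration of trajectory averaging, the sketch below keeps a running mean of the iterates produced during fine-tuning. The function name is hypothetical, and the choices of which iterates to include and of uniform weighting are assumptions; the released code at the URL above is the authoritative version.

```python
from typing import Iterable, List, Sequence


def average_trajectory(iterates: Iterable[Sequence[float]]) -> List[float]:
    """Return the element-wise running mean of a sequence of iterates."""
    avg: List[float] = []
    count = 0
    for x in iterates:
        count += 1
        if count == 1:
            avg = [float(v) for v in x]
        else:
            # Incremental mean update: avg_k = avg_{k-1} + (x_k - avg_{k-1}) / k
            avg = [a + (v - a) / count for a, v in zip(avg, x)]
    if count == 0:
        raise ValueError("trajectory is empty")
    return avg


# Toy trajectory that oscillates around the origin, as a stand-in for
# iterates bouncing around the edge of a flat loss region.
toy_trajectory = [[1.0, -1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, 1.0]]
toy_center = average_trajectory(toy_trajectory)
```

In the toy trajectory, the endpoint sits on the edge of the oscillation while the average lands near its center, which is the intuition the abstract describes.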

Enhancing targeted transferability via feature space fine-tuning

Jan 13, 2024

Abstract: Adversarial examples (AEs) have been extensively studied due to their potential for privacy protection and for inspiring robust neural networks. Yet, making a targeted AE transferable across unknown models remains challenging. In this paper, to alleviate the overfitting dilemma common in AEs crafted by existing simple iterative attacks, we propose fine-tuning them in the feature space. Specifically, starting with an AE generated by a baseline attack, we encourage the features conducive to the target class and discourage the features conducive to the original class in a middle layer of the source model. Extensive experiments demonstrate that only a few iterations of fine-tuning can boost existing attacks' targeted transferability nontrivially and universally. Our results also verify that simple iterative attacks can yield comparable or even better transferability than resource-intensive methods, which rest on training target-specific classifiers or generators with additional data. The code is available at: github.com/zengh5/TA_feature_FT.
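One way to picture "encourage target features, discourage original features" is a weighted score over a middle-layer feature vector, sketched below. This is a guess at the general shape, not the paper's formulation: the function name, the per-feature importance weights (which could, for example, come from gradients of class logits with respect to the features), and the balance factor `beta` are all hypothetical.

```python
from typing import Sequence


def feature_ft_objective(
    features: Sequence[float],
    target_weights: Sequence[float],
    original_weights: Sequence[float],
    beta: float = 1.0,
) -> float:
    """Score to maximize: target-aligned activation minus original-aligned activation.

    All three vectors share the same length; beta (hypothetical) balances the terms.
    """
    if not (len(features) == len(target_weights) == len(original_weights)):
        raise ValueError("features and weights must have the same length")
    toward_target = sum(w * f for w, f in zip(target_weights, features))
    toward_original = sum(w * f for w, f in zip(original_weights, features))
    return toward_target - beta * toward_original


# Toy features: channel 0 is assumed to matter for the target class,
# channel 2 for the original class.
score_before = feature_ft_objective([1.0, 2.0, 3.0], [1, 0, 0], [0, 0, 1])
score_after = feature_ft_objective([3.0, 2.0, 1.0], [1, 0, 0], [0, 0, 1])
```

Fine-tuning would then take a few gradient steps on the perturbation to raise this score; the released code at the URL above shows how the authors actually do it.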

A comparison study of CNN denoisers on PRNU extraction

Dec 06, 2021

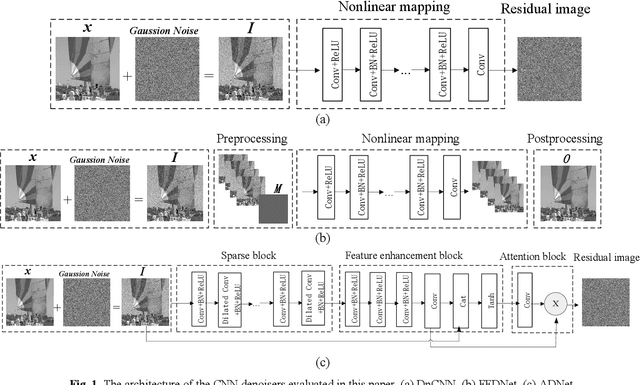

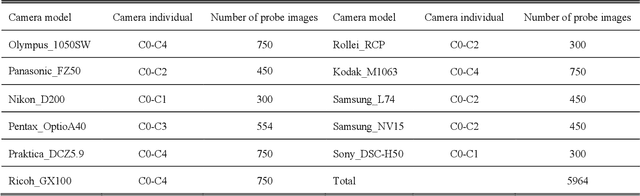

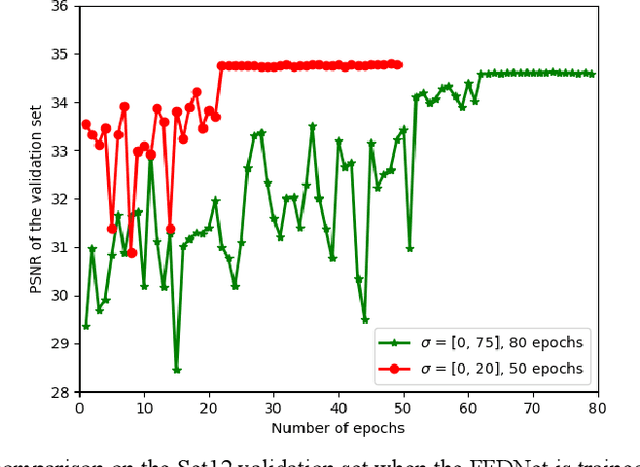

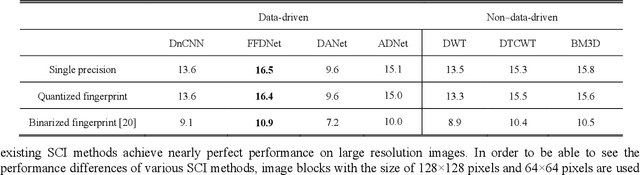

Abstract: The performance of the sensor-based camera identification (SCI) method relies heavily on the denoising filter used to estimate Photo-Response Non-Uniformity (PRNU). Despite various attempts to enhance the quality of the extracted PRNU, SCI still suffers from unsatisfactory performance on low-resolution images and from high computational demand. Leveraging the similarity between PRNU estimation and image denoising, we take advantage of the latest achievements of Convolutional Neural Network (CNN)-based denoisers for PRNU extraction. In this paper, a comparative evaluation of such CNN denoisers on SCI performance is carried out on the public "Dresden Image Database". Our findings are two-fold. On the one hand, both PRNU extraction and image denoising separate noise from the image content. Hence, SCI can benefit from recent CNN denoisers if they are carefully trained. On the other hand, the goals and scenarios of PRNU extraction and image denoising differ, since one optimizes the quality of the noise and the other optimizes the image quality. Carefully tailored training is therefore needed when CNN denoisers are used for PRNU estimation. Alternative strategies for training data preparation and loss function design are analyzed theoretically and evaluated experimentally. We point out that feeding the CNNs with image-PRNU pairs and training them with a correlation-based loss function result in the best PRNU estimation performance. To facilitate further studies of SCI, we also propose a minimum-loss camera fingerprint quantization scheme, with which we save the fingerprints as image files in PNG format. Furthermore, we make the quantized fingerprints of the cameras from the "Dresden Image Database" publicly available.
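A correlation-based loss can take several forms; the sketch below uses one common choice, one minus the Pearson correlation between an estimated and a reference PRNU pattern, each flattened to a list. The abstract does not specify the exact loss, so the function name and this particular definition are assumptions.

```python
import math
from typing import Sequence


def correlation_loss(estimate: Sequence[float], reference: Sequence[float]) -> float:
    """Return 1 - Pearson correlation; 0 means perfectly correlated patterns."""
    n = len(estimate)
    if n == 0 or n != len(reference):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_e = sum(estimate) / n
    mean_r = sum(reference) / n
    cov = sum((e - mean_e) * (r - mean_r) for e, r in zip(estimate, reference))
    var_e = sum((e - mean_e) ** 2 for e in estimate)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    denom = math.sqrt(var_e * var_r)
    if denom == 0.0:
        raise ValueError("correlation is undefined for a constant input")
    return 1.0 - cov / denom


# A scaled copy of the reference is perfectly correlated; a reversed one is not.
loss_aligned = correlation_loss([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
loss_reversed = correlation_loss([3.0, 2.0, 1.0], [2.0, 4.0, 6.0])
```

Unlike a pixel-wise error, this loss ignores the scale and offset of the estimate, which suits a setting where the extracted noise pattern is later matched by correlation.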
