Anindya Paul

Synthetic Dataset Generation for Adversarial Machine Learning Research

Jul 21, 2022

Abstract: Existing adversarial example research focuses on digitally inserted perturbations on top of existing natural image datasets. This construction of adversarial examples is not realistic because it may be difficult, or even impossible, for an attacker to deploy such an attack in the real world due to sensing and environmental effects. To better understand adversarial examples against cyber-physical systems, we propose approximating the real world through simulation. In this paper, we describe our synthetic dataset generation tool, which enables scalable collection of synthetic datasets with realistic adversarial examples. We use the CARLA simulator to collect such a dataset and demonstrate simulated attacks that undergo the same environmental transforms and processing as real-world images. Our tools have been used to collect datasets to help evaluate the efficacy of adversarial examples, and can be found at https://github.com/carla-simulator/carla/pull/4992.

Towards resilient machine learning for ransomware detection

Dec 21, 2018

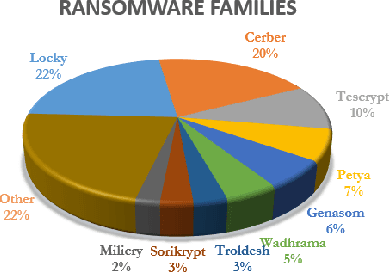

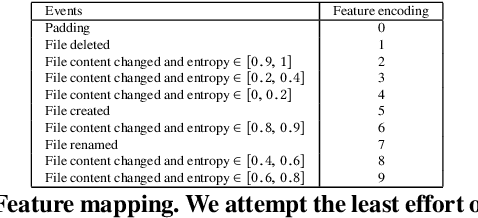

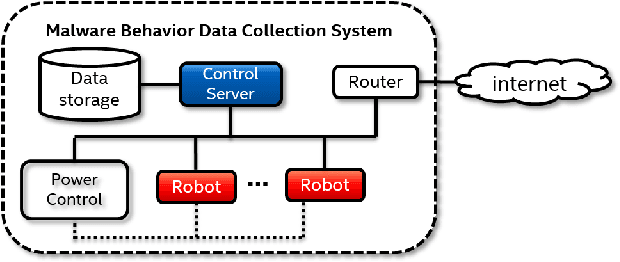

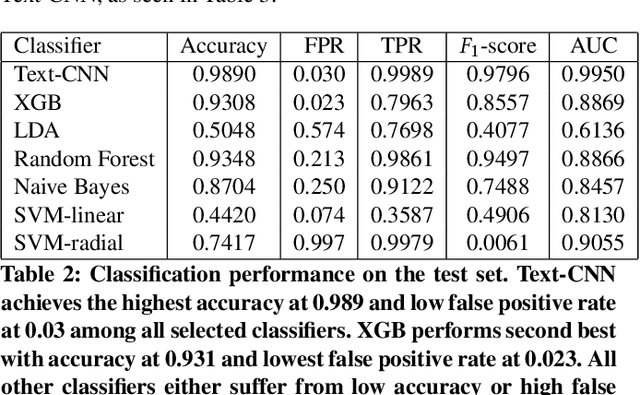

Abstract: There has been a surge of interest in using machine learning (ML) to automatically detect malware through its dynamic behavior. These approaches have achieved significantly higher detection rates and lower false positive rates at large scale compared with traditional malware analysis methods. ML in threat detection has proven to be a good cop for guarding platform security. However, it is imperative to ask: is ML-powered security resilient enough? In this paper, we juxtapose the resiliency and trustworthiness of ML algorithms for security through a case study that evaluates the resiliency of ransomware detection using a generative adversarial network (GAN). In this case study, we propose using a GAN to automatically produce dynamic features that exhibit generalized malicious behaviors and can reduce the efficacy of black-box ransomware classifiers. We examine the quality of the GAN-generated samples by comparing their statistical similarity to real ransomware and benign software. Further, we investigate the latent subspace where the GAN-generated samples lie and explore why such samples cause a certain class of ransomware classifiers to degrade in performance. Our focus is to emphasize the defense improvements needed in ML-based approaches for ransomware detection before deployment in the wild. Our results and discoveries should pose relevant questions for defenders, such as how ML models can be made more resilient for robust enforcement of security objectives.
