Andrey Gorodetskiy

Model-based Policy Optimization using Symbolic World Model

Jul 18, 2024

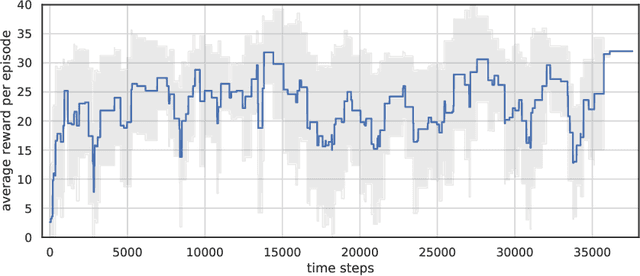

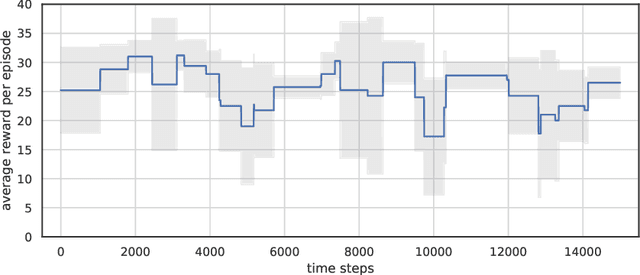

Abstract:The application of learning-based control methods in robotics presents significant challenges. One is that model-free reinforcement learning algorithms use observation data with low sample efficiency. To address this challenge, a prevalent approach is model-based reinforcement learning, which involves employing an environment dynamics model. We suggest approximating transition dynamics with symbolic expressions, which are generated via symbolic regression. Approximation of a mechanical system with a symbolic model has fewer parameters than approximation with neural networks, which can potentially lead to higher accuracy and quality of extrapolation. We use a symbolic dynamics model to generate trajectories in model-based policy optimization to improve the sample efficiency of the learning algorithm. We evaluate our approach across various tasks within simulated environments. Our method demonstrates superior sample efficiency in these tasks compared to model-free and model-based baseline methods.

Delta Schema Network in Model-based Reinforcement Learning

Jul 08, 2020

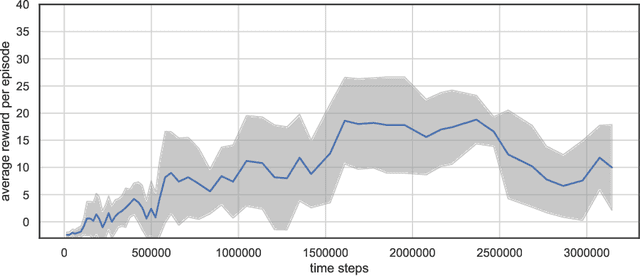

Abstract:This work is devoted to unresolved problems of Artificial General Intelligence - the inefficiency of transfer learning. One of the mechanisms that are used to solve this problem in the area of reinforcement learning is a model-based approach. In the paper we are expanding the schema networks method which allows to extract the logical relationships between objects and actions from the environment data. We present algorithms for training a Delta Schema Network (DSN), predicting future states of the environment and planning actions that will lead to positive reward. DSN shows strong performance of transfer learning on the classic Atari game environment.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge