Andreas Krug

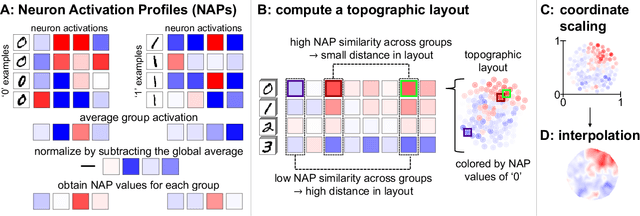

Visualizing Deep Neural Networks with Topographic Activation Maps

Apr 07, 2022

Abstract: Machine Learning with Deep Neural Networks (DNNs) has become a successful tool for solving tasks across various fields of application. The success of DNNs is strongly connected to their high complexity in terms of the number of network layers or of neurons in each layer, which makes it very difficult to understand how DNNs solve their learned task. To improve the explainability of DNNs, we adapt methods from neuroscience because this field has rich experience in analyzing complex and opaque systems. In this work, we draw inspiration from how neuroscience uses topographic maps to visualize the activity of the brain when it performs certain tasks. Transferring this approach to DNNs can help to visualize and understand their internal processes more intuitively, too. However, the inner structures of brains and DNNs differ substantially. Therefore, to be able to visualize activations of neurons in DNNs as topographic maps, we research techniques to lay out the neurons in a two-dimensional space in which neurons of similar activity are in the vicinity of each other. We introduce and compare different methods to obtain a topographic layout of the neurons in a network layer. Moreover, we demonstrate how to use the resulting topographic activation maps to identify errors or encoded biases in DNNs or data sets. Our novel visualization technique improves the transparency of DNN-based algorithmic decision-making systems and is accessible to a broad audience because topographic maps are intuitive to interpret without expert knowledge in Machine Learning.
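The idea of placing neurons with similar activity close together in 2D can be illustrated with a small sketch. It assumes each neuron is described by a profile of mean activations over a few input groups, and it uses PCA as one simple dimensionality reduction option; the abstract does not say which layout methods the paper compares, so PCA, the function names, and the toy data here are illustrative assumptions only.

```python
import math

def center_columns(rows):
    """Subtract the column mean from every entry."""
    n = len(rows)
    means = [sum(col) / n for col in zip(*rows)]
    return [[x - m for x, m in zip(row, means)] for row in rows]

def covariance(rows):
    """Sample covariance matrix of already-centered rows."""
    n, d = len(rows), len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) / (n - 1) for j in range(d)]
            for i in range(d)]

def mat_vec(mat, vec):
    return [sum(a * b for a, b in zip(row, vec)) for row in mat]

def top_eigenpair(mat, iters=200):
    """Power iteration from a fixed start vector (a simplification)."""
    d = len(mat)
    v = [1.0 / (i + 1) for i in range(d)]
    start_norm = math.sqrt(sum(x * x for x in v))
    v = [x / start_norm for x in v]
    for _ in range(iters):
        w = mat_vec(mat, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm < 1e-12:  # matrix is (numerically) zero in this direction
            break
        v = [x / norm for x in w]
    eigenvalue = sum(a * b for a, b in zip(v, mat_vec(mat, v)))
    return eigenvalue, v

def pca_layout(profiles):
    """Project each neuron's profile onto the top two principal axes."""
    centered = center_columns(profiles)
    cov = covariance(centered)
    axes = []
    for _ in range(2):
        lam, v = top_eigenpair(cov)
        axes.append(v)
        # Deflate: remove the component just found before the next search.
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(len(v))]
               for i in range(len(v))]
    return [tuple(sum(a * b for a, b in zip(row, axis)) for axis in axes)
            for row in centered]

# Hypothetical data: 4 neurons (rows), mean activation for 3 input groups.
profiles = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.1, 0.9],
]
coords = pca_layout(profiles)
for i, (x, y) in enumerate(coords):
    print(f"neuron {i}: ({x:+.3f}, {y:+.3f})")
```

In this toy example, neurons 0 and 1 have nearly identical profiles and end up close together, while neuron 2 lands far from both. Coloring each 2D position by the neuron's activation for a given input would then give a map in the spirit of the topographic activation maps described above.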

Gradient-Adjusted Neuron Activation Profiles for Comprehensive Introspection of Convolutional Speech Recognition Models

Feb 19, 2020

Abstract: Deep Learning-based Automatic Speech Recognition (ASR) models are very successful, but hard to interpret. To gain a better understanding of how Artificial Neural Networks (ANNs) accomplish their tasks, introspection methods have been proposed. Adapting such techniques from computer vision to speech recognition is not straightforward, because speech data is more complex and less interpretable than image data. In this work, we introduce Gradient-adjusted Neuron Activation Profiles (GradNAPs) as a means to interpret features and representations in Deep Neural Networks. GradNAPs are characteristic responses of ANNs to particular groups of inputs, which incorporate the relevance of neurons for prediction. We show how to utilize GradNAPs to gain insight into how data is processed in ANNs. This includes different ways of visualizing features and clustering of GradNAPs to compare embeddings of different groups of inputs in any layer of a given network. We demonstrate our proposed techniques using a fully-convolutional ASR model.
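As a rough illustration of what a group-wise, relevance-weighted response could look like, the sketch below multiplies each neuron's activation by a gradient value and averages the result over all inputs in a group. This is only one plausible reading of the abstract: the exact GradNAP definition and normalization are not given here, and the function name, argument layout, and numbers are hypothetical.

```python
def grad_adjusted_profiles(activations, gradients, groups):
    """Mean of activation * gradient per neuron, computed per input group.

    activations, gradients: one list of per-neuron values for each input.
    groups: the group label (for example a phoneme or word) of each input.
    """
    sums, counts = {}, {}
    for act, grad, group in zip(activations, gradients, groups):
        # Weight each activation by its (assumed) relevance for the prediction.
        weighted = [a * g for a, g in zip(act, grad)]
        if group not in sums:
            sums[group] = [0.0] * len(weighted)
            counts[group] = 0
        sums[group] = [s + w for s, w in zip(sums[group], weighted)]
        counts[group] += 1
    return {g: [s / counts[g] for s in sums[g]] for g in sums}

# Hypothetical values for 3 inputs and a layer with 2 neurons.
activations = [[1.0, 2.0], [3.0, 0.0], [0.5, 4.0]]
gradients = [[0.5, 0.0], [0.5, 1.0], [2.0, 0.25]]
groups = ["a", "a", "b"]

group_profiles = grad_adjusted_profiles(activations, gradients, groups)
print(group_profiles)  # {'a': [1.0, 0.0], 'b': [1.0, 1.0]}
```

Profiles of this kind, one vector per group, are the sort of object that could then be clustered or visualized to compare how different input groups are represented in a layer.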

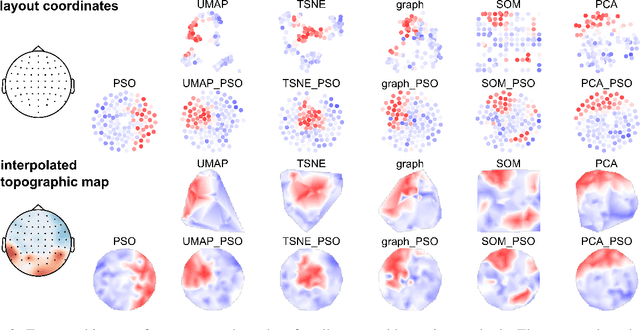

Visualizing Deep Neural Networks for Speech Recognition with Learned Topographic Filter Maps

Dec 06, 2019

Abstract: The uninformative ordering of artificial neurons in Deep Neural Networks complicates visualizing activations in deeper layers. This is one reason why the internal structure of such models is very unintuitive. In neuroscience, the activity of real brains can be visualized by highlighting active regions. Inspired by those techniques, we train a convolutional speech recognition model in which filters are arranged in a 2D grid and neighboring filters are similar to each other. We show how those topographic filter maps visualize artificial neuron activations more intuitively. Moreover, we investigate whether this causes phoneme-responsive neurons to be grouped in certain regions of the topographic map.

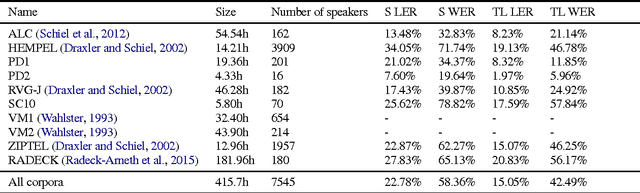

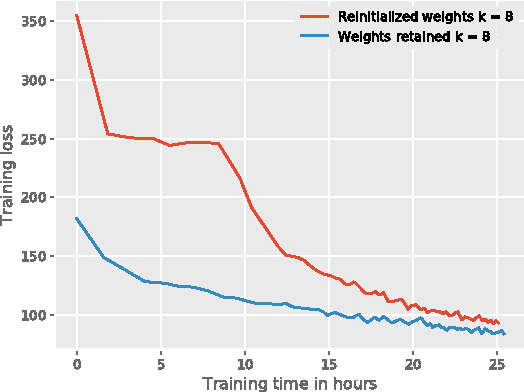

Transfer Learning for Speech Recognition on a Budget

Jun 01, 2017

Abstract: End-to-end training of automated speech recognition (ASR) systems requires massive data and compute resources. We explore transfer learning based on model adaptation as an approach for training ASR models under constrained GPU memory, throughput, and training data. We conduct several systematic experiments adapting a Wav2Letter convolutional neural network originally trained for English ASR to the German language. We show that this technique allows faster training on consumer-grade resources while requiring less training data to achieve the same accuracy, thereby lowering the cost of training ASR models in other languages. Model introspection revealed that small adaptations to the network's weights were sufficient for good performance, especially for inner layers.
