Andreas Floros

On the Anisotropy of Score-Based Generative Models

Oct 27, 2025

Abstract: We investigate the role of network architecture in shaping the inductive biases of modern score-based generative models. To this end, we introduce the Score Anisotropy Directions (SADs), architecture-dependent directions that reveal how different networks preferentially capture data structure. Our analysis shows that SADs form adaptive bases aligned with the architecture's output geometry, providing a principled way to predict generalization ability in score models prior to training. Through both synthetic data and standard image benchmarks, we demonstrate that SADs reliably capture fine-grained model behavior and correlate with downstream performance, as measured by Wasserstein metrics. Our work offers a new lens for explaining and predicting directional biases of generative models.

Trustworthy Image Super-Resolution via Generative Pseudoinverse

May 18, 2025

Abstract: We consider the problem of trustworthy image restoration, taking the form of a constrained optimization over the prior density. To this end, we develop generative models for the task of image super-resolution that respect the degradation process and that can be made asymptotically consistent with the low-resolution measurements, outperforming existing methods by a large margin in that respect.
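
To make "consistent with the low-resolution measurements" concrete, here is a minimal sketch of the standard pseudoinverse correction, which maps any estimate x to x + A⁺(y − Ax) so that the corrected signal reproduces the measurement y exactly. It is not the paper's method: the 1-D average-pooling operator and the names `downsample`, `pinv_upsample`, and `project_consistent` are illustrative assumptions.

```python
def downsample(x, factor=2):
    """Average-pool a 1-D signal. Assumed toy stand-in for the degradation A."""
    return [sum(x[i:i + factor]) / factor for i in range(0, len(x), factor)]


def pinv_upsample(r, factor=2):
    """Moore-Penrose pseudoinverse of average pooling: replicate each value."""
    return [v for v in r for _ in range(factor)]


def project_consistent(x, y, factor=2):
    """Return x + A^+(y - A x), which satisfies A x' = y for this toy A."""
    residual = [yi - ai for yi, ai in zip(y, downsample(x, factor))]
    correction = pinv_upsample(residual, factor)
    return [xi + ci for xi, ci in zip(x, correction)]


# Hypothetical data: a high-resolution estimate and a low-resolution measurement.
x_estimate = [1.0, 3.0, 2.0, 6.0]
y_measured = [4.0, 1.0]
x_consistent = project_consistent(x_estimate, y_measured)
```

The correction only changes the component of the estimate that the degradation can observe; the within-block detail of `x_estimate` is left untouched.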

Tracing the Roots: Leveraging Temporal Dynamics in Diffusion Trajectories for Origin Attribution

Nov 12, 2024

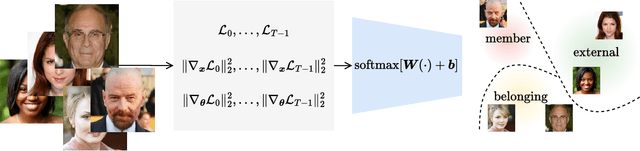

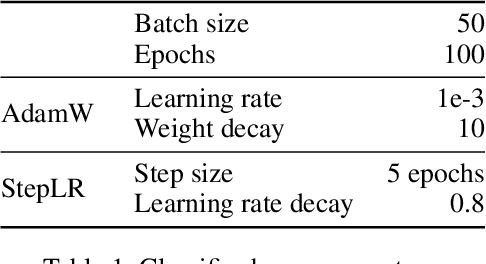

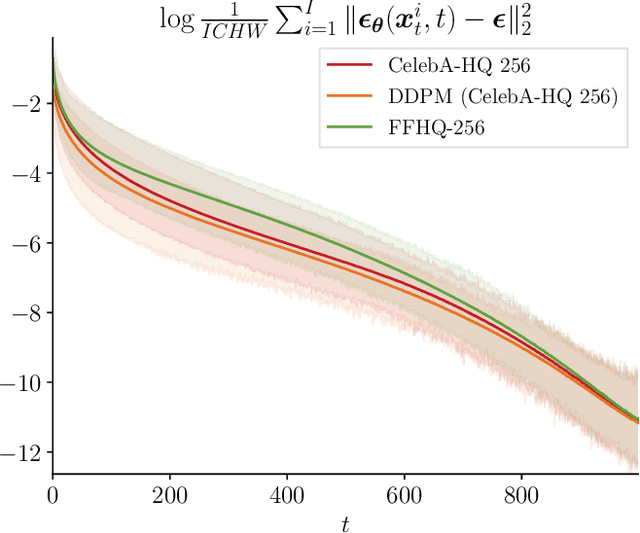

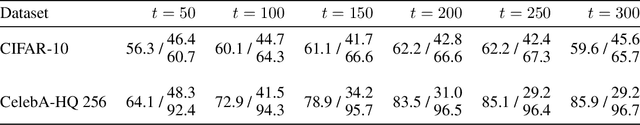

Abstract: Diffusion models have revolutionized image synthesis, garnering significant research interest in recent years. Diffusion is an iterative algorithm in which samples are generated step-by-step, starting from pure noise. This process introduces the notion of diffusion trajectories, i.e., paths from the standard Gaussian distribution to the target image distribution. In this context, we study discriminative algorithms operating on these trajectories. Specifically, given a pre-trained diffusion model, we consider the problem of classifying images as part of the training dataset, generated by the model or originating from an external source. Our approach demonstrates the presence of patterns across steps that can be leveraged for classification. We also conduct ablation studies, which reveal that using higher-order gradient features to characterize the trajectories leads to significant performance gains and more robust algorithms.
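
The abstract does not specify how trajectory features are computed. As one possible illustration only, the sketch below treats a trajectory as a list of per-step vectors and summarizes it by the norms of its first- and second-order finite differences across steps. Reading "higher-order gradient features" as finite differences is an assumption, and the names `finite_difference` and `trajectory_features` are hypothetical.

```python
import math


def finite_difference(traj, order=1):
    """Apply `order` rounds of step-to-step differencing to a trajectory.

    `traj` is a list of steps, each step a list of floats of equal length.
    """
    out = traj
    for _ in range(order):
        out = [[b - a for a, b in zip(s0, s1)] for s0, s1 in zip(out, out[1:])]
    return out


def trajectory_features(traj, max_order=2):
    """Concatenate the L2 norms of the differences of order 1..max_order."""
    feats = []
    for k in range(1, max_order + 1):
        for d in finite_difference(traj, k):
            feats.append(math.sqrt(sum(v * v for v in d)))
    return feats


# Hypothetical 3-step trajectory in 2 dimensions.
features = trajectory_features([[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]])
```

A feature vector of this kind could then be passed to any standard classifier to separate the three classes the abstract names: training images, model-generated images, and external images.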

INDigo: An INN-Guided Probabilistic Diffusion Algorithm for Inverse Problems

Jun 05, 2023

Abstract: Recently it has been shown that using diffusion models for inverse problems can lead to remarkable results. However, these approaches require a closed-form expression of the degradation model and cannot support complex degradations. To overcome this limitation, we propose a method (INDigo) that combines invertible neural networks (INN) and diffusion models for general inverse problems. Specifically, we train the forward process of INN to simulate an arbitrary degradation process and use the inverse as a reconstruction process. During the diffusion sampling process, we impose an additional data-consistency step that minimizes the distance between the intermediate result and the INN-optimized result at every iteration, where the INN-optimized image is composed of the coarse information given by the observed degraded image and the details generated by the diffusion process. With the help of INN, our algorithm effectively estimates the details lost in the degradation process and is no longer limited by the requirement of knowing the closed-form expression of the degradation model. Experiments demonstrate that our algorithm obtains competitive results compared with recent leading methods both quantitatively and visually. Moreover, our algorithm performs well on more complex degradation models and real-world low-quality images.
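
The data-consistency step described above can be sketched with a toy invertible transform standing in for the trained INN. Everything here is an assumption made for illustration: the fixed Haar-style pair transform replaces a learned network, and `inn_forward`, `inn_inverse`, `data_consistency_step`, and the `step` parameter are hypothetical names, not the authors' implementation.

```python
import math

SQRT2 = math.sqrt(2.0)


def inn_forward(x):
    """Toy invertible map: split a 1-D signal into coarse and detail parts."""
    coarse = [(x[i] + x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    return coarse, detail


def inn_inverse(coarse, detail):
    """Exact inverse of `inn_forward`."""
    x = []
    for c, d in zip(coarse, detail):
        x.extend([(c + d) / SQRT2, (c - d) / SQRT2])
    return x


def data_consistency_step(x_est, y, step=1.0):
    """Move the intermediate estimate toward the INN-optimized result.

    The INN-optimized result keeps the detail of `x_est` but takes its coarse
    part from the observation `y`. The update is a gradient step on
    0.5 * ||x_est - x_inn||^2 with step size `step`.
    """
    _, detail = inn_forward(x_est)
    x_inn = inn_inverse(y, detail)
    return [xe - step * (xe - xi) for xe, xi in zip(x_est, x_inn)]


# Hypothetical intermediate diffusion estimate and observed degraded signal.
x_updated = data_consistency_step([1.0, 2.0, 3.0, 5.0], [0.5, -1.0], step=1.0)
```

With `step=1.0` the update lands on the INN-optimized result, so its coarse part matches the observation while the diffusion-generated detail is preserved. Smaller values only pull the estimate part of the way there.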
