Andrea Rubbi

MixFlow: Mixture-Conditioned Flow Matching for Out-of-Distribution Generalization

Jan 16, 2026

Abstract: Achieving robust generalization under distribution shift remains a central challenge in conditional generative modeling, as existing conditional flow-based methods often struggle to extrapolate beyond the training conditions. We introduce MixFlow, a conditional flow-matching framework for descriptor-controlled generation that directly targets this limitation by jointly learning a descriptor-conditioned base distribution and a descriptor-conditioned flow field via shortest-path flow matching. By modeling the base distribution as a learnable, descriptor-dependent mixture, MixFlow enables smooth interpolation and extrapolation to unseen conditions, leading to substantially improved out-of-distribution generalization. We provide analytical insights into the behavior of the proposed framework and empirically demonstrate its effectiveness across multiple domains, including prediction of responses to unseen perturbations in single-cell transcriptomic data and high-content microscopy-based drug screening tasks. Across these diverse settings, MixFlow consistently outperforms standard conditional flow-matching baselines. Overall, MixFlow offers a simple yet powerful approach for achieving robust, generalizable, and controllable generative modeling across heterogeneous domains.
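The two ingredients named in the abstract, a descriptor-dependent mixture base and a flow-matching target, can be illustrated with a small NumPy sketch. This is not the authors' implementation. It assumes that "shortest-path" means straight-line interpolation between a base sample and a data sample, and it uses fixed random arrays where MixFlow would learn parameters. All names (`sample_base`, `straight_path_target`, `logit_weights`, `means`) are hypothetical.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def sample_base(descriptor, logit_weights, means, scale, rng):
    """Draw x0 from a Gaussian mixture whose component weights depend on the descriptor.

    Assumption: a linear map followed by softmax stands in for the learned
    descriptor-to-mixture-weight model.
    """
    probs = softmax(descriptor @ logit_weights)  # (n_samples, n_components)
    components = np.array([rng.choice(len(means), p=p) for p in probs])
    noise = rng.normal(size=(len(descriptor), means.shape[1]))
    return means[components] + scale * noise

def straight_path_target(x0, x1, t):
    """Return the point at time t on the straight path x0 -> x1 and its velocity."""
    x_t = (1.0 - t) * x0 + t * x1
    velocity = x1 - x0  # constant along a straight path
    return x_t, velocity

rng = np.random.default_rng(0)
n_samples, descriptor_dim, n_components, data_dim = 4, 3, 2, 5
descriptor = rng.normal(size=(n_samples, descriptor_dim))
logit_weights = rng.normal(size=(descriptor_dim, n_components))  # fixed here, learned in practice
means = rng.normal(size=(n_components, data_dim))                # fixed here, learned in practice
x1 = rng.normal(size=(n_samples, data_dim))                      # stand-in for observed data
t = rng.uniform(size=(n_samples, 1))

x0 = sample_base(descriptor, logit_weights, means, 0.1, rng)
x_t, velocity = straight_path_target(x0, x1, t)
```

In a training loop, a descriptor-conditioned velocity network would be regressed onto `velocity` at the inputs `(x_t, t, descriptor)`; that step is omitted here.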

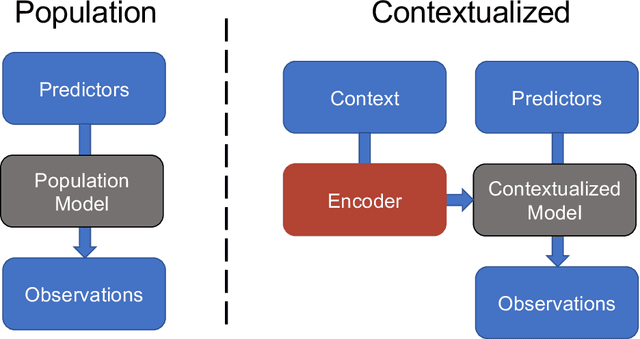

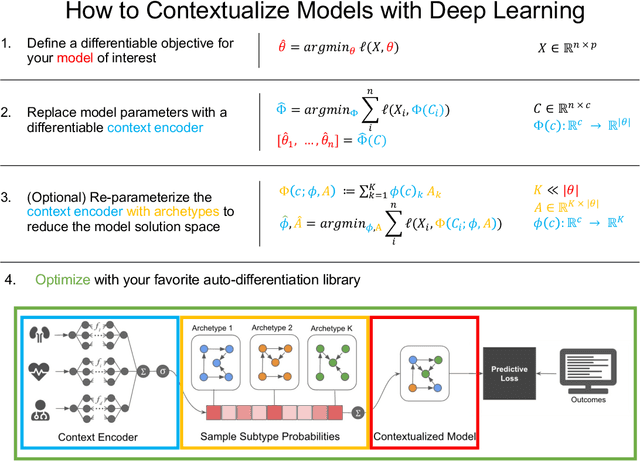

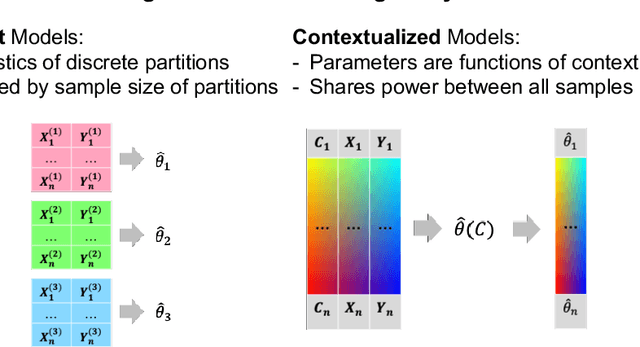

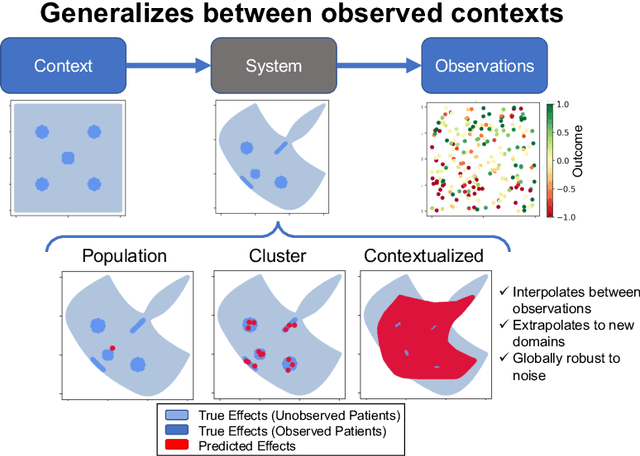

Contextualized Machine Learning

Oct 17, 2023

Abstract: We examine Contextualized Machine Learning (ML), a paradigm for learning heterogeneous and context-dependent effects. Contextualized ML estimates heterogeneous functions by applying deep learning to the meta-relationship between contextual information and context-specific parametric models. This is a form of varying-coefficient modeling that unifies existing frameworks, including cluster analysis and cohort modeling, by introducing two reusable concepts: a context encoder, which translates sample context into model parameters, and a sample-specific model, which operates on sample predictors. We review the process of developing contextualized models, nonparametric inference from contextualized models, and identifiability conditions of contextualized models. Finally, we present the open-source PyTorch package ContextualizedML.
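The split between a context encoder and a sample-specific model can be sketched in a few lines of NumPy. This is an illustration only and does not use the ContextualizedML package or its API. It assumes a linear regression as the sample-specific model and lets a single linear map stand in for the deep context encoder. The function and variable names are hypothetical.

```python
import numpy as np

def context_encoder(context, weights, bias):
    """Map each sample's context vector to that sample's regression coefficients.

    Assumption: a linear map stands in for the deep network described in the abstract.
    """
    return context @ weights + bias  # (n_samples, n_predictors)

def sample_specific_model(predictors, coefficients):
    """Apply each sample's own linear model to its own predictors."""
    return np.sum(predictors * coefficients, axis=1)  # (n_samples,)

rng = np.random.default_rng(0)
n_samples, context_dim, n_predictors = 5, 2, 3
context = rng.normal(size=(n_samples, context_dim))
predictors = rng.normal(size=(n_samples, n_predictors))
weights = rng.normal(size=(context_dim, n_predictors))  # encoder parameters, untrained here
bias = np.zeros(n_predictors)

coefficients = context_encoder(context, weights, bias)
predictions = sample_specific_model(predictors, coefficients)
```

Because the coefficients vary with context, two samples with identical predictors but different contexts can receive different predictions, which is the varying-coefficient behavior the abstract refers to.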
