Andrea Bordone Molini

Deep 3D World Models for Multi-Image Super-Resolution Beyond Optical Flow

Jan 30, 2024

Abstract: Multi-image super-resolution (MISR) increases the spatial resolution of a low-resolution (LR) acquisition by combining multiple images carrying complementary information in the form of sub-pixel offsets in the scene sampling, and can be significantly more effective than its single-image counterpart. Its main difficulty lies in accurately registering and fusing the multi-image information. Currently studied settings, such as burst photography, typically assume small geometric disparity between the LR images and rely on optical flow for image registration. We study a MISR method that can increase the resolution of sets of images acquired with arbitrary, and potentially wildly different, camera positions and orientations, generalizing the currently studied MISR settings. Our proposed model, called EpiMISR, moves away from optical flow and explicitly uses the epipolar geometry of the acquisition process, together with transformer-based processing of radiance feature fields, to substantially improve over state-of-the-art MISR methods in the presence of large disparities in the LR images.
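The epipolar geometry mentioned in the abstract can be illustrated with a minimal sketch. This is not the EpiMISR model; it only shows the standard two-view constraint that a pixel in one image must lie on a line in the other once the relative camera pose is known, which is what makes registration possible without optical flow. The intrinsics `K`, rotation `R`, translation `t`, and the 3D point are hypothetical values chosen for illustration.

```python
import numpy as np

def skew(t):
    """Return the 3x3 cross-product matrix [t]_x, so that skew(t) @ v == np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def fundamental_matrix(K1, K2, R, t):
    """Fundamental matrix F with x2^T F x1 = 0, assuming X2 = R @ X1 + t."""
    E = skew(t) @ R  # essential matrix
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)

def project(K, X):
    """Pinhole projection of a 3D point to homogeneous pixel coordinates."""
    x = K @ X
    return x / x[2]

# Hypothetical camera setup: shared intrinsics, 20 degree rotation about the y axis.
K = np.array([[500.0, 0.0, 64.0],
              [0.0, 500.0, 64.0],
              [0.0, 0.0, 1.0]])
a = np.deg2rad(20.0)
R = np.array([[np.cos(a), 0.0, np.sin(a)],
              [0.0, 1.0, 0.0],
              [-np.sin(a), 0.0, np.cos(a)]])
t = np.array([0.5, 0.0, 0.1])
F = fundamental_matrix(K, K, R, t)

# A 3D point seen by both cameras.
X1 = np.array([0.2, -0.1, 4.0])
x1 = project(K, X1)
x2 = project(K, R @ X1 + t)

# The epipolar line in image 2 for pixel x1; x2 should lie on it.
line = F @ x1
residual = float(x2 @ line)
```

The residual is zero up to floating-point error, so a search for the match of `x1` in the second view can be restricted to `line` regardless of how far apart the two cameras are.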

Speckle2Void: Deep Self-Supervised SAR Despeckling with Blind-Spot Convolutional Neural Networks

Jul 04, 2020

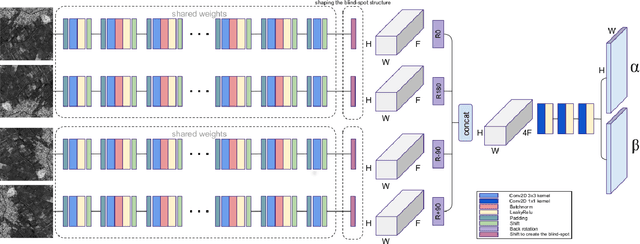

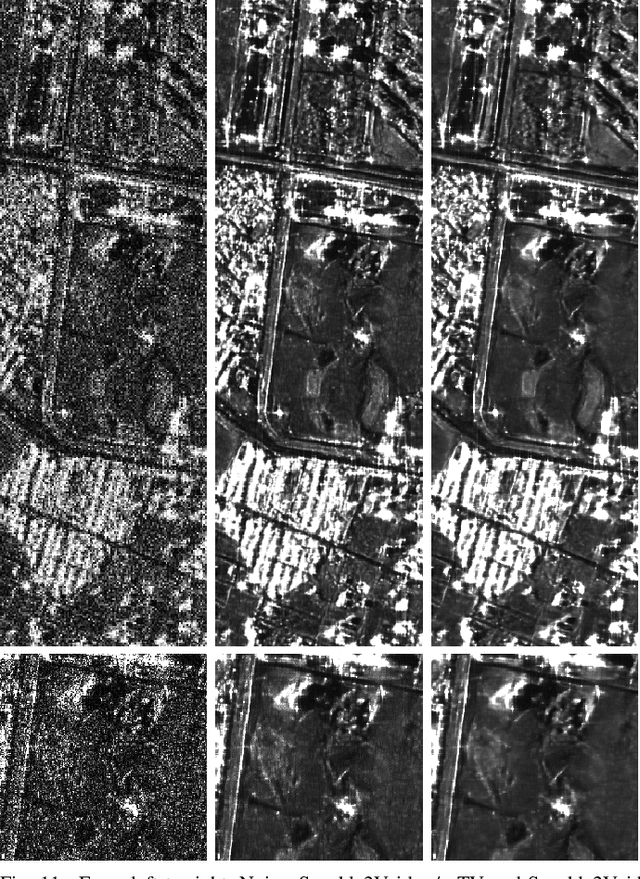

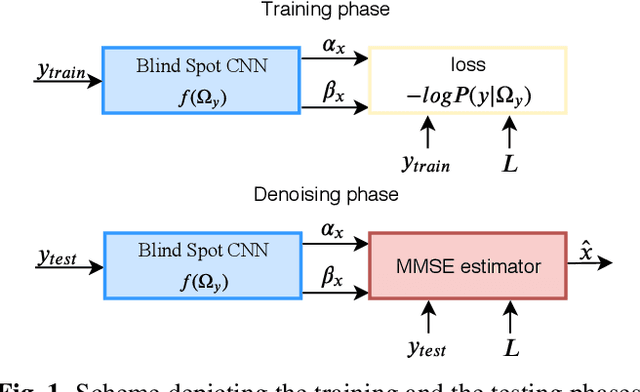

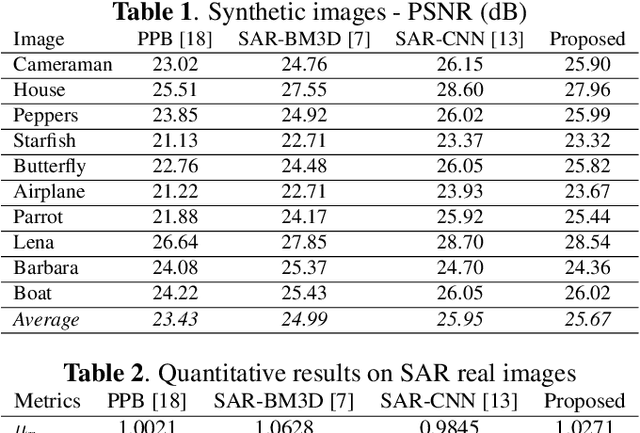

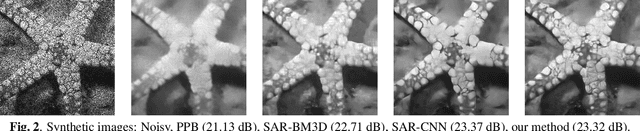

Abstract: Information extraction from synthetic aperture radar (SAR) images is heavily impaired by speckle noise, hence despeckling is a crucial preliminary step in scene analysis algorithms. The recent success of deep learning points to a new generation of despeckling techniques that could outperform classical model-based methods. However, current deep learning approaches to despeckling require supervision for training, whereas clean SAR images are impossible to obtain. In the literature, this issue is tackled by resorting to either synthetically speckled optical images, whose properties differ from those of true SAR images, or multi-temporal SAR images, which are difficult to acquire or fuse accurately. In this paper, inspired by recent works on blind-spot denoising networks, we propose a self-supervised Bayesian despeckling method. The proposed method is trained using only noisy SAR images and can therefore learn features of real SAR images rather than synthetic data. Experiments show that the performance of the proposed approach is very close to the supervised training approach on synthetic data and superior on real data in both quantitative and visual assessments.
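The blind-spot idea referred to in the abstract can be sketched as follows. This is not the Speckle2Void network: a fixed neighbourhood average stands in for the learned CNN, and the function name `blind_spot_mean` is hypothetical. The point it illustrates is that each output pixel is computed without looking at the corresponding input pixel, which is what lets a noisy image serve as its own training target.

```python
import numpy as np

def blind_spot_mean(img, radius=1):
    """Estimate each pixel from its neighbours only, excluding the pixel itself."""
    padded = np.pad(img, radius, mode="reflect")
    h, w = img.shape
    total = np.zeros_like(img, dtype=float)
    count = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dy == 0 and dx == 0:
                continue  # the blind spot: the centre pixel is never read
            total += padded[radius + dy:radius + dy + h,
                            radius + dx:radius + dx + w]
            count += 1
    return total / count

# Changing a pixel's own value does not change the estimate at that pixel.
clean = np.ones((5, 5))
corrupted = clean.copy()
corrupted[2, 2] = 100.0
same_centre = blind_spot_mean(clean)[2, 2] == blind_spot_mean(corrupted)[2, 2]
```

Because the estimate at a location is independent of the noisy value there, a network with this property cannot learn the identity mapping when trained to reproduce its own noisy input.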

Towards Deep Unsupervised SAR Despeckling with Blind-Spot Convolutional Neural Networks

Jan 15, 2020

Abstract: SAR despeckling is a problem of paramount importance in remote sensing, since it represents the first step of many scene analysis algorithms. Recently, deep learning techniques have outperformed classical model-based despeckling algorithms. However, such methods require clean ground truth images for training and therefore resort to synthetically speckled optical images, since clean SAR images cannot be acquired. In this paper, inspired by recent works on blind-spot denoising networks, we propose a self-supervised Bayesian despeckling method. The proposed method is trained using only noisy images and can therefore learn features of real SAR images rather than synthetic data. We show that the performance of the proposed network is very close to the supervised training approach on synthetic data and competitive on real data.

DeepSUM++: Non-local Deep Neural Network for Super-Resolution of Unregistered Multitemporal Images

Jan 15, 2020

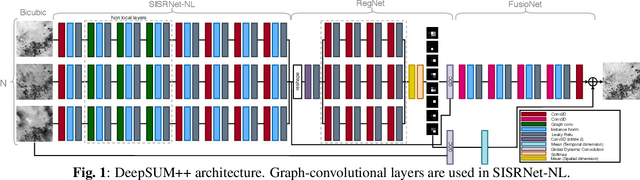

Abstract: Deep learning methods for super-resolution of a remote sensing scene from multiple unregistered low-resolution images have recently gained attention thanks to a challenge proposed by the European Space Agency. This paper presents an evolution of the winner of the challenge, showing how incorporating non-local information in a convolutional neural network makes it possible to exploit self-similar patterns that provide enhanced regularization of the super-resolution problem. Experiments on the dataset of the challenge show improved performance over the state-of-the-art, which does not exploit non-local information.

DeepSUM: Deep neural network for Super-resolution of Unregistered Multitemporal images

Jul 15, 2019

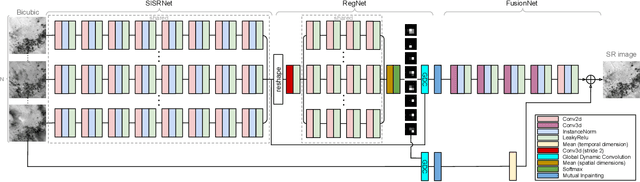

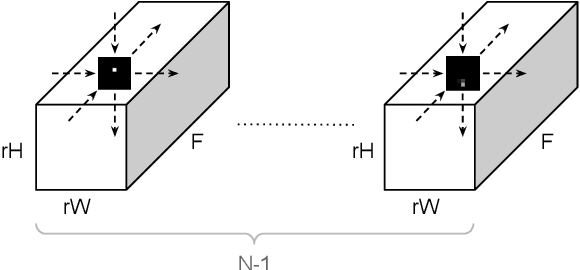

Abstract: Recently, convolutional neural networks (CNN) have been successfully applied to many remote sensing problems. However, deep learning techniques for multi-image super-resolution from multitemporal unregistered imagery have received little attention so far. This work proposes a novel CNN-based technique that exploits both spatial and temporal correlations to combine multiple images. This novel framework integrates the spatial registration task directly inside the CNN, and makes it possible to exploit the representation learning capabilities of the network to enhance registration accuracy. The entire super-resolution process relies on a single CNN with three main stages: shared 2D convolutions to extract high-dimensional features from the input images; a subnetwork proposing registration filters derived from the high-dimensional feature representations; 3D convolutions for slow fusion of the features from multiple images. The whole network can be trained end-to-end to recover a single high-resolution image from multiple unregistered low-resolution images. The method presented in this paper is the winner of the PROBA-V super-resolution challenge issued by the European Space Agency.
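The three-stage data flow described in the abstract (shared feature extraction, registration, fusion) can be sketched with fixed operations. This is not the DeepSUM network and performs no upsampling: mean removal stands in for the shared 2D convolutions, integer-shift estimation by circular cross-correlation stands in for the learned registration filters, and a plain average stands in for the 3D-convolution fusion. All function names are hypothetical.

```python
import numpy as np

def extract_features(img):
    """Stage 1 stand-in: the same operation applied to every image (mean removal)."""
    return img - img.mean()

def estimate_shift(ref, img):
    """Stage 2 stand-in: integer (dy, dx) roll that best aligns img to ref,
    found as the peak of the circular cross-correlation computed via FFT."""
    corr = np.fft.ifft2(np.fft.fft2(ref) * np.conj(np.fft.fft2(img))).real
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    return int(dy), int(dx)

def register_and_fuse(images):
    """Stage 3 stand-in: align every image to the first one, then average."""
    ref = extract_features(images[0])
    aligned = []
    for img in images:
        dy, dx = estimate_shift(ref, extract_features(img))
        aligned.append(np.roll(img, (dy, dx), axis=(0, 1)))
    return np.mean(aligned, axis=0)

# Three circularly shifted copies of the same scene, as a toy unregistered set.
rng = np.random.default_rng(0)
scene = rng.random((16, 16))
images = [scene,
          np.roll(scene, (2, 3), axis=(0, 1)),
          np.roll(scene, (-1, 5), axis=(0, 1))]
fused = register_and_fuse(images)
```

In this toy case the shifts are exact integer rolls, so the fused result reproduces the reference scene. The appeal of doing registration inside the network, as the abstract describes, is that alignment is learned from the features and trained jointly with fusion rather than fixed in advance.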
