André Apitzsch

OmniFlow: Human Omnidirectional Optical Flow

Apr 16, 2021

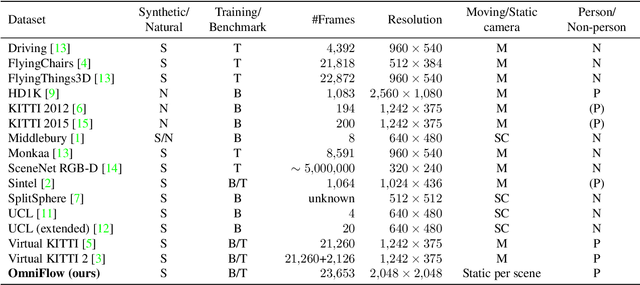

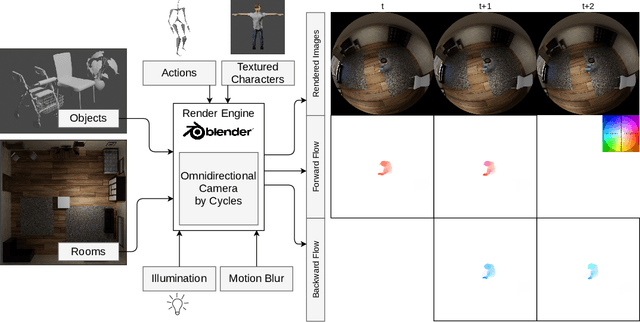

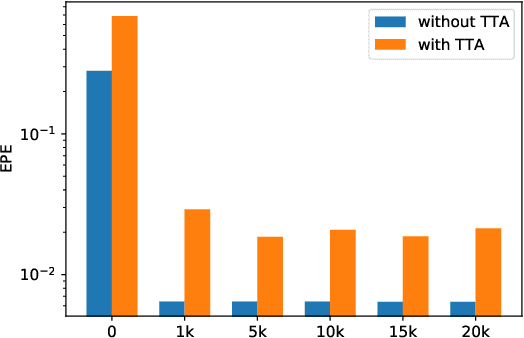

Abstract: Optical flow is the motion of a pixel between at least two consecutive video frames and can be estimated with an end-to-end trainable convolutional neural network. Large training datasets are required to improve the accuracy of optical flow estimation. Our paper presents OmniFlow, a new synthetic omnidirectional human optical flow dataset. Using a rendering engine, we create a naturalistic 3D indoor environment with textured rooms, characters, actions, objects, illumination and motion blur, where all components of the environment are shuffled during the data capturing process. The simulation outputs rendered images of household activities and the corresponding forward and backward optical flow. To verify the data for training volumetric correspondence networks for optical flow estimation, we train on different subsets of the data and test on OmniFlow with and without test-time augmentation. As a result, we have generated 23,653 image pairs with the corresponding forward and backward optical flow. Our dataset can be downloaded from: https://mytuc.org/byfs
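One common use of paired forward and backward flow is a consistency check: following the forward vector from a pixel and then the backward vector at the target should lead back to the start. The sketch below illustrates that relationship only; it is not a step described in the abstract. The function name `forward_backward_error`, the list-of-rows layout, and the nearest-pixel lookup are assumptions made for illustration.

```python
import math

def forward_backward_error(forward, backward):
    """Per-pixel consistency error between a forward and a backward flow field.

    Each field is a list of rows; each row holds (u, v) displacements in
    pixels, with u along x (columns) and v along y (rows). Pixels whose
    forward target falls outside the image get None.
    """
    height, width = len(forward), len(forward[0])
    errors = []
    for y in range(height):
        row = []
        for x in range(width):
            u, v = forward[y][x]
            # Nearest-pixel lookup of the target position (assumption:
            # no bilinear interpolation, to keep the sketch short).
            tx, ty = round(x + u), round(y + v)
            if 0 <= tx < width and 0 <= ty < height:
                bu, bv = backward[ty][tx]
                # Consistent flow satisfies forward + backward = 0.
                row.append(math.hypot(u + bu, v + bv))
            else:
                row.append(None)
        errors.append(row)
    return errors
```

Large errors typically mark occluded regions or unreliable estimates, so a threshold on this value could be used to mask such pixels.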

OmniDetector: With Neural Networks to Bounding Boxes

May 22, 2018

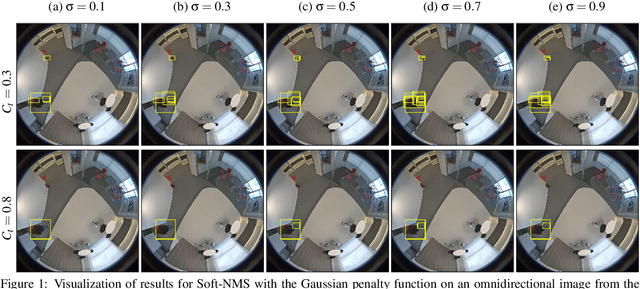

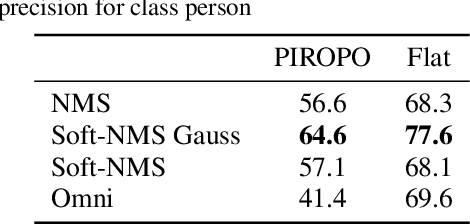

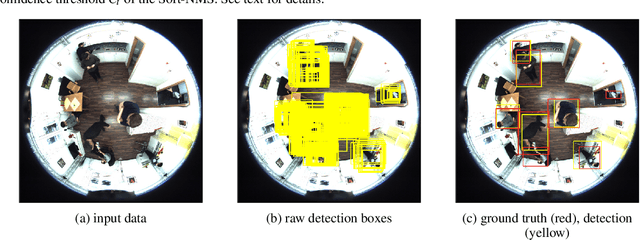

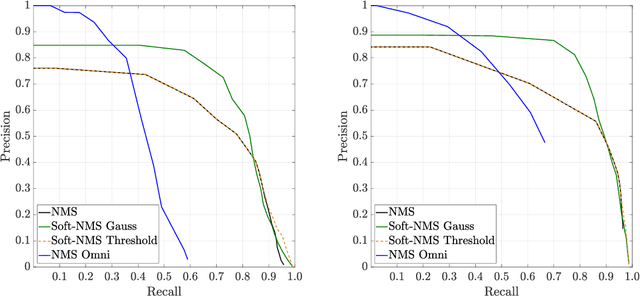

Abstract: We propose a person detector for omnidirectional images, an accurate method to generate minimal enclosing rectangles of persons. The basic idea is to adapt the qualitative detection performance of a convolutional neural network based method, namely YOLOv2, to fish-eye images. The design of our approach combines a state-of-the-art object detector with highly overlapping image areas and their regions of interest. This overlap reduces the number of false negatives. Based on the raw bounding boxes of the detector, we fine-tuned overlapping bounding boxes with three approaches: non-maximum suppression, soft non-maximum suppression, and soft non-maximum suppression with Gaussian smoothing. The evaluation was done on the PIROPO database, supplemented with bounding boxes on omnidirectional images. We achieve an average precision of 64.4 % with YOLOv2 for the class person. For this purpose, we fine-tuned the soft non-maximum suppression with Gaussian smoothing.
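To make the third variant concrete, here is a minimal sketch of soft non-maximum suppression with a Gaussian penalty, in its commonly published form: instead of discarding a box that overlaps the current best detection, its score is multiplied by exp(-IoU² / sigma). The function names, the `(x1, y1, x2, y2)` box format, and the values `sigma=0.5` and `score_threshold=0.001` are assumptions for illustration, not the settings used in the paper.

```python
import math

def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def soft_nms_gaussian(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Return (box, score) pairs after Gaussian soft-NMS, best first."""
    candidates = list(zip(boxes, scores))
    kept = []
    while candidates:
        # Select the highest-scoring remaining detection.
        best_idx = max(range(len(candidates)), key=lambda i: candidates[i][1])
        best_box, best_score = candidates.pop(best_idx)
        kept.append((best_box, best_score))
        rescored = []
        for box, score in candidates:
            # Decay the score smoothly with the overlap instead of
            # removing the box outright, as hard NMS would.
            overlap = iou(best_box, box)
            new_score = score * math.exp(-(overlap ** 2) / sigma)
            if new_score >= score_threshold:
                rescored.append((box, new_score))
        candidates = rescored
    return kept
```

With this rule a heavily overlapping box survives with a reduced score rather than being dropped, which can help when two persons stand close together.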

Cubes3D: Neural Network based Optical Flow in Omnidirectional Image Scenes

May 22, 2018

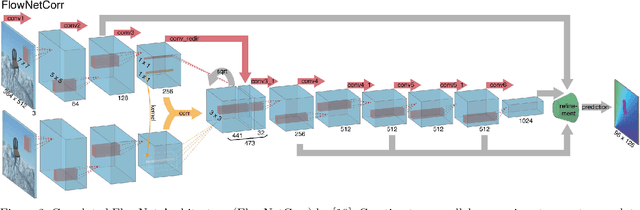

Abstract: Optical flow estimation with convolutional neural networks (CNNs) has recently been applied successfully to various computer vision tasks. In this paper, we adapt a state-of-the-art approach for optical flow estimation to omnidirectional images. We investigate CNN architectures to determine high motion variations caused by the geometry of fish-eye images. Further, we determine the qualitative influence of the texture of the non-rigid object on the motion vectors. To evaluate the results, we create ground truth motion fields synthetically. The ground truth contains cubes with a static background. We test variations of pre-trained FlowNet 2.0 architectures and report common error metrics. We generate competitive results for the motion of the foreground with inhomogeneous texture on the moving object.
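The abstract does not name the error metrics it reports. One widely used metric for optical flow is the average endpoint error, the mean Euclidean distance between predicted and ground truth flow vectors. The sketch below assumes that metric; the function name and the list-of-rows layout are hypothetical.

```python
import math

def average_endpoint_error(predicted, ground_truth):
    """Mean Euclidean distance between predicted and true flow vectors.

    Both arguments are lists of rows; each row is a list of (u, v) tuples
    giving the displacement of one pixel.
    """
    total, count = 0.0, 0
    for pred_row, gt_row in zip(predicted, ground_truth):
        for (pu, pv), (gu, gv) in zip(pred_row, gt_row):
            total += math.hypot(pu - gu, pv - gv)
            count += 1
    if count == 0:
        raise ValueError("flow fields are empty")
    return total / count
```

For example, a prediction of `(1, 0)` against a ground truth of `(4, 4)` contributes an error of 5 pixels for that pixel.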
