OmniDetector: With Neural Networks to Bounding Boxes

May 22, 2018

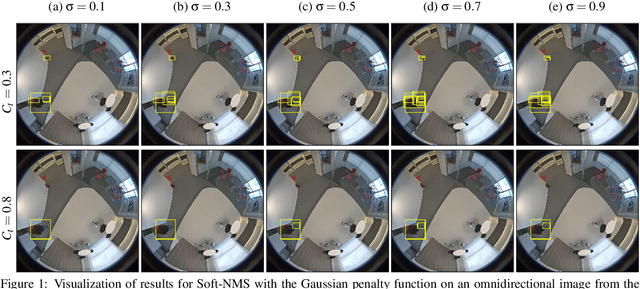

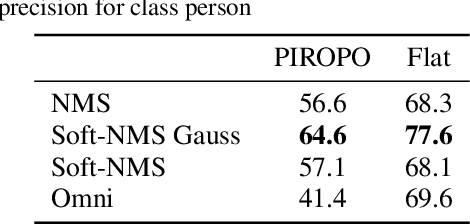

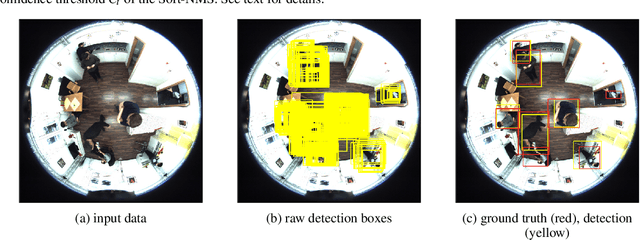

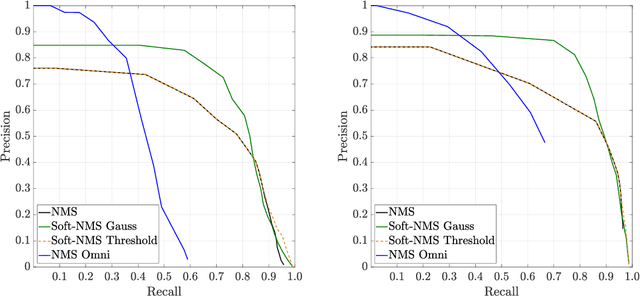

We propose a person detector for omnidirectional images, an accurate method to generate minimal enclosing rectangles of persons. The basic idea is to transfer the detection quality of a convolutional neural network based method, namely YOLOv2, to fish-eye images. The design of our approach combines a state-of-the-art object detector with highly overlapping image areas that serve as its regions of interest. This overlap reduces the number of false negatives. Starting from the raw bounding boxes of the detector, we refined overlapping bounding boxes with three approaches: non-maximum suppression, soft non-maximum suppression, and soft non-maximum suppression with Gaussian smoothing. The evaluation was done on the PIROPO database, supplemented with bounding boxes on omnidirectional images. With YOLOv2 we achieve an average precision of 64.4 % for the class person; this result was obtained with the fine-tuned soft non-maximum suppression with Gaussian smoothing.
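The three post-processing variants named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `iou` and `suppress`, the box format `(x1, y1, x2, y2)`, and the values of `iou_thresh`, `sigma` and `score_thresh` are assumptions chosen for illustration, and the linear and Gaussian re-scoring rules follow the commonly used soft-NMS formulation rather than anything stated in the abstract.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def suppress(boxes, scores, method="gaussian",
             iou_thresh=0.5, sigma=0.5, score_thresh=0.001):
    """Return kept (box, score) pairs, highest score first.

    method: "hard" (classic NMS), "linear" (soft NMS) or
    "gaussian" (soft NMS with Gaussian weighting).
    All default parameter values are illustrative assumptions.
    """
    remaining = list(zip(boxes, scores))
    kept = []
    while remaining:
        # Pick the highest-scoring box that is still a candidate.
        best = max(range(len(remaining)), key=lambda i: remaining[i][1])
        best_box, best_score = remaining.pop(best)
        kept.append((best_box, best_score))

        rescored = []
        for box, score in remaining:
            overlap = iou(best_box, box)
            if method == "hard":
                # Discard any box overlapping the winner too much.
                weight = 0.0 if overlap > iou_thresh else 1.0
            elif method == "linear":
                # Decay the score linearly with the overlap.
                weight = 1.0 - overlap if overlap > iou_thresh else 1.0
            elif method == "gaussian":
                # Decay the score smoothly; no hard threshold on IoU.
                weight = math.exp(-(overlap ** 2) / sigma)
            else:
                raise ValueError(f"unknown method: {method}")
            new_score = score * weight
            if new_score > score_thresh:
                rescored.append((box, new_score))
        remaining = rescored
    return kept


if __name__ == "__main__":
    demo_boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
    demo_scores = [0.9, 0.8, 0.7]
    for m in ("hard", "linear", "gaussian"):
        print(m, suppress(demo_boxes, demo_scores, method=m))
```

In this sketch the hard variant drops the second, heavily overlapping box entirely, while the two soft variants keep it with a reduced score. That behavior matters when persons stand close together, since a hard threshold can remove a true detection.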
