Cubes3D: Neural Network based Optical Flow in Omnidirectional Image Scenes

May 22, 2018

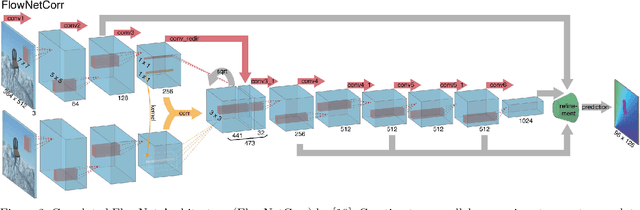

Optical flow estimation with convolutional neural networks (CNNs) has recently been applied successfully to various computer vision tasks. In this paper, we adapt a state-of-the-art approach for optical flow estimation to omnidirectional images. We investigate CNN architectures for estimating the high motion variations caused by the geometry of fish-eye images. Furthermore, we determine the qualitative influence that the texture of the non-rigid object has on the motion vectors. To evaluate the results, we synthetically create ground-truth motion fields. The ground truth contains cubes against a static background. We test variations of pre-trained FlowNet 2.0 architectures and report common error metrics. We obtain competitive results for the motion of the foreground when the moving object has an inhomogeneous texture.
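The abstract does not name the error metrics it reports. As an illustration only, the sketch below computes the average endpoint error (AEE), a metric widely used for optical flow, between a predicted and a ground-truth motion field. The function name, the grid-of-tuples data layout, and the optional foreground mask are assumptions made for this example and are not taken from the paper. It uses only the Python standard library.

```python
import math


def average_endpoint_error(pred, truth, mask=None):
    """Mean Euclidean distance between predicted and ground-truth flow vectors.

    pred, truth: grids (lists of rows) of (u, v) tuples with the same shape.
    mask: optional grid of booleans; only pixels marked True are scored.
          Hypothetical here: it stands in for a foreground mask of the
          moving object.
    """
    total, count = 0.0, 0
    for y, (pred_row, truth_row) in enumerate(zip(pred, truth)):
        for x, ((pu, pv), (tu, tv)) in enumerate(zip(pred_row, truth_row)):
            if mask is not None and not mask[y][x]:
                continue  # skip pixels outside the evaluated region
            total += math.hypot(pu - tu, pv - tv)
            count += 1
    if count == 0:
        raise ValueError("no pixels selected for evaluation")
    return total / count


# Toy 1x2 motion field: one pixel is off by (3, 4), the other is exact.
pred = [[(3.0, 4.0), (0.0, 0.0)]]
truth = [[(0.0, 0.0), (0.0, 0.0)]]
foreground = [[True, False]]

aee_all = average_endpoint_error(pred, truth)
aee_foreground = average_endpoint_error(pred, truth, foreground)
```

Restricting the score to a mask gives a foreground-only error, which is one way to separate the motion of the moving object from the static background when comparing architectures.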
