Andew Howard

The channel-spatial attention-based vision transformer network for automated, accurate prediction of crop nitrogen status from UAV imagery

Nov 12, 2021

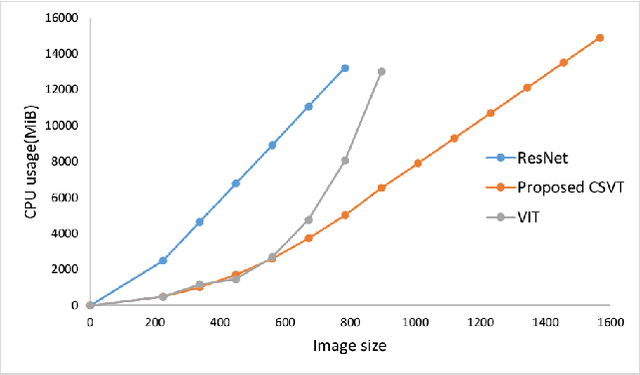

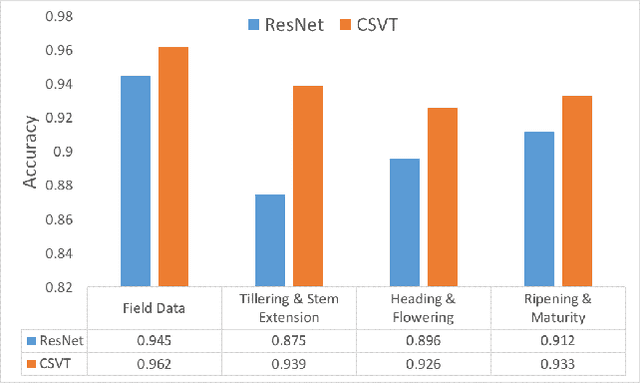

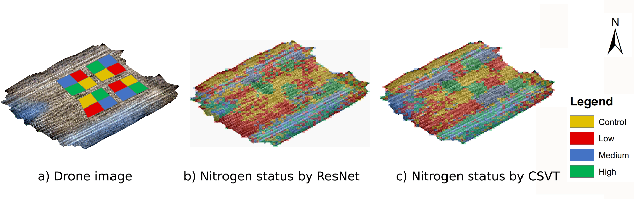

Abstract: Nitrogen (N) fertilizer is routinely applied by farmers to increase crop yields. At present, farmers often over-apply N fertilizer at some locations or time points because they lack high-resolution data on crop N status. N-use efficiency can be low, with the remaining N lost to the environment, resulting in high production costs and environmental pollution. Accurate and timely estimation of N status in crops is crucial to improving the economic and environmental sustainability of cropping systems. Conventional approaches to estimating plant N status, based on laboratory tissue analysis, are time-consuming and destructive. Recent advances in remote sensing and machine learning have shown promise in addressing these challenges in a non-destructive way. We propose a novel deep learning framework, a channel-spatial attention-based vision transformer (CSVT), for estimating crop N status from large images collected by a UAV in a wheat field. Unlike existing work, the proposed CSVT introduces a Channel Attention Block (CAB) and a Spatial Interaction Block (SIB), which allow it to capture nonlinear characteristics of spatial-wise and channel-wise features from UAV digital aerial imagery for accurate N status prediction in wheat crops. Moreover, since acquiring labeled data is time-consuming and costly, local-to-global self-supervised learning is introduced to pre-train the CSVT with extensive unlabeled data. The proposed CSVT has been compared with state-of-the-art models, and tested and validated on both testing and independent datasets. The proposed approach achieved high accuracy (0.96) with good generalizability and reproducibility for wheat N status estimation.
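To give a rough sense of what channel-wise attention means, here is a minimal NumPy sketch of a generic squeeze-and-excitation style gate. It is an illustration only: the abstract does not specify how the CAB is built, so the function name `channel_attention`, the weight matrices `w1` and `w2`, the reduction ratio `r`, and the feature-map shape are all assumptions rather than the authors' design.

```python
import numpy as np


def channel_attention(features, w1, w2):
    """Reweight the channels of a (C, H, W) feature map.

    Generic squeeze-and-excitation style gating, used here as a stand-in;
    this is NOT the CAB described in the paper.
    """
    # Squeeze: global average pool each channel to one descriptor.
    squeezed = features.mean(axis=(1, 2))             # shape (C,)
    # Excite: bottleneck layer with ReLU, then sigmoid gates in (0, 1).
    hidden = np.maximum(w1 @ squeezed, 0.0)           # shape (C // r,)
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))      # shape (C,)
    # Scale: broadcast each channel's gate over its spatial positions.
    return features * gates[:, None, None], gates


# Hypothetical sizes: 8 channels, 16x16 spatial grid, reduction ratio 4.
rng = np.random.default_rng(0)
C, H, W, r = 8, 16, 16, 4
features = rng.normal(size=(C, H, W))
w1 = rng.normal(size=(C // r, C)) * 0.1   # random weights, untrained
w2 = rng.normal(size=(C, C // r)) * 0.1
reweighted, gates = channel_attention(features, w1, w2)
print(reweighted.shape, gates.shape)
```

Each channel is scaled by a single learned gate, so informative spectral or texture channels can be emphasized while others are suppressed. A spatial counterpart would instead compute weights per image location.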
