Anand Kumar

Impatient Users Confuse AI Agents: High-fidelity Simulations of Human Traits for Testing Agents

Oct 06, 2025

Abstract:Despite rapid progress in building conversational AI agents, robustness is still largely untested. Small shifts in user behavior, such as being more impatient, incoherent, or skeptical, can cause sharp drops in agent performance, revealing how brittle current AI agents are. Today's benchmarks fail to capture this fragility: agents may perform well under standard evaluations but degrade spectacularly in more realistic and varied settings. We address this robustness testing gap by introducing TraitBasis, a lightweight, model-agnostic method for systematically stress testing AI agents. TraitBasis learns directions in activation space corresponding to steerable user traits (e.g., impatience or incoherence), which can be controlled, scaled, composed, and applied at inference time without any fine-tuning or extra data. Using TraitBasis, we extend $\tau$-Bench to $\tau$-Trait, where user behaviors are altered via controlled trait vectors. We observe on average a 2%-30% performance degradation on $\tau$-Trait across frontier models, highlighting the lack of robustness of current AI agents to variations in user behavior. Together, these results highlight both the critical role of robustness testing and the promise of TraitBasis as a simple, data-efficient, and compositional tool. By powering simulation-driven stress tests and training loops, TraitBasis opens the door to building AI agents that remain reliable in the unpredictable dynamics of real-world human interactions. We have open-sourced $\tau$-Trait across four domains: airline, retail, telecom, and telehealth, so the community can systematically QA their agents under realistic, behaviorally diverse intents and trait scenarios: https://github.com/collinear-ai/tau-trait.
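As a rough illustration of what "scaling and composing trait directions in activation space" could look like, here is a minimal NumPy sketch on toy data. It is not the TraitBasis implementation: the difference-of-means estimator, the function names `trait_direction` and `steer`, and the toy activations are all assumptions made for this example.

```python
import numpy as np


def trait_direction(with_trait, without_trait):
    """Unit vector pointing from mean 'without' to mean 'with' activations.

    Assumption: a difference-of-means estimator; the abstract does not say
    how TraitBasis actually learns its directions.
    """
    diff = with_trait.mean(axis=0) - without_trait.mean(axis=0)
    return diff / np.linalg.norm(diff)


def steer(hidden, directions, strengths):
    """Return hidden plus a weighted sum of trait directions (composition)."""
    steered = hidden.copy()
    for name, strength in strengths.items():
        steered = steered + strength * directions[name]
    return steered


# Toy activations standing in for a model's hidden states (hypothetical data).
rng = np.random.default_rng(0)
dim = 8
neutral = rng.normal(size=(32, dim))
impatient = neutral + np.eye(dim)[0] * 3.0 + rng.normal(scale=0.1, size=(32, dim))
incoherent = neutral + np.eye(dim)[1] * 3.0 + rng.normal(scale=0.1, size=(32, dim))

directions = {
    "impatience": trait_direction(impatient, neutral),
    "incoherence": trait_direction(incoherent, neutral),
}

hidden = rng.normal(size=dim)
# Scale one trait strongly and mix in a second one more weakly.
steered = steer(hidden, directions, {"impatience": 2.0, "incoherence": 0.5})
```

Because each direction is normalized, the strength passed to `steer` directly sets how far the hidden state moves along that trait, which is one simple way to make trait intensity controllable.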

GS-TransUNet: Integrated 2D Gaussian Splatting and Transformer UNet for Accurate Skin Lesion Analysis

Feb 23, 2025

Abstract:We can achieve fast and consistent early skin cancer detection with recent developments in computer vision and deep learning techniques. However, the existing skin lesion segmentation and classification prediction models run independently, thus missing potential efficiencies from their integrated execution. To unify skin lesion analysis, our paper presents the Gaussian Splatting - Transformer UNet (GS-TransUNet), a novel approach that synergistically combines 2D Gaussian splatting with the Transformer UNet architecture for automated skin cancer diagnosis. Our unified deep learning model efficiently delivers dual-function skin lesion classification and segmentation for clinical diagnosis. Evaluated on ISIC-2017 and PH2 datasets, our network demonstrates superior performance compared to existing state-of-the-art models across multiple metrics through 5-fold cross-validation. Our findings illustrate significant advancements in the precision of segmentation and classification. This integration sets new benchmarks in the field and highlights the potential for further research into multi-task medical image analysis methodologies, promising enhancements in automated diagnostic systems.

IntroStyle: Training-Free Introspective Style Attribution using Diffusion Features

Dec 19, 2024

Abstract:Text-to-image (T2I) models have gained widespread adoption among content creators and the general public. However, this has sparked significant concerns regarding data privacy and copyright infringement among artists. Consequently, there is an increasing demand for T2I models to incorporate mechanisms that prevent the generation of specific artistic styles, thereby safeguarding intellectual property rights. Existing methods for style extraction typically necessitate the collection of custom datasets and the training of specialized models. This, however, is resource-intensive, time-consuming, and often impractical for real-time applications. Moreover, it may not adequately address the dynamic nature of artistic styles and the rapidly evolving landscape of digital art. We present a novel, training-free framework to solve the style attribution problem, using the features produced by a diffusion model alone, without any external modules or retraining. This is denoted as introspective style attribution (IntroStyle) and demonstrates superior performance to state-of-the-art models for style retrieval. We also introduce a synthetic dataset of Style Hacks (SHacks) to isolate artistic style and evaluate fine-grained style attribution performance.

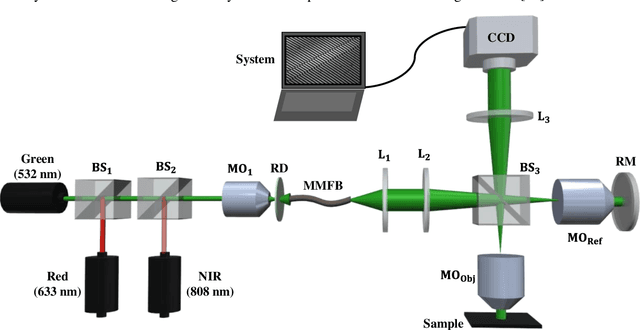

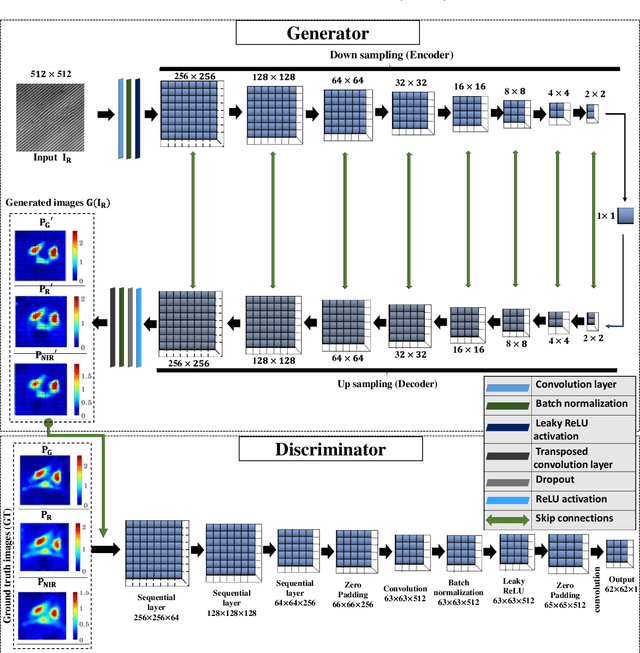

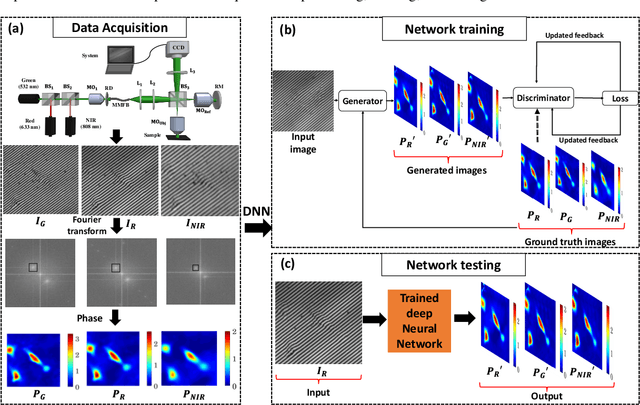

Single-shot multispectral quantitative phase imaging using deep neural network

Jan 05, 2022

Abstract:Multi-spectral quantitative phase imaging (MS-QPI) is a cutting-edge label-free technique for determining morphological changes, refractive index variations and spectroscopic information of specimens. The bottleneck in using this technique to extract quantitative information is the need for more than two measurements to generate MS-QPI images. We propose a single-shot MS-QPI technique using a highly spatially sensitive digital holographic microscope assisted by a deep neural network (DNN). Our method first acquires interferometric datasets corresponding to multiple wavelengths ($\lambda$ = 532, 633 and 808 nm used here). The acquired datasets are used to train a generative adversarial network (GAN) to generate multi-spectral quantitative phase maps from a single input interferogram. The network is trained and validated on two different samples: an optical waveguide and MG63 osteosarcoma cells. Further validation of the framework is performed by comparing the predicted phase maps with experimentally acquired and processed multi-spectral phase maps. The current MS-QPI+DNN framework can further empower spectroscopic QPI to improve chemical specificity without complex instrumentation or color crosstalk.
