Amy Bastine

Source Localization by Multidimensional Steered Response Power Mapping with Sparse Bayesian Learning

May 20, 2024

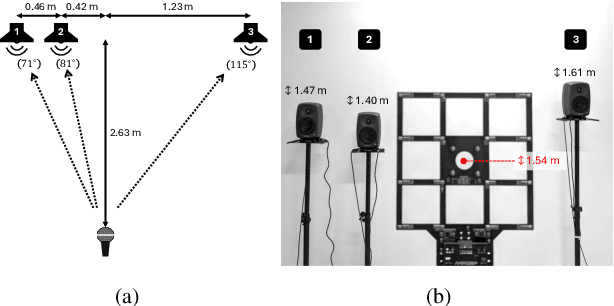

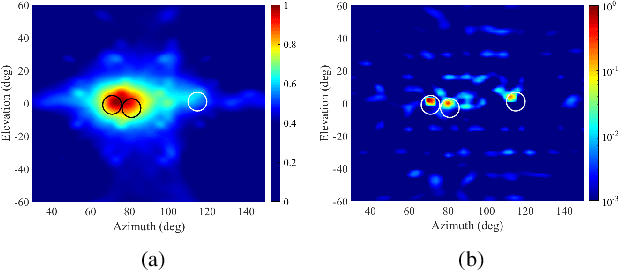

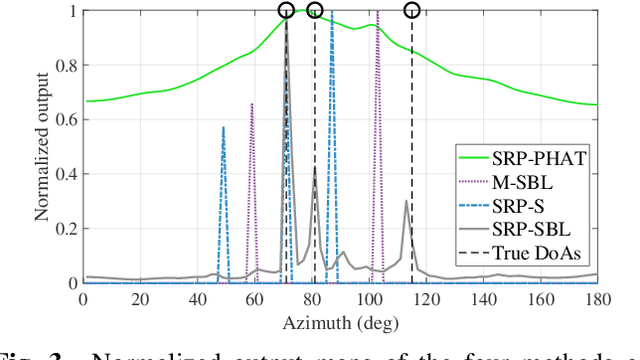

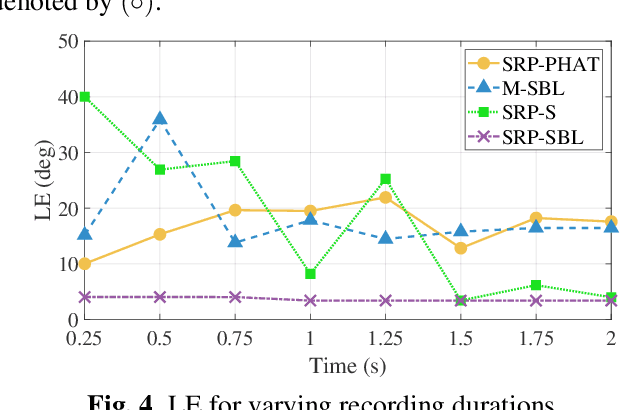

Abstract: We propose an advanced Steered Response Power (SRP) method for localizing multiple sources. While conventional SRP performs well in adverse conditions, it still struggles in scenarios with closely neighboring sources, resulting in ambiguous SRP maps. We address this issue by applying sparsity optimization in SRP to obtain high-resolution maps. Our approach represents SRP maps as multidimensional matrices to preserve time-frequency information and further improve performance in unfavorable conditions. We use multi-dictionary Sparse Bayesian Learning to localize sources without needing prior knowledge of their number. We validate our method through practical experiments with a 16-channel planar microphone array and compare it against three other SRP and sparsity-based methods. Our multidimensional SRP approach outperforms conventional SRP and the current state-of-the-art sparse SRP methods for localizing closely spaced sources in a reverberant room.
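For orientation, the conventional SRP baseline that the abstract contrasts against can be sketched as a narrowband steered response power map. This is a minimal illustration, not the paper's multidimensional or sparse method: the free-field point-source model, the 4 x 4 array geometry, the 2 kHz frequency, the candidate grid, and the function names `steering_vectors` and `srp_map` are all assumptions chosen for the example.

```python
import numpy as np

C = 343.0  # speed of sound in m/s (assumed)


def steering_vectors(mics, points, freq, c=C):
    """Free-field point-source steering vectors, shape (n_points, n_mics)."""
    k = 2.0 * np.pi * freq / c
    dist = np.linalg.norm(points[:, None, :] - mics[None, :, :], axis=2)
    return np.exp(-1j * k * dist) / dist


def srp_map(x, steer):
    """Normalized steered response power |a^H x|^2 / ||a||^2 per candidate."""
    power = np.abs(steer.conj() @ x) ** 2
    return power / np.sum(np.abs(steer) ** 2, axis=1)


# Hypothetical 16-channel planar array: 4 x 4 grid in the z = 0 plane, 5 cm pitch.
g = np.arange(4) * 0.05
mx, my = np.meshgrid(g, g, indexing="ij")
mics = np.stack([mx.ravel(), my.ravel(), np.zeros(16)], axis=1)

# Candidate source positions on the plane z = 1 m (21 x 21 grid).
axis = np.linspace(-1.0, 1.0, 21)
px, py = np.meshgrid(axis, axis, indexing="ij")
points = np.stack([px.ravel(), py.ravel(), np.ones(px.size)], axis=1)

steer = steering_vectors(mics, points, freq=2000.0)

# Noise-free snapshot from a single source placed on one grid point.
true_idx = 13 * 21 + 8
x = steer[true_idx]

srp = srp_map(x, steer)
est_idx = int(np.argmax(srp))
print(est_idx == true_idx)
```

With a single noise-free source the map peaks at the true grid point. With two nearby sources the broad main lobes merge, which is the ambiguity the abstract describes.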

A Two-Step Approach for Narrowband Source Localization in Reverberant Rooms

Sep 25, 2023

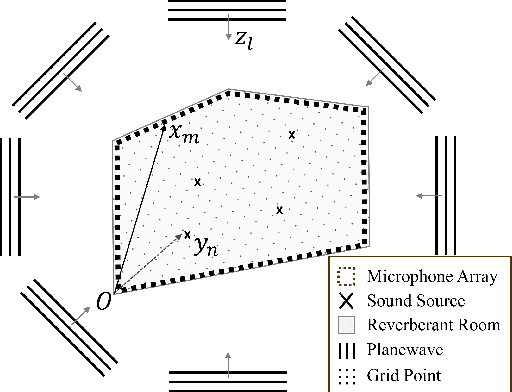

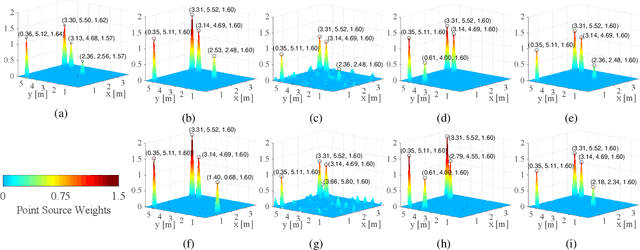

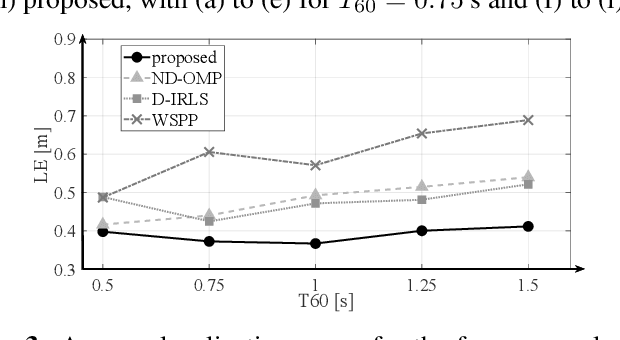

Abstract: This paper presents a two-step approach for narrowband source localization within reverberant rooms. The first step performs dereverberation by modeling the homogeneous component of the sound field as an equivalent plane-wave decomposition using Iteratively Reweighted Least Squares (IRLS), while the second step performs source localization by modeling the dereverberated component as a sparse point-source distribution using Orthogonal Matching Pursuit (OMP). The proposed method enhances localization accuracy with fewer measurements, particularly in environments with strong reverberation. A numerical simulation in a conference room scenario, using a uniform microphone array affixed to the wall, demonstrates real-world feasibility. Notably, the proposed method and microphone placement effectively localize sound sources within the 2D horizontal plane without requiring prior knowledge of boundary conditions and room geometry, making it versatile for application in different room types.
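The second step relies on Orthogonal Matching Pursuit, which can be sketched generically as follows. This is an illustrative sketch only: the dictionary here is a small identity-plus-Hadamard matrix chosen because its low coherence makes 2-sparse recovery reliable, whereas the paper's dictionary would be built from point-source responses. The function name `omp` and all numerical values are assumptions.

```python
import numpy as np


def omp(D, y, n_nonzero):
    """Greedy sparse recovery: pick the atom most correlated with the
    residual, then refit all selected atoms by least squares."""
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(n_nonzero):
        corr = np.abs(D.T @ residual)
        if support:
            corr[support] = 0.0  # never reselect an atom
        support.append(int(np.argmax(corr)))
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    x = np.zeros(D.shape[1])
    x[support] = coef
    return x, support


# Illustrative dictionary: 16 identity atoms plus 16 normalized Hadamard atoms.
H = np.array([[1.0]])
for _ in range(4):
    H = np.kron(H, np.array([[1.0, 1.0], [1.0, -1.0]]))
D = np.hstack([np.eye(16), H / 4.0])

# Two active atoms stand in for two point sources.
x_true = np.zeros(32)
x_true[3] = 1.5
x_true[21] = -2.0
y = D @ x_true

x_hat, support = omp(D, y, n_nonzero=2)
print(sorted(support))
```

In a localization setting each column of `D` would correspond to a candidate source position, so the recovered support indicates where the sources are.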

An Active Noise Control System Based on Soundfield Interpolation Using a Physics-informed Neural Network

Sep 19, 2023

Abstract: Conventional multiple-point active noise control (ANC) systems require placing error microphones within the region of interest (ROI), inconveniencing users. This paper designs a feasible monitoring microphone arrangement placed outside the ROI, providing a user with more freedom of movement. The soundfield within the ROI is interpolated from the microphone signals using a physics-informed neural network (PINN). The PINN exploits the acoustic wave equation to assist soundfield interpolation with a limited number of monitoring microphones, and demonstrates better interpolation performance than the spherical harmonic method in simulations. An ANC system is designed to use the interpolated signal to reduce the noise signal within the ROI. The PINN-assisted ANC system reduces noise more than the multiple-point ANC system in simulations.

Head-Related Transfer Function Interpolation with a Spherical CNN

Sep 15, 2023

Abstract: Head-related transfer functions (HRTFs) are crucial for spatial soundfield reproduction in virtual reality applications. However, obtaining personalized, high-resolution HRTFs is a time-consuming and costly task. Recently, deep learning-based methods showed promise in interpolating high-resolution HRTFs from sparse measurements. Some of these methods treat HRTF interpolation as an image super-resolution task, which neglects spatial acoustic features. This paper proposes a spherical convolutional neural network method for HRTF interpolation. The proposed method realizes the convolution process by decomposing and reconstructing HRTFs through spherical harmonics (SHs). The SHs, an orthogonal function set defined on a sphere, allow the convolution layers to effectively capture the spatial features of HRTFs, which are sampled on a sphere. Simulation results demonstrate the effectiveness of the proposed method in achieving accurate interpolation from sparse measurements, outperforming the SH method and learning-based methods.

Sound Field Estimation around a Rigid Sphere with Physics-informed Neural Network

Jul 26, 2023

Abstract: Accurate estimation of the sound field around a rigid sphere necessitates adequate sampling on the sphere, which may not always be possible. To overcome this challenge, this paper proposes a method for sound field estimation based on a physics-informed neural network. This approach integrates physical knowledge into the architecture and training process of the network. In contrast to other learning-based methods, the proposed method incorporates additional constraints derived from the Helmholtz equation and the zero radial velocity condition on the rigid sphere. Consequently, it can generate physically feasible estimations without requiring a large dataset. In contrast to the spherical harmonic-based method, the proposed approach has better fitting abilities and circumvents the ill-conditioning caused by truncation. Simulation results demonstrate the effectiveness of the proposed method in achieving accurate sound field estimations from limited measurements, outperforming the spherical harmonic method and plane-wave decomposition method.
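The Helmholtz constraint mentioned in this abstract amounts to penalizing the residual of the equation ∇²p + k²p = 0 at sample points. The sketch below evaluates that residual for an analytic free-field point-source field using finite differences. It is only an illustration of the quantity a physics-informed loss would drive toward zero: a real PINN would differentiate the network output with automatic differentiation, and the function names, frequency, step size, and evaluation point are assumptions.

```python
import numpy as np


def point_source(x, y, z, k):
    """Free-field point-source pressure exp(-jkr) / (4 pi r)."""
    r = np.sqrt(x ** 2 + y ** 2 + z ** 2)
    return np.exp(-1j * k * r) / (4.0 * np.pi * r)


def helmholtz_residual(field, pos, k, h=1e-3):
    """Central-difference estimate of laplacian(p) + k^2 p at one point."""
    x, y, z = pos
    centre = field(x, y, z, k)
    neighbours = (
        field(x + h, y, z, k) + field(x - h, y, z, k)
        + field(x, y + h, z, k) + field(x, y - h, z, k)
        + field(x, y, z + h, k) + field(x, y, z - h, k)
    )
    laplacian = (neighbours - 6.0 * centre) / h ** 2
    return laplacian + k ** 2 * centre


k = 2.0 * np.pi * 500.0 / 343.0  # wavenumber at 500 Hz, c = 343 m/s (assumed)
pos = (0.8, -0.5, 0.3)           # evaluation point away from the source

residual = helmholtz_residual(point_source, pos, k)
scale = k ** 2 * abs(point_source(*pos, k))
relative_residual = abs(residual) / scale
print(relative_residual < 1e-3)
```

A physically valid field gives a residual near zero relative to k²|p|, so a network estimate with a large residual at such points is flagged as physically implausible even where no measurement exists.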
