Amir Iranmanesh

Text Mining using Nonnegative Matrix Factorization and Latent Semantic Analysis

Nov 12, 2019

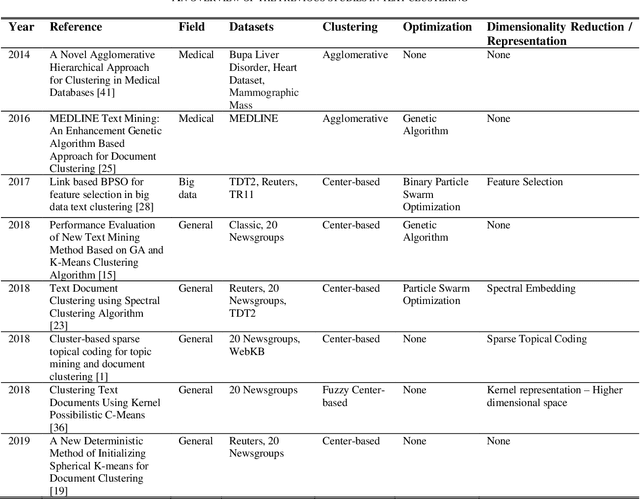

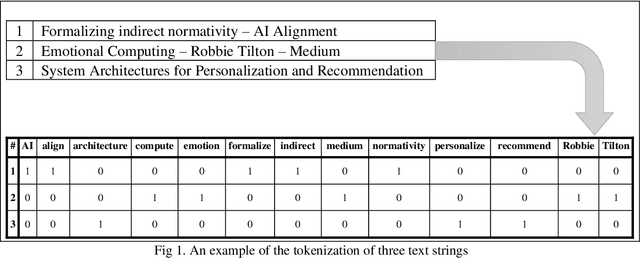

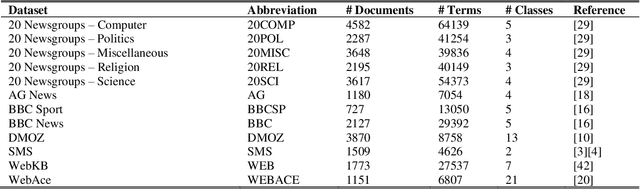

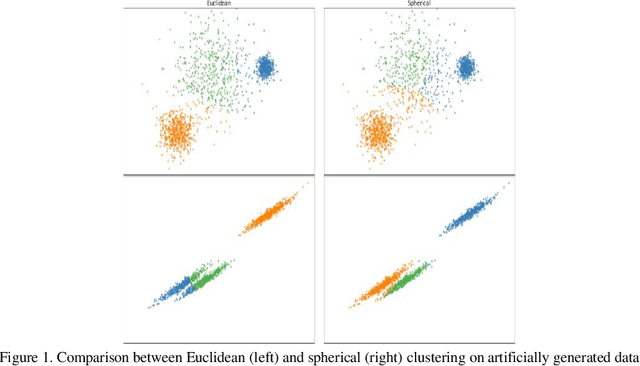

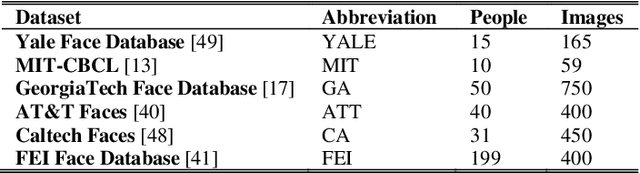

Abstract: Text clustering is arguably one of the most important topics in modern data mining. However, text data require tokenization, which typically yields a very large and highly sparse term-document matrix that is difficult to process with conventional machine learning algorithms. Methods such as Latent Semantic Analysis have helped mitigate this issue, but they are not completely stable in practice. We therefore propose a new feature agglomeration method based on Nonnegative Matrix Factorization (NMF). NMF is employed to separate the terms into groups, and each group's term vectors are then agglomerated into a new feature vector. Together, these feature vectors form a new feature space that is much more suitable for clustering. In addition, we propose a new deterministic initialization for spherical K-Means, which proves very useful for this type of data. To evaluate the proposed method, we compare it with some of the latest research in this field, as well as with some of the most widely practiced methods. Our experiments show that the proposed method either significantly improves clustering performance or maintains the performance of other methods while improving the stability of the results.
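The agglomeration idea in this abstract (factor the matrix with NMF, assign each term to a group, merge each group's term columns into one feature) can be illustrated with a small sketch. It is not the authors' implementation. It assumes a documents-by-terms matrix, uses standard Lee-Seung multiplicative updates for the Frobenius-loss NMF, assigns each term to the component with the largest loading in `H`, and agglomerates by summing the term columns in each group. The summation rule and the function names `nmf` and `agglomerate` are hypothetical choices made for illustration.

```python
import random


def nmf(X, k, iters=200, seed=0, eps=1e-9):
    """Factor a nonnegative matrix X (m x n) as W (m x k) times H (k x n)
    with Lee-Seung multiplicative updates for the Frobenius loss."""
    rng = random.Random(seed)
    m, n = len(X), len(X[0])
    W = [[rng.random() + eps for _ in range(k)] for _ in range(m)]
    H = [[rng.random() + eps for _ in range(n)] for _ in range(k)]
    for _ in range(iters):
        # H <- H * (W^T X) / (W^T W H)
        WtX = [[sum(W[i][a] * X[i][j] for i in range(m)) for j in range(n)] for a in range(k)]
        WtW = [[sum(W[i][a] * W[i][b] for i in range(m)) for b in range(k)] for a in range(k)]
        WtWH = [[sum(WtW[a][b] * H[b][j] for b in range(k)) for j in range(n)] for a in range(k)]
        H = [[H[a][j] * WtX[a][j] / (WtWH[a][j] + eps) for j in range(n)] for a in range(k)]
        # W <- W * (X H^T) / (W H H^T)
        XHt = [[sum(X[i][j] * H[a][j] for j in range(n)) for a in range(k)] for i in range(m)]
        HHt = [[sum(H[a][j] * H[b][j] for j in range(n)) for b in range(k)] for a in range(k)]
        WHHt = [[sum(W[i][b] * HHt[b][a] for b in range(k)) for a in range(k)] for i in range(m)]
        W = [[W[i][a] * XHt[i][a] / (WHHt[i][a] + eps) for a in range(k)] for i in range(m)]
    return W, H


def agglomerate(X, k, **nmf_kwargs):
    """Hypothetical agglomeration step: group terms by their dominant NMF
    component, then sum each group's term columns into one new feature."""
    _, H = nmf(X, k, **nmf_kwargs)
    n_terms = len(X[0])
    # Column j of H holds term j's loadings on the k components.
    groups = [max(range(k), key=lambda a: H[a][j]) for j in range(n_terms)]
    Z = [[0.0] * k for _ in X]
    for i, row in enumerate(X):
        for j, value in enumerate(row):
            Z[i][groups[j]] += value
    return groups, Z
```

Each document ends up described by `k` dense features instead of one sparse column per term. Summing preserves each document's total term weight. A mean or a loading-weighted sum would be equally plausible agglomeration rules under this sketch's assumptions.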

DISCERN: Diversity-based Selection of Centroids for k-Estimation and Rapid Non-stochastic Clustering

Oct 14, 2019

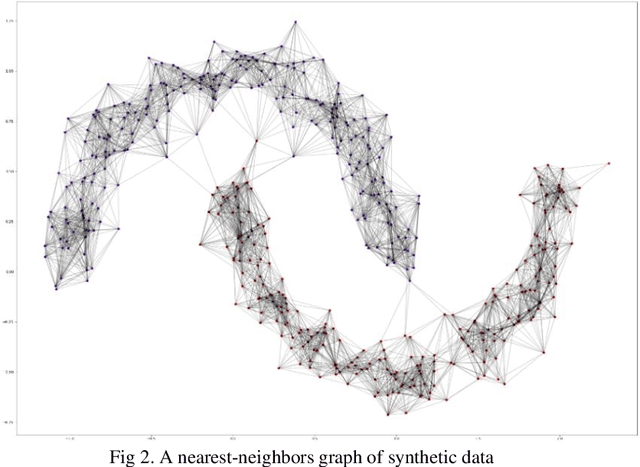

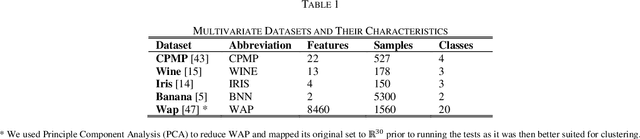

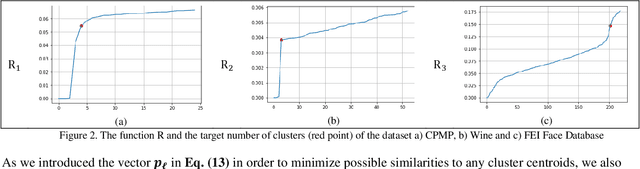

Abstract: Clustering is one of the most ubiquitously applied unsupervised learning methods, but it is also known to have a few disadvantages. More specifically, parameters such as the number of clusters and the neighborhood radius call the "unsupervised" nature of these algorithms into question. Moreover, the stochastic nature of many of these algorithms is a considerable weakness. To address these issues, we propose DISCERN, which can serve as an initialization algorithm for K-Means by finding suitable centroids that increase the performance of K-Means. The algorithm can also estimate the number of clusters if needed. It does all of this while remaining completely robust, returning the same results on every run. We ran experiments with the proposed method on multiple datasets, and the results show its clear superiority in clustering performance, computational time and robustness over randomized K-Means and K-Means++ initialization. In addition, we discuss its superiority in estimating the number of clusters and prove its lower complexity compared with methods such as the elbow and silhouette methods.
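The abstract does not spell out DISCERN's selection rule. The sketch below therefore substitutes a generic deterministic, diversity-based (maximin) seeding on cosine similarity to show why such an initializer returns the same result on every run, and then feeds it into spherical K-Means. The seeding starts from the least similar pair of points and repeatedly adds the point whose highest similarity to any chosen centroid is lowest. This is a stand-in, not DISCERN itself, and it does not estimate the number of clusters. The names `maximin_init` and `spherical_kmeans` are hypothetical.

```python
import math


def normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def cos(u, v):
    """Dot product, which equals cosine similarity for unit-length u and v."""
    return sum(a * b for a, b in zip(u, v))


def maximin_init(X, k):
    """Hypothetical deterministic seeding on unit vectors X
    (requires len(X) >= 2 and k <= len(X)); no randomness is involved."""
    n = len(X)
    # Seed with the least similar (most diverse) pair of points.
    i0, j0 = min(((i, j) for i in range(n) for j in range(i + 1, n)),
                 key=lambda p: cos(X[p[0]], X[p[1]]))
    chosen = [i0, j0]
    while len(chosen) < k:
        rest = [i for i in range(n) if i not in chosen]
        # Pick the point least similar to its closest already-chosen centroid.
        chosen.append(min(rest, key=lambda i: max(cos(X[i], X[c]) for c in chosen)))
    return chosen[:k]


def spherical_kmeans(X, k, iters=50):
    """Spherical K-Means (cosine similarity, unit-norm centroids)
    seeded with maximin_init, so repeated runs give identical results."""
    X = [normalize(x) for x in X]
    centroids = [X[i] for i in maximin_init(X, k)]
    labels = None
    for _ in range(iters):
        # Assign each point to the centroid with the highest cosine similarity.
        new_labels = [max(range(k), key=lambda c: cos(x, centroids[c])) for x in X]
        if new_labels == labels:
            break  # assignments are stable, so the centroids are too
        labels = new_labels
        # Each centroid becomes the normalized mean direction of its members.
        for c in range(k):
            members = [x for x, lab in zip(X, labels) if lab == c]
            if members:
                centroids[c] = normalize([sum(col) for col in zip(*members)])
    return labels, centroids
```

For example, `spherical_kmeans([[1.0, 0.1], [0.9, 0.05], [0.05, 1.0], [0.1, 0.9]], 2)` places the first two points in one cluster and the last two in the other. Because nothing is sampled, every call returns the same labels, unlike randomized K-Means or K-Means++ seeding.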
