Amey Karkare

Targeted Example Generation for Compilation Errors

Sep 02, 2019

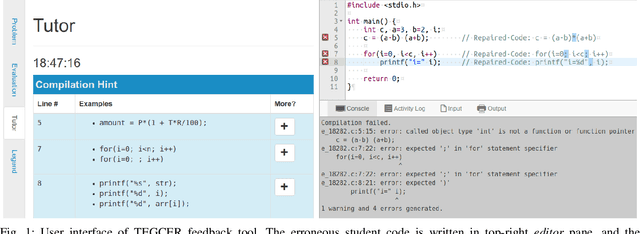

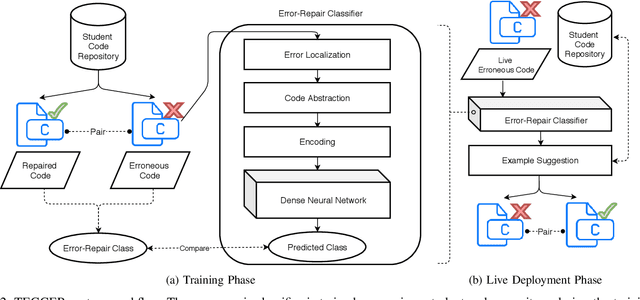

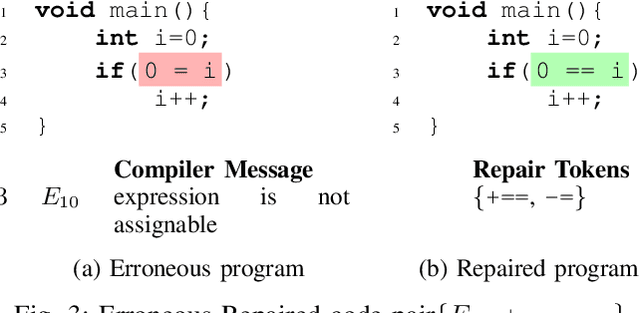

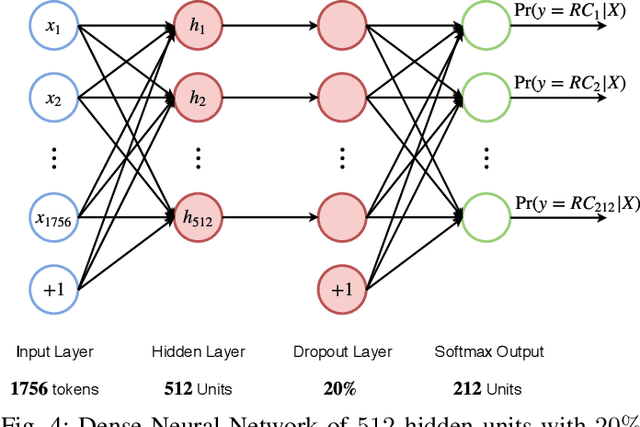

Abstract: We present TEGCER, an automated feedback tool for novice programmers. TEGCER uses supervised classification to match compilation errors in new code submissions with relevant errors previously submitted by other students. The dense neural network that performs this classification is trained on 15000+ error-repair code examples. The proposed model achieves a Pred@3 classification accuracy of 97.7% on the test set across 212 error category labels. Using this model as its base, TEGCER presents students, on demand, with the closest relevant examples of solutions for their specific error.
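The Pred@3 figure quoted above is usually read as top-3 accuracy: a prediction counts as correct when the true error category is among the three highest-scoring labels. The sketch below illustrates that reading only; it is not TEGCER's code, and the function name `pred_at_k` and the toy scores are assumptions made for illustration.

```python
def pred_at_k(scores, labels, k=3):
    """Fraction of samples whose true label is among the k top-scoring classes.

    scores: list of per-class score lists, one per sample (hypothetical model output).
    labels: list of true class indices, one per sample.
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k classes with the highest scores for this sample.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


# Toy data: 2 samples, 4 classes (illustrative values, not from the paper).
toy_scores = [
    [0.1, 0.2, 0.3, 0.4],  # top-3 classes are 3, 2, 1
    [0.4, 0.3, 0.2, 0.1],  # top-3 classes are 0, 1, 2
]
toy_labels = [0, 0]
print(pred_at_k(toy_scores, toy_labels, k=3))
```

With 212 labels, as in the abstract, each row of `scores` would hold 212 entries; the computation is otherwise unchanged.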

TipsC: Tips and Corrections for programming MOOCs

Apr 02, 2018

Abstract: With the widespread adoption of MOOCs in academic institutions, it has become imperative to find better techniques for solving the tutoring and grading problems posed by programming courses. With programming becoming the new 'writing', ensuring that a large section of society is exposed to it is a challenge. Because students' learning abilities vary, the course instructor must ensure that everyone can cope with the material and receive adequate help in completing assignments while learning along the way. We introduce TipsC for this task. By analyzing a large number of correct submissions, TipsC can search for correct programs that resemble a given incorrect solution. Without revealing the actual code, TipsC then suggests changes to the incorrect code to help the student fix logical runtime errors. TipsC also serves as a cluster visualization tool for the instructor, revealing different patterns in user submissions. We evaluated the effectiveness of TipsC's clustering algorithm on data collected from previous offerings of an introductory programming course conducted at IIT Kanpur, where the grades were given by human TAs. The results show that the weighted average variance of marks within clusters of similar submissions is 47% lower than the variance obtained when all programs are grouped together.
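One plausible reading of the "weighted average variance of marks" is the size-weighted mean of the per-cluster variances, compared against the variance of all marks taken as a single group. The sketch below follows that reading; the use of population variance, the function name `weighted_average_variance`, and the sample marks are all assumptions, since the abstract does not spell out the formula.

```python
from statistics import pvariance


def weighted_average_variance(clusters):
    """Size-weighted mean of per-cluster population variances.

    clusters: list of non-empty lists of marks (assumed input shape).
    """
    total = sum(len(c) for c in clusters)
    return sum(len(c) * pvariance(c) for c in clusters) / total


# Hypothetical marks, grouped by similarity of the submissions.
clustered = [[2, 4], [10, 10, 10, 10]]
# Baseline: every submission in one group.
ungrouped = [[m for c in clustered for m in c]]

within = weighted_average_variance(clustered)
baseline = weighted_average_variance(ungrouped)
print(f"reduction: {100 * (1 - within / baseline):.1f}%")
```

A lower within-cluster value relative to the baseline indicates that submissions placed in the same cluster tended to receive similar marks from the TAs.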