Ali Karaali

SVBR-NET: A Non-Blind Spatially Varying Defocus Blur Removal Network

Jun 26, 2022

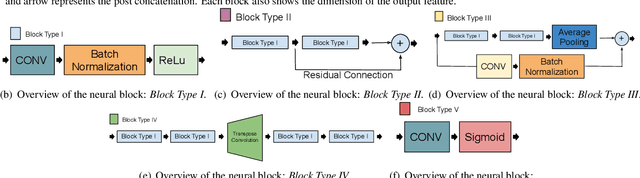

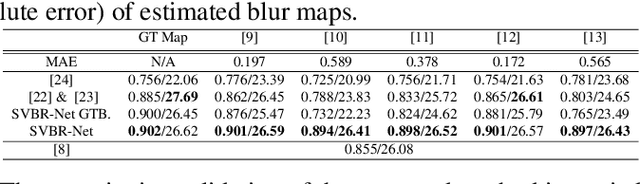

Abstract: Defocus blur is a physical consequence of the optical sensors used in most cameras. Although it can be used as a photographic style, it is commonly viewed as an image degradation modeled as the convolution of a sharp image with a spatially-varying blur kernel. Motivated by the advance of blur estimation methods in the past years, we propose a non-blind approach for image deblurring that can deal with spatially-varying kernels. We introduce two encoder-decoder sub-networks that are fed with the blurry image and the estimated blur map, respectively, and produce as output the deblurred (deconvolved) image. Each sub-network contains several skip connections that allow data propagation between layers that are far apart, as well as inter-subnetwork skip connections that ease communication between the modules. The network is trained with synthetic blur kernels that are augmented to emulate blur maps produced by existing blur estimation methods, and our experimental results show that our method works well when combined with a variety of blur estimation methods.
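The degradation model mentioned in this abstract (a sharp image convolved with a spatially-varying kernel) can be illustrated with a small NumPy sketch. This is not the paper's code or training pipeline: it assumes Gaussian kernels, a per-pixel blur map given as Gaussian standard deviations, and a coarse approximation in which the image is blurred uniformly at a few quantized levels and each pixel picks the level closest to its blur value. The names `gaussian_kernel`, `blur_uniform`, `blur_spatially_varying`, and the `levels` parameter are hypothetical.

```python
import numpy as np

def gaussian_kernel(sigma):
    """1-D normalized Gaussian kernel; sigma <= 0 yields the identity kernel."""
    if sigma <= 0:
        return np.array([1.0])
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur_uniform(image, sigma):
    """Blur a 2-D grayscale image with one Gaussian (separable, edge-padded)."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    if r == 0:
        return image.astype(float).copy()
    padded = np.pad(image.astype(float), r, mode="edge")
    # Convolve along rows, then along columns; "valid" undoes the padding.
    tmp = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, tmp)

def blur_spatially_varying(image, sigma_map, levels):
    """Approximate spatially-varying blur by per-pixel selection among
    uniformly blurred copies (assumption: quantized Gaussian blur levels)."""
    levels = np.asarray(levels, dtype=float)
    stack = np.stack([blur_uniform(image, s) for s in levels])      # (L, H, W)
    # Index of the quantized level nearest to each pixel's blur value.
    idx = np.abs(sigma_map[None] - levels[:, None, None]).argmin(axis=0)
    return np.take_along_axis(stack, idx[None], axis=0)[0]

# Usage: a vertical step edge, sharp in the top half and blurred in the bottom half.
img = np.zeros((16, 16))
img[:, 8:] = 1.0
sigma_map = np.zeros((16, 16))
sigma_map[8:, :] = 2.0
blurred = blur_spatially_varying(img, sigma_map, levels=[0.0, 1.0, 2.0])
```

A non-blind deblurring method in the sense of the abstract would receive both `blurred` and an estimate of `sigma_map` as input and try to recover `img`.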

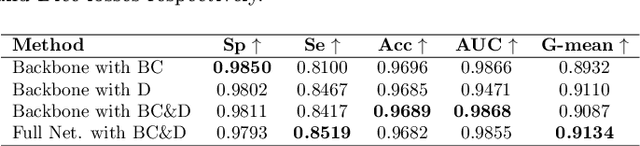

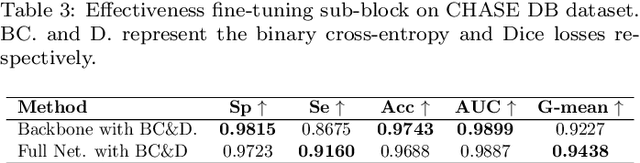

DR-VNet: Retinal Vessel Segmentation via Dense Residual UNet

Nov 08, 2021

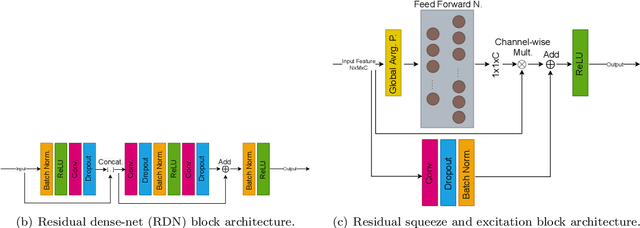

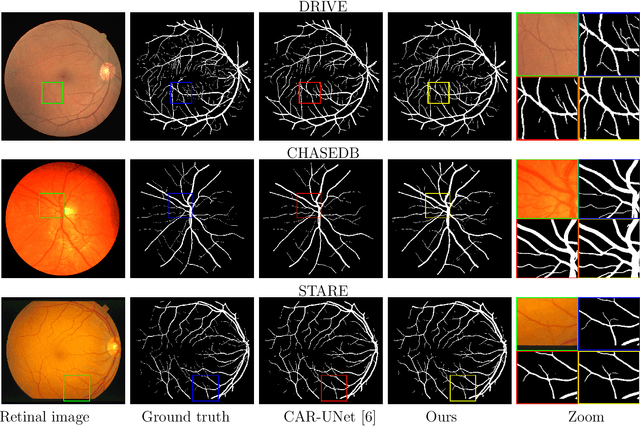

Abstract: Accurate retinal vessel segmentation is an important task for many computer-aided diagnosis systems. Yet, it is still a challenging problem due to the complex vessel structures of the eye. Numerous vessel segmentation methods have been proposed recently; however, more research is needed to deal with the poor segmentation of thin and tiny vessels. To address this, we propose a new deep learning pipeline combining the efficiency of residual dense net blocks and residual squeeze-and-excitation blocks. We experimentally validate our approach on three datasets and show that our pipeline outperforms current state-of-the-art techniques on the sensitivity metric, which is relevant for assessing how well small vessels are captured.
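For reference, sensitivity (the true-positive rate) is the fraction of true vessel pixels that the segmentation marks as vessel, TP / (TP + FN). The sketch below is a minimal illustration on binary masks, not the paper's evaluation code; the function name `sensitivity` and the choice to return NaN when the ground truth contains no vessel pixels are assumptions.

```python
import numpy as np

def sensitivity(pred, target):
    """True-positive rate TP / (TP + FN) for binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.logical_and(pred, target).sum()    # vessel pixels correctly detected
    fn = np.logical_and(~pred, target).sum()   # vessel pixels that were missed
    if tp + fn == 0:
        return float("nan")                    # assumption: undefined without positives
    return tp / (tp + fn)

# Usage: three true vessel pixels, two of them detected.
target = np.array([1, 1, 1, 0])
pred = np.array([1, 0, 1, 1])
score = sensitivity(pred, target)
```

Because false positives do not enter the formula, missed thin vessels lower this score directly, which is why the metric is informative for small-vessel capture.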

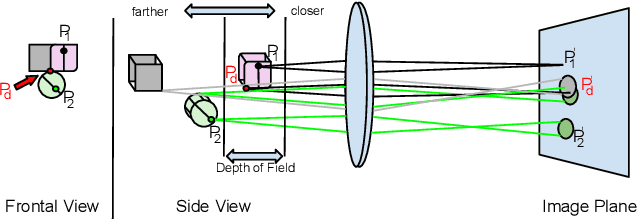

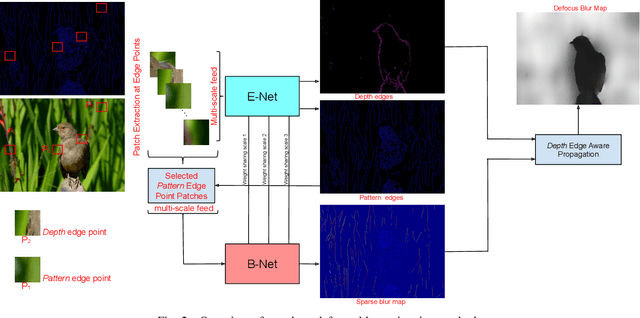

Deep Multi-Scale Feature Learning for Defocus Blur Estimation

Sep 24, 2020

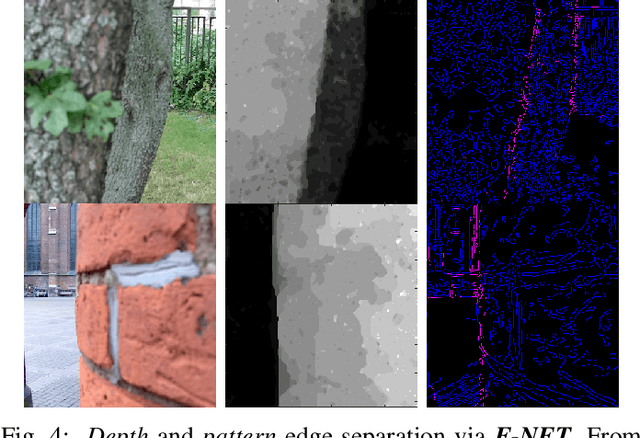

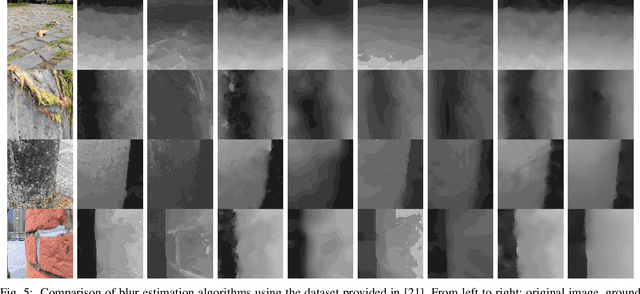

Abstract: This paper presents an edge-based defocus blur estimation method from a single defocused image. We first distinguish edges that lie at depth discontinuities (called depth edges, for which the blur estimate is ambiguous) from edges that lie at approximately constant depth regions (called pattern edges, for which the blur estimate is well-defined). Then, we estimate the defocus blur amount at pattern edges only, and explore an interpolation scheme based on guided filters that prevents data propagation across the detected depth edges to obtain a dense blur map with well-defined object boundaries. Both tasks (edge classification and blur estimation) are performed by deep convolutional neural networks (CNNs) that share weights to learn meaningful local features from multi-scale patches centered at edge locations. Experiments on naturally defocused images show that the proposed method outperforms state-of-the-art (SOTA) methods both qualitatively and quantitatively, with a good compromise between running time and accuracy.
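The "multi-scale patches centered at edge locations" can be pictured as several square crops of different sizes around the same edge pixel. The following sketch only illustrates that idea; the patch sizes, the edge-replicating padding at image borders, and the name `extract_multiscale_patches` are assumptions and are not taken from the paper.

```python
import numpy as np

def extract_multiscale_patches(image, y, x, sizes=(15, 27, 41)):
    """Return square patches of the given odd sizes, all centered at (y, x).

    The sizes are hypothetical; borders are handled by replicating edge pixels.
    """
    if any(s % 2 == 0 for s in sizes):
        raise ValueError("patch sizes must be odd so the patch has a center pixel")
    r = max(sizes) // 2
    padded = np.pad(image, r, mode="edge")
    cy, cx = y + r, x + r                      # center in padded coordinates
    patches = []
    for s in sizes:
        h = s // 2
        patches.append(padded[cy - h:cy + h + 1, cx - h:cx + h + 1])
    return patches

# Usage: patches around an edge pixel near the image corner.
image = np.arange(64 * 64, dtype=float).reshape(64, 64)
patches = extract_multiscale_patches(image, y=2, x=5)
```

In a pipeline like the one described, each patch would typically be resized or fed to a scale-specific branch before the features are combined.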
