Alfiia Galimzianova

Dermatological Diagnosis Explainability Benchmark for Convolutional Neural Networks

Feb 23, 2023

Abstract: In recent years, large strides have been taken in developing machine learning methods for dermatological applications, supported in part by the success of deep learning (DL). To date, diagnosing diseases from images is one of the most explored applications of DL within dermatology. Convolutional neural networks (ConvNets) are the most common DL method in medical imaging due to their training efficiency and accuracy, although they are often described as black boxes because of their limited explainability. One popular way to obtain insight into a ConvNet's decision mechanism is gradient class activation maps (Grad-CAM). A quantitative evaluation of Grad-CAM explainability has recently been made possible by the release of DermXDB, a skin disease diagnosis explainability dataset which enables explainability benchmarking of ConvNet architectures. In this paper, we perform a literature review to identify the most common ConvNet architectures used for this task, and compare their Grad-CAM explanations with the explanation maps provided by DermXDB. We identified 11 architectures: DenseNet121, EfficientNet-B0, InceptionV3, InceptionResNetV2, MobileNet, MobileNetV2, NASNetMobile, ResNet50, ResNet50V2, VGG16, and Xception. We pre-trained all architectures on a clinical skin disease dataset, and fine-tuned them on a DermXDB subset. Validation results on the DermXDB holdout subset show explainability F1 scores between 0.35 and 0.46, with Xception displaying the highest explainability performance. NASNetMobile reports the highest characteristic-level explainability sensitivity, despite its mediocre diagnosis performance. These results highlight the importance of choosing the right architecture for the desired application and target market, underline the need for additional explainability datasets, and further confirm the need for explainability benchmarking that relies on quantitative analyses.
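To make the idea of scoring an explanation map against an expert map concrete, here is a minimal sketch of a pixel-wise F1 computation. It is an illustration only: the 0.5 threshold, the function names `binarize` and `pixel_f1`, and the toy 3x3 arrays are assumptions, and the abstract does not say how the paper binarizes Grad-CAM maps or aggregates scores across images.

```python
def binarize(heatmap, threshold=0.5):
    """Turn a heatmap with values in [0, 1] into a binary mask.

    The threshold is an assumed value chosen for illustration.
    """
    return [[1 if value >= threshold else 0 for value in row] for row in heatmap]


def pixel_f1(pred_mask, ref_mask):
    """Pixel-wise F1 between a predicted binary mask and a reference mask."""
    tp = fp = fn = 0
    for pred_row, ref_row in zip(pred_mask, ref_mask):
        for pred, ref in zip(pred_row, ref_row):
            if pred and ref:
                tp += 1  # highlighted by both model and expert
            elif pred and not ref:
                fp += 1  # highlighted by the model only
            elif ref and not pred:
                fn += 1  # highlighted by the expert only
    denominator = 2 * tp + fp + fn
    # Return 0.0 when neither mask highlights anything, to avoid dividing by zero.
    return 2 * tp / denominator if denominator else 0.0


# Hypothetical activation map and expert annotation for a 3x3 image.
heatmap = [
    [0.9, 0.8, 0.1],
    [0.7, 0.2, 0.0],
    [0.1, 0.0, 0.0],
]
expert_mask = [
    [1, 1, 0],
    [0, 0, 0],
    [0, 0, 0],
]

score = pixel_f1(binarize(heatmap), expert_mask)
print(f"pixel-wise F1: {score:.2f}")
```

In this toy case the model highlights three pixels, two of which the expert also marked, so precision is 2/3, recall is 1, and the F1 score is 0.8.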

Explainable Image Quality Assessments in Teledermatological Photography

Sep 10, 2022

Abstract: Image quality is a crucial factor in the success of teledermatological consultations. However, up to 50% of images sent by patients have quality issues, thus increasing the time to diagnosis and treatment. An automated, easily deployable, explainable method for assessing image quality is necessary to improve the current teledermatological consultation flow. We introduce ImageQX, a convolutional neural network trained for image quality assessment with a learning mechanism for identifying the most common poor image quality explanations: bad framing, bad lighting, blur, low resolution, and distance issues. ImageQX was trained on 26635 photographs and validated on 9874 photographs, each annotated with image quality labels and poor image quality explanations by up to 12 board-certified dermatologists. The photographic images were taken between 2017 and 2019 using a mobile skin disease tracking application accessible worldwide. Our method achieves expert-level performance for both image quality assessment and poor image quality explanation. For image quality assessment, ImageQX obtains a macro F1-score of 0.73, which places it within one standard deviation of the pairwise inter-rater F1-score of 0.77. For poor image quality explanations, our method obtains F1-scores between 0.37 and 0.70, similar to the inter-rater pairwise F1-scores of between 0.24 and 0.83. Moreover, with a size of only 15 MB, ImageQX is easily deployable on mobile devices. With an image quality detection performance similar to that of dermatologists, incorporating ImageQX into the teledermatology flow can reduce the image evaluation burden on dermatologists, while at the same time reducing the time to diagnosis and treatment for patients. We introduce ImageQX, a first-of-its-kind explainable image quality assessor which leverages domain expertise to improve the quality and efficiency of dermatological care in a virtual setting.
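For readers unfamiliar with the macro F1-score reported above, the following sketch shows how it is computed: one F1 score per class, then an unweighted mean. The label names and the toy predictions are hypothetical and do not come from the ImageQX data; the helper names `f1_for_class` and `macro_f1` are likewise illustrative.

```python
def f1_for_class(y_true, y_pred, label):
    """F1 score for one class, treating that class as the positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    if not labels:
        return 0.0
    return sum(f1_for_class(y_true, y_pred, label) for label in labels) / len(labels)


# Hypothetical quality labels for four photographs.
reference = ["good", "good", "poor", "poor"]
predicted = ["good", "poor", "poor", "poor"]

print(f"macro F1: {macro_f1(reference, predicted):.3f}")
```

Because every class contributes equally to the mean, a rare class with poor performance lowers the macro F1-score as much as a common one would.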

DermX: an end-to-end framework for explainable automated dermatological diagnosis

Feb 14, 2022

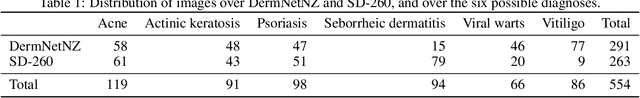

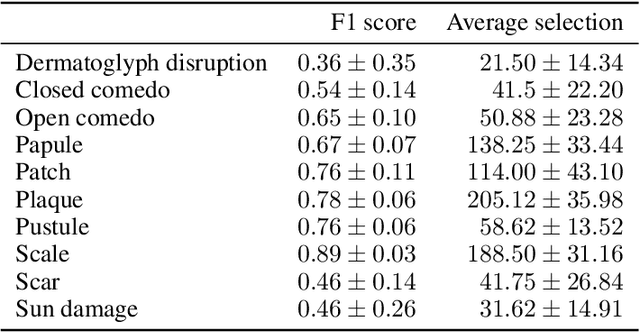

Abstract: Dermatological diagnosis automation is essential in addressing the high prevalence of skin diseases and the critical shortage of dermatologists. Despite approaching expert-level diagnosis performance, convolutional neural network (ConvNet) adoption in clinical practice is impeded by their limited explainability, and by subjective, expensive explainability validations. We introduce DermX and DermX+, an end-to-end framework for explainable automated dermatological diagnosis. DermX is a clinically-inspired explainable dermatological diagnosis ConvNet, trained using DermXDB, a 554-image dataset annotated by eight dermatologists with diagnoses and supporting explanations. DermX+ extends DermX with guided attention training for explanation attention maps. Both methods achieve near-expert diagnosis performance, with DermX, DermX+, and dermatologist F1 scores of 0.79, 0.79, and 0.87, respectively. We assess the explanation plausibility in terms of identification and localization, by comparing model-selected with dermatologist-selected explanations, and gradient-weighted class-activation maps with dermatologist explanation maps. Both DermX and DermX+ obtain an identification F1 score of 0.78. The localization F1 score is 0.39 for DermX and 0.35 for DermX+. Explanation faithfulness is assessed through contrasting samples, with DermX obtaining 0.53 faithfulness and DermX+ 0.25. These results show that explainability does not necessarily come at the expense of predictive power, as our high-performance models provide both plausible and faithful explanations for their diagnoses.
